
Accordance

Question: You have completed a review of classical information theory (in the three scripts "Information Theory") together with a review of your new one, is that right? Why are these theorems so similar, what is the difference, and why?

Answer: Yes, such a similarity is surprising, considering that the two theories are based on seemingly completely different principles. Classical information theory is an extension of the deterministic concept, otherwise the (unofficial) standard of modern science, while mine begins with the objectivity of chance. The world of the former is like a game of chess, in which moves alternate one at a time between two players, subject and object, independently of the "outside world" (strictly isolated from other dimensions), in contrast to my ergodic approach and emphasis on multiprocessing. The former describes a world of tacit certainty, the latter one of declared uncertainty.

It would seem that if the first is true then the second is not, and vice versa, so that placing them side by side should reveal the discrepancy and give us definitive proof of the existence of real chance. At least that is what I thought at first. But, alas, it turns out that both theories are correct! What now? I think there are two reasons behind so many similarities.

First, there is practically no chance at the macro level. More precisely, chance events become ever rarer in a world of ever larger numbers, so that on a scale from ultimate non-causality to ultimate causality, macro-level laws are relatively exact. Moreover, every exact theory (geometry with algebra, algebra with analysis, analysis with probability, and so on) agrees with (does not contradict) every other exact theory; indeed, every part of an exact theory agrees with every part of every other exact theory.

Second, "objective randomness" is also a relative term. The hunter who hunts game knows something about traps, precisely what the game does not know and what is "objective uncertainty" for the animal. We therefore communicate (interact) by exchanging information (actions), because we never have everything we need, and we cannot get it by "pure thinking" (without interactions). Chance is relative, because one party (subject, object, situation) knows something that the other does not, but it is also absolute, because there is no one who knows everything. It is layered, and so it allows us to "advance" in knowledge.

How mathematically deep this explanation is can be demonstrated by the number π = 3.14159265359..., as well as by the decimals of many other irrational numbers. When we read those digits for the first time, they look like random numbers; moreover, their "randomness" is confirmed by the statistical tests of (mathematical) probability theory. On the second and every subsequent reading, however, their non-randomness is revealed, since exactly the same digits come out again. This is not to claim that it is the whole truth about uncertainty, primarily because of the principled multiplicity that follows.
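The "first reading" of π can be tried directly. The sketch below is an illustration, not a method from the scripts: it assumes Gibbons' unbounded spigot algorithm as the digit source, counts how often each digit 0-9 appears among the first 1000 digits, and computes a Pearson chi-square statistic against equal frequencies. The helper names `pi_digits`, `first_digits` and `chi_square_uniform` are hypothetical. Running it twice gives the same digits, which is the "second reading".

```python
from collections import Counter
from itertools import islice


def pi_digits():
    """Yield the decimal digits of pi (3, 1, 4, 1, 5, ...) with Gibbons' unbounded spigot."""
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # The next digit n is now certain: emit it and rescale the state.
            yield n
            q, r, n = 10 * q, 10 * (r - n * t), (10 * (3 * q + r)) // t - 10 * n
        else:
            # Not yet certain: fold in one more term of the underlying series.
            q, r, t, k, n, l = (q * k, (2 * q + r) * l, t * l, k + 1,
                                (q * (7 * k + 2) + r * l) // (t * l), l + 2)


def first_digits(count):
    """The first `count` digits of pi as a list of ints."""
    return list(islice(pi_digits(), count))


def chi_square_uniform(digits):
    """Pearson chi-square statistic of the digit counts against equal frequencies."""
    counts = Counter(digits)
    expected = len(digits) / 10
    return sum((counts[d] - expected) ** 2 / expected for d in range(10))


digits = first_digits(1000)
print("first digits:", "".join(map(str, digits[:12])))
print("counts:", [Counter(digits)[d] for d in range(10)])
# With 9 degrees of freedom, values below about 16.92 are not significant at the 5% level.
print("chi-square:", round(chi_square_uniform(digits), 2))
```

The chi-square test is only one of many possible randomness tests; it is used here because it is short and standard.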

(Dis)Order

Question: Is there order in the disorder? Is it possible for some causality to occur from pure, absolute randomness?

Answer: Of course it can; this is at the foundation of probability theory. At its very core is the (unspoken) principle that more likely events are more frequent outcomes. It is also the basic principle of (my) "information theory", called "information minimalism". According to this principle, all states of nature spontaneously evolve into less informative ones.

In other words, this extended theory of information does not go beyond the understanding, already recognized within the classical theory, that more probable events are less informative.

The consequences of that "principled minimalism" are enormous. For example, perception information, which starts from the coupling of subject and object as a unit of perception (S = ax + by + cz + ...), gives a larger sum if the factors in the summands are arranged so that the larger factors of one (the subject) multiply the larger factors of the other (the object), and a smaller sum when the larger factors of one multiply the smaller factors of the other. These minima are achieved unconditionally by dead physical objects, which never give up the principle of least action, while living beings are characterized by additional options of choice, of defying, let's say, that "natural course of things". Therefore, defiance, freedom, excess of options and vitality go "hand in hand" in my theory of information.
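The ordering claim about S = ax + by + cz + ... is an instance of the rearrangement inequality. This minimal Python sketch checks it by brute force over every pairing of subject factors (a, b, c) with object factors (x, y, z); the numbers are made up for illustration and `perception_sum` is a hypothetical helper name, not a function from the author's scripts.

```python
from itertools import permutations


def perception_sum(subject, obj):
    """S = a*x + b*y + c*z + ... for one pairing of subject and object factors."""
    return sum(s * o for s, o in zip(subject, obj))


subject = [3, 1, 2]  # illustrative values for a, b, c
obj = [5, 4, 1]      # illustrative values for x, y, z

all_sums = [perception_sum(subject, p) for p in permutations(obj)]
same_order = perception_sum(sorted(subject), sorted(obj))                # larger with larger
opposite = perception_sum(sorted(subject), sorted(obj, reverse=True))    # larger with smaller

print("max over all pairings:", max(all_sums), "| same order:", same_order)   # 24 | 24
print("min over all pairings:", min(all_sums), "| opposite order:", opposite)  # 16 | 16
```

Sorting both sequences in the same direction always yields the maximum and sorting them in opposite directions yields the minimum, whatever (real) numbers are chosen.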

This theory sees the emergence of life the way we see "rebellious" storms, all of whose particles without exception obey the "principle of least action" of physics, or geysers, which may look to us as if the universal force of gravity did not apply to them. In this sense, it sees disorder in order and, vice versa, order in disorder.

Vitality, therefore, is that excess of information characteristic of living beings, as opposed to the inanimate substance they are built of. Both together are subject to the minimalism of information, in an effort to get rid of all possible excesses. But there is also the law of conservation of information, and the central thesis of this theory that information is the fabric of space, time and matter, and that uncertainty is its essence. In other words, the environment is equally filled with "redundancies" that it would also like to get rid of.

That is why interruptions of "life", i.e. occurrences of "death", although expected like the cessation of storms, can be delayed for an unexpectedly long time. However, traces of those efforts of life to pass into death can be seen on all sides, if we look carefully. These are desires for inaction, laziness, surrendering to the "natural flow of things", but also giving one's freedom to the collective for security, and various team arrangements for efficiency and emergence. Note that birds flying harmoniously in a flock individually have less freedom. It is likewise evident that each such aspiration moves us towards a state of less total information.

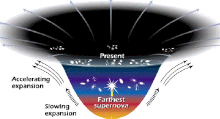

The struggle for freedom and the escape from it, otherwise topics of psychology and the social sciences, thus become subjects of information theory. The roots of those processes reach back to the origin of the cosmos, believed to have been about 13.8 billion years ago. From a hot, dense mush, the universe gradually cooled and developed into a vast, cold and sparsely populated expanse. The entropy of its substance grew and the information of its parts decreased, while complexity, the multiplicity of independent parts, precision, and the accumulation of "memories" in space all increased.

Adaptation

Question: Can you clarify for me what you mean by “adaptation” in the information theory you are researching?

Answer: OK. I assume you know that a probability, p ∈ [0,1], is a non-negative number not greater than one, and the greater it is, the more certain the event it represents. An impossible event has zero probability (p = 0), and a certain event has unit probability (p = 1).

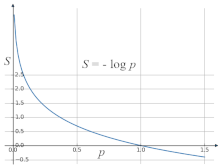

Information according to Hartley (1928) decreases logarithmically with probability, S = -log p (since log p ≤ 0 for p ≤ 1, its negative is non-negative), as in the picture on the right. Intuitively, more likely events should be less informative. When we know that something is going to happen and it happens, we do not get big news. That is why we say "a man bit a dog" is more news than "a dog bit a man": the first is less expected.
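A quick numerical look at Hartley's S = -log p, as a minimal sketch. It assumes base-2 logarithms, so the unit is the bit; the text leaves the base unspecified, and any other base only rescales the values. The function name `hartley_information` is illustrative.

```python
import math


def hartley_information(p):
    """Hartley information S = -log2 p, in bits, of an event with probability 0 < p <= 1."""
    if not 0 < p <= 1:
        raise ValueError("p must satisfy 0 < p <= 1")
    # log2 p <= 0 here, so abs() equals -log2 p (and avoids printing -0.0 for p = 1).
    return abs(math.log2(p))


for p in (1.0, 0.5, 0.25, 0.01):
    print(f"p = {p:<5} S = {hartley_information(p):.3f} bits")
```

A certain event (p = 1) carries 0 bits, a fair coin flip 1 bit, and a 1-in-100 event about 6.6 bits: the less expected, the more "news".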

The third term we need is "mean value". When we work with equally likely numbers, it is the "arithmetic mean": half of the sum of two numbers, a third of the sum of three and, in general, the n-th part of the sum of n equally likely numbers, (a1 + ... + an)/n. When the numbers are selected according to some probability distribution (p1 + ... + pn = 1), the more frequent, more likely numbers contribute more to the mean, so it is fair to weight them more, and we arrive at the general expression p1a1 + ... + pnan.

Applied to the Hartley informations of a probability distribution, this mean is S = -p1 log p1 - ... - pn log pn, which is Shannon's (1948) definition of the mean information of a probability distribution. It is minimal in the sense of perception information, since in the summation the higher probabilities (pk) are multiplied by the smaller informations (-log pk) and, vice versa, the smaller probabilities by the larger ones.
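The two steps above, the probability-weighted mean and its application to Hartley informations, fit in a few lines. This is a minimal sketch assuming base-2 logarithms (bits); `weighted_mean` and `shannon_information` are hypothetical names chosen for illustration.

```python
import math


def weighted_mean(values, probs):
    """General mean p1*a1 + ... + pn*an for a probability distribution `probs`."""
    if abs(sum(probs) - 1) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * a for p, a in zip(probs, values))


def shannon_information(probs):
    """Shannon information: the probability-weighted mean of the Hartley informations -log2 p."""
    # Outcomes with p = 0 get weight 0, so they contribute nothing to the mean.
    hartley = [-math.log2(p) if p > 0 else 0.0 for p in probs]
    return weighted_mean(hartley, probs)


print(weighted_mean([1, 2, 3], [1 / 3] * 3))   # equal weights: the arithmetic mean, about 2
print(shannon_information([0.5, 0.5]))         # 1.0 bit
print(shannon_information([0.25] * 4))         # 2.0 bits
print(shannon_information([0.7, 0.2, 0.1]))    # less than log2(3), about 1.585
```

For n outcomes, the uniform distribution gives the largest value, log2 n; any unequal distribution of the same n outcomes gives less.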

In the attachment Emergence II (Theorem) it is proved that the mean, taken with one probability distribution (pk), of the informations of another (Ik = -log qk) attains its smallest value (Σk pkIk) if and only if the two distributions are equal (pk = qk for all k = 1, ..., n). That minimum of the "system" average is, therefore, precisely Shannon's information.
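The theorem cited above is known in the literature as Gibbs' inequality: Σk pk(-log qk) ≥ Σk pk(-log pk), and the difference is the Kullback-Leibler divergence. A minimal numerical sketch, assuming base-2 logarithms and an arbitrary example distribution p, samples many random distributions q and confirms that none gives a smaller mean than q = p. The names `cross_information` and `random_distribution` are hypothetical.

```python
import math
import random


def cross_information(p, q):
    """Mean of the informations I_k = -log2 q_k taken with respect to p: sum_k p_k * I_k."""
    return sum(pk * -math.log2(qk) for pk, qk in zip(p, q) if pk > 0)


def random_distribution(n, rng):
    """A random probability distribution with n strictly positive entries."""
    w = [rng.random() + 1e-9 for _ in range(n)]
    s = sum(w)
    return [x / s for x in w]


rng = random.Random(0)
p = [0.5, 0.3, 0.2]                    # illustrative distribution
shannon = cross_information(p, p)      # the case q = p is Shannon's information
worst_gap = min(cross_information(p, random_distribution(3, rng)) - shannon
                for _ in range(10_000))
print("Shannon:", round(shannon, 4), "| smallest excess over 10000 random q:", worst_gap)
```

The smallest excess found is positive but small; it reaches zero only when q coincides with p.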

Equipped with this knowledge, we can now answer the question posed to me. Minimalism of information, i.e. maximalism of probability, makes systems tend to develop into less informative ones, and these are the processes of "adaptation". The requirement of a good fit is like looking for the right "key to a lock". More precisely, subject-object couplings or similar pairings form, and the better-integrated ones "win" in a "competition" in which everything possible naturally seeks a state of less information, say, less involvement of its own action.

A better adaptation is a descent of the "number of options" into less informative states, that is, into greater peace, greater efficiency, more direction and more organization or, if it is about the content of a text, into more meaningful states. Reason, in order to give birth to some "strong" thought, would first have to possess an excess of information that it will then reduce, adapt, just as obtaining useful work from lowering a load is preceded by lifting it. Dead matter does not have such a level of "reason" and, as an object, is without excess (potential) energy.

Extremes

Question: Are there extreme information values of given structures and what can we know about them?

Answer: For the better-known structures, maximum information values exist and have already been mentioned above in the multiplication of sequences. Their sums are formed by pairing the larger components of one with the larger components of the other, and the smaller with the smaller, which is an "unnatural" situation for pure physics and thus characteristic of vitality. However, both strive for less information, so we can consider the maximum states unstable, overstressed.

Let's look at several ways of proving such a proposition. We know that more probable events are more frequent outcomes, but it is not widely known that this "spontaneity" of higher probabilities can also be understood as an "uncertainty force". It is the first obstacle to equal opportunities, from which various diversities follow, and in them it is often difficult to recognize the initial cause.

Intuitively, we know how to give competitors equality at the start of a contest, so that the fights are fair, but also fiercer and livelier. Again, the "tit-for-tat" strategy or tactic, which follows the opponent's actions with timely, proportionate and sufficiently uncertain reactions, has greater vitality, which is why it is the favorite strategy in tournaments of game-theory algorithms. These tournaments are experimental confirmations of the theoretical position that pairing stronger with stronger and weaker with weaker incentives makes the game more intense.
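For readers who have not met "tit-for-tat", here is a minimal sketch of the plain, deterministic version in an iterated Prisoner's Dilemma. Assumptions: the payoff values T=5, R=3, P=1, S=0 are the commonly used convention, the opponents and the 10-round length are chosen for illustration, and the function names are hypothetical. It shows only the strategy's mechanics (cooperate first, then mirror the opponent), not the author's claims about vitality.

```python
# Payoffs (row player, column player) with the common values T=5, R=3, P=1, S=0.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def tit_for_tat(opponent_history):
    """Cooperate on the first move, then copy the opponent's previous move."""
    return "C" if not opponent_history else opponent_history[-1]


def always_defect(opponent_history):
    return "D"


def always_cooperate(opponent_history):
    return "C"


def play(strategy_a, strategy_b, rounds=10):
    """Iterated Prisoner's Dilemma; returns the total scores (a, b)."""
    moves_a, moves_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        a = strategy_a(moves_b)  # each strategy sees only the opponent's past moves
        b = strategy_b(moves_a)
        pa, pb = PAYOFF[(a, b)]
        score_a += pa
        score_b += pb
        moves_a.append(a)
        moves_b.append(b)
    return score_a, score_b


print(play(tit_for_tat, always_cooperate))  # (30, 30)
print(play(tit_for_tat, always_defect))     # (9, 14)
print(play(tit_for_tat, tit_for_tat))       # (30, 30)
```

Tit-for-tat never outscores its opponent in a single match, yet it loses only the first round to a defector and fully exploits mutual cooperation, which is why it does well over a whole tournament.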

An example of the stratification of equality is free (scale-free) networks (Stratification). When we want peer-to-peer "communication", we get a network in which a few nodes have many connections and many nodes have few connections. This is the result of increasing the efficiency of the network (communication), i.e. reducing the flow of information, or simply of the higher chance that new links will be added to a node that already has more of them (that is, the more likely outcome).
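The "more links attract more links" mechanism can be simulated with preferential attachment, the standard model of scale-free networks (Barabási-Albert style, here with one link per new node). This is a minimal sketch under those assumptions, not the model from the Stratification text; the function name and the network size are illustrative. Each new node picks an existing endpoint uniformly from the list of all link endpoints, which is the same as choosing a node with probability proportional to its degree.

```python
import random
from statistics import mean, median


def preferential_attachment(num_nodes, rng):
    """Grow a network: each new node links to one existing node chosen with
    probability proportional to that node's current degree."""
    degrees = [1, 1]     # start from two nodes joined by one link
    endpoints = [0, 1]   # every link adds both of its endpoints to this list
    for new in range(2, num_nodes):
        target = rng.choice(endpoints)   # degree-proportional choice
        degrees.append(1)
        degrees[target] += 1
        endpoints.extend((new, target))
    return degrees


rng = random.Random(42)
deg = preferential_attachment(10_000, rng)
print("mean degree:", round(mean(deg), 3), "| median:", median(deg), "| max:", max(deg))
print("nodes with a single link:", sum(d == 1 for d in deg))
```

The mean degree is just under 2, the median node has a single link, while a few hubs collect dozens or hundreds of links: few nodes with many connections, many with few.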

This is a mathematical theory of networks, so it has many applications wherever the efficiency of peer-to-peer flows of "something" (anything) is concerned. An example is the flow of money, goods and services (links) between owners (nodes) in a free market, which spontaneously develops into a few super-rich owners and numerous relatively poor ones. A similar example is the acquaintances that people freely make with each other, which develop into a few individuals with many acquaintances and many significantly lonelier ones.

Many propositions of classical information theory also (covertly) speak of this same phenomenon. Such are, say, the "Two Theorems" (Information Theory III, section 81.2), which establish that numerous chances always reduce to a few significant ones and a mass of others. The evidence is almost everywhere around us; we just need to notice it.

At the same time, an interesting question is what happens to the whole system whose parts lose information in these ways, given the law of conservation. I have discussed this in the aforementioned scripts, formally and with many formulas, so it makes sense to use "trivial" examples here. The decline in the information of the parts of the system is compensated by the appearance of new independent additions to that system, while the total information remains constant. Examples are past civilizations evolving toward maturity, then bursting at the seams, disintegrating and disappearing (Democracy).

Since we are dealing with quantities, we can discuss the other extreme, the minima of information, in the manner of physics. In writing about Emmy Noether, and earlier of course, I noted her conclusion that the presence of "immutability", meaning some symmetry (geometrically we would say isometry), represents in physics the conservation of a corresponding physical quantity. That is how she (1915) interpreted the second-order partial differential equations discovered less than two centuries earlier by Euler and Lagrange (1750-1788) in establishing the "principle of least action" of physics.

Conservation of a quantity, however, also implies its finite divisibility. An infinite set can be defined precisely as one that can be put into one-to-one correspondence with a proper subset of itself, just as the even numbers are a proper subset of all natural numbers and both sets are infinite. Infinite divisibility is therefore impossible for a quantity to which a conservation law applies, both in physics and in informatics, since a part could then hold as much as the whole. Hence also my (hypo)thesis on the equivalence of information and action (quanta). That is why proofs of theorems proceed in steps (indivisible packages), as do legal paragraphs, communication and knowledge in general.

What is specific about the term "information" in such discussions is that its essence is uncertainty. Namely, when an information system is broken down into its elements, "pure uncertainty" appears. Beyond it, by possible further division, there would be its negation, ever-increasing certainty. This apparently very bold assumption has a foothold in the treatment of the states and processes of quantum mechanics by means of linear operators (or matrices). We know that every such operator can be decomposed into corresponding factors, even in different ways. But more about that another time.

Outside this band, from minimum to maximum, I believe that information still makes sense to investigate, but there it is no longer the subject of classical physics. It extends even further, into the mathematics of infinity. For example, if the probability p → 0, then the information H = -log p → ∞. On the other hand, when the number of options N falls below one, if this assumption is allowed, then H = log N becomes negative and again |H| → ∞ as N → 0.

Attraction

Question: What are the “uncertainty forces”, can you explain them to me without formulas?

Answer: I will try. We know that more likely outcomes are more common, but let us now look at this as a "force" and compare it with real, physical forces. This is exactly what I wrote about in the book "Minimalism of Information" (2.5 Einstein's general equations), following the derivation of relativistic gravity from the principle of least action.

It may seem surprising that these are the same equations Einstein (1916) discovered in an apparently quite different way, but it is not, since (my) information theory predicts that action and information are equivalent. At the same time, it is another confirmation of the existence of the principled minimalism of information.

Like centrifugal and centripetal forces, and actions and reactions in general, this attractive "gravitational force" has its counterpart, the "uncertainty force", which I recently tested statistically (Informatics Theory I, II, III). Let's imagine a sequence (n = 1, 2, 3, ...) of random events, experiments that we repeat (M = 1, 2, 3, ...) many times. Whenever an event of the sequence is realized, we increase its count by one and keep the results in another sequence of numbers, which we call an (n-dimensional) vector.

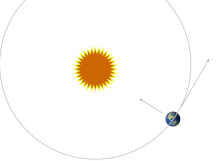

No matter how many (n) dimensions they have, two such vectors span one plane, a surface whose area we calculate. Such spaces are abstract, metric. I borrow them from mathematical analysis, while from physics I take forms going back to Kepler (17th century), and I was not surprised that the mentioned sequences, vectors, sweep equal areas in equal "times" (steps of the number of trials), just as the planets do on their journey around the Sun, and that the "vectors" trace (statistically accurately enough) hyperbolic paths.
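The two ingredients of this experiment, count vectors and the area of the plane two n-dimensional vectors span, can be sketched as follows. Assumptions: the outcome probabilities, the seed and the chosen trial indices are illustrative, and the helper names are hypothetical. The area comes from the Gram determinant, which works in any dimension. The sketch does not reproduce the Kepler-like result of the scripts, whose exact setup (for example, the reference point from which areas are measured) is not given here.

```python
import math
import random


def spanned_area(u, v):
    """Area of the parallelogram spanned by vectors u and v of any dimension n,
    computed from the Gram determinant: sqrt(|u|^2 |v|^2 - (u . v)^2)."""
    uu = sum(a * a for a in u)
    vv = sum(b * b for b in v)
    uv = sum(a * b for a, b in zip(u, v))
    return math.sqrt(max(uu * vv - uv * uv, 0))  # clamp tiny negative rounding errors


def count_vectors(probs, trials, rng):
    """Repeat a random experiment with outcome probabilities `probs`; after each
    trial, record the n-dimensional vector of outcome counts so far."""
    counts = [0] * len(probs)
    history = []
    for _ in range(trials):
        k = rng.choices(range(len(probs)), weights=probs)[0]
        counts[k] += 1
        history.append(counts.copy())
    return history


rng = random.Random(1)
history = count_vectors([0.5, 0.3, 0.2], trials=1000, rng=rng)
print("counts after 1000 trials:", history[-1])
print("area spanned by the vectors at trials 500 and 1000:",
      round(spanned_area(history[499], history[999]), 2))
```

In three dimensions the Gram formula agrees with the length of the cross product; its advantage is that it needs only dot products, so it works unchanged for any n.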

In the script Prilozi (Central motion) you will find the proof that a constant central force moves bodies along conics (ellipses, parabolas, hyperbolas), regardless of its strength. Hyperbolas occur with repulsive forces, as with the "uncertainty forces". The strength of these constant forces is determined by the distribution parameters (expectations, dispersions), which can differ. We know that such "constant central forces", speaking generally of the forces we can obtain from the equations of theoretical physics, decrease with the square of the distance (like the electric or gravitational force) if their action spreads at the speed of light. The abstract forces of uncertainty also do this, when we look at the disturbances they cause physically.

In addition to "experimental" confirmations, obtained by generating random numbers and processing the data by computer to find the expectations of the "vectors", this phenomenon can be proved by "pure" probability theory.

For example, start from Chebyshev's inequality for probabilities (Informatička Teorija I, 26. Disperzije):

$$\Pr(|X| \ge r) \le \frac{\sigma^2}{r^2},$$

where X is a random variable taken so that its mean value is zero, σ² is the variance (dispersion) of its distribution, and r > 0 is an arbitrary number. It says that the probability that the random variable takes values of magnitude at least r is bounded by a quantity that decreases with the square of that number. In other words, the limits reached by "randomness" decrease with the square of the distance. Other analogies between "abstract" probability spaces and "real" physical forces follow from this.
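A minimal empirical check of Chebyshev's inequality. Assumptions: standard normal samples, the seed and the radii r are arbitrary choices, and `chebyshev_check` is a hypothetical helper. The samples are centered on their own mean, as the text requires, and the sample's own variance is used. Because the inequality holds for every distribution, including the empirical one, the observed tail frequency can never exceed the bound.

```python
import random


def chebyshev_check(samples, radii):
    """Compare the empirical tail frequency P(|X| >= r), for X centered to mean zero,
    with the Chebyshev bound sigma^2 / r^2 computed from the same sample."""
    n = len(samples)
    m = sum(samples) / n
    centered = [x - m for x in samples]           # mean zero, as in the text
    var = sum(c * c for c in centered) / n        # variance of the sample itself
    rows = []
    for r in radii:
        tail = sum(abs(c) >= r for c in centered) / n
        rows.append((r, tail, var / r**2))
    return rows


rng = random.Random(7)
data = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
for r, tail, bound in chebyshev_check(data, [1.0, 1.5, 2.0, 3.0]):
    print(f"r = {r:3.1f}  tail = {tail:.4f}  Chebyshev bound = {bound:.4f}")
```

For the normal distribution the actual tails fall much faster than 1/r²; Chebyshev gives the worst case over all distributions with the given variance.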

Riddle

Question: What exactly is that “information”; you say that it is the fabric of everything and its essence is uncertainty?

Answer: That definition is too "materialistic", and I use it only for a wider audience. I recommend a more suitable working definition: information is what you get when you do not have it, and you do not have it when you have it. Mathematics digests such notions, as does the "fabric" of logic and physics. I admit that I do not have the talent to convey the finest abstractions to non-mathematicians, so in stooping to simplify I easily spoil their beauty.

Quantum physics is full of primordial information that we rarely see in the macro world. For example, the "double slit" experiment, in its quantum interpretation at the beginning of the 20th century, first announced the absurdity of the structure of the world of old physics and the enigmatic nature of information: by observing the process (experiment) we get one thing, and by not observing it, something different. Such is the "fabric" of the cosmos.

Physically, information still seems partly "substantial" thanks to the law of conservation, but this is deceptive. Chasing such things, we will quickly run aground. We would not be able to fully understand "uncertainty", whose physical force comes from "nothing", which, if we manage to "feel" it, is certainly not nothing. What the subject (person, object, process) has perceived is certainty. It is like the state of a die after the throw, when all the uncertainty has melted into one of the outcomes, while the information stays the same during the realization.

If information were really a "thing", with a deeper "corporeal" essence, the stories about the annihilation and creation of particles would remain without a final resolution, waiting for a deeper meaning that is not forthcoming. We would be seduced by the miracle by which a particle, caring about nothing "in itself" except the amounts of spin, energy and momentum, becomes something "essentially" different. Mathematics, on the contrary, has understood abstractions since the time of Euclid and does not need physicality to understand regularity.

So, when you are dealing with informatics, try to stick to a logical structure rather than a material (building) structure, and our points of view will come closer together. I am not saying that this will be enough for either of us to get to the bottom of its magic.

Annihilation

Question: Can you tell me something about particle annihilation physics?

Answer: Not much, nothing new (LHC), except for a few guesses. It is known, for now, that every particle has a corresponding antiparticle, and that some are their own antiparticles (e.g. the photon, the Z boson and the Higgs boson). The universality of this phenomenon, consistent with the statements of TI (information theory), points to informatic effects.

For bosons there is no negative-energy state, so it follows that they do not have separate antiparticles, i.e. they are their own antiparticles. Whenever a boson field has a negative squared mass, a phase transition occurs, a change in the properties of the vacuum, so that the particle eventually gains a positive mass (this is the Higgs mechanism in the Standard Model of particle physics).

Accordingly, bosons are slightly more stable than fermions. I hope this also means, if only slightly, that bosons are less likely to turn into fermions than the other way around. Speaking generally of the growth of the entropy of the substance of the cosmos, and hence the reduction of its information, and of the melting of matter into space in order to maintain the total amount of information by thickening the memory of the immediate past, we get another confirmation of my earlier (hypo)thesis: space behaves more like "its own" with bosons, and this in itself justifies classifying bosons as elements of space.

The negatively charged electron is a fermion and has an antiparticle called the "anti-electron" or "positron". In this respect it is similar to the much heavier and more complex proton. This is true for most known particles: the muon has an antiparticle called the anti-muon; the up quark has the up antiquark; the antiparticle of the positively charged W is the negatively charged W.

However, if we bring a particle and an antiparticle together, almost all of their properties cancel out. The electric charge of the muon (the heavy cousin of the electron) cancels out with the electric charge of the anti-muon: the first is negative, while the second is equally positive. Only their mass and energy do not cancel out. Mass is "conserved" energy, so we are actually talking only about the change in energy (ΔE) and the duration of the change (Δt), that is, the action (ΔE⋅Δt). Physical action is equivalent to information (in my theory).

The only things that remain certain in these processes are energy and time. Seen over constant time intervals, energy is conserved: it neither arises nor disappears in total quantity. However much you start with, you end up with the same amount. This is because the phenomenon of energy is actually a matter of information. The number of outcomes of random events defines the speed of the passage of time, and energy changes during realization, i.e. information. Therefore, everything else (such as charge, momentum and spin) needs to be explained by, for example, principled information minimalism, or the whole idea of "information theory" is left hanging.

The significance of the (new) "informatics" interpretation of the structure of the physical universe is enhanced by the possibility of "essentially different" particles transforming into each other. For example, an electron and an anti-electron can become a muon and an anti-muon when they collide head-on with a large enough amount of energy, but not if the electron and positron are initially at rest.
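"Large enough" can be made concrete with the kinematic threshold of e⁺e⁻ → μ⁺μ⁻: the total energy in the centre-of-momentum frame must be at least the rest energy of the muon pair. The sketch assumes the rounded standard rest energies (electron about 0.511 MeV, muon about 105.66 MeV); the 110 MeV beam energy and the helper name `can_make_muon_pair` are illustrative.

```python
# Rest energies in MeV (standard reference values, rounded).
ELECTRON_MEV = 0.51099895
MUON_MEV = 105.6583755


def can_make_muon_pair(total_cm_energy_mev):
    """e+ e- -> mu+ mu- is kinematically allowed only if the total energy in the
    centre-of-momentum frame reaches the rest energy of a muon-antimuon pair."""
    return total_cm_energy_mev >= 2 * MUON_MEV


at_rest = 2 * ELECTRON_MEV   # electron and positron initially at rest
head_on = 2 * 110.0          # two equal 110 MeV beams colliding head-on (illustrative)

print("threshold (MeV):", round(2 * MUON_MEV, 2))               # 211.32
print("at rest ->", can_make_muon_pair(at_rest))                # False
print("head-on 2 x 110 MeV ->", can_make_muon_pair(head_on))    # True
```

For two equal beams colliding head-on, the laboratory frame is the centre-of-momentum frame, so the total energy is simply the sum of the beam energies; at rest the pair has only about 1.02 MeV, roughly 200 times too little.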

As for my further "guesses" about the processes of annihilation, they are below the quality of these, I think, so I will keep them to myself for now.

Mass

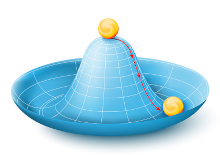

Question: You have an explanation for the origin of boson mass that differs from the Higgs mechanism?

Answer: Yes, it seems so, but only in its starting point. The Higgs mechanism of the Standard Model of particle physics explains how gauge bosons gain mass. The relativistic model of that phenomenon was developed independently in 1964 by three groups of physicists, one of them being Peter Higgs. Using this mechanism, the physicists Weinberg and Salam formulated the electroweak theory (unifying the electromagnetic and weak forces), which by 1973 had grown into the modern version of the Standard Model of particle physics. It was not until 2012 that the Higgs boson was finally observed at CERN's LHC (Large Hadron Collider), confirming the mechanism.

The picture on the left represents a symmetric but unstable state, with the upper particle in the condensed energies of the universe at the very beginning of the "Big Bang", about 13.8 billion years ago. The lower particle is in a stable but asymmetric state of lower energy, created by the dilution of the former through the expansion of space.

The beginnings of this story are dealt with by (my) theory of information, while its continuations are mostly accepted physics. Information is the outcome of uncertainty, a short-term change, that is, a combination of physical expression (energy) and duration. In principle, information would prefer to be absent (minimalism) and unchanged (conservation), which are actually forms of one and the same thing, its sluggishness.

At the beginning, seen from our present point of view, the space of the "Big Bang" had a dense energy, an increased possibility of "unwanted outcomes" (emissions of information that would be rare today), and a relatively accelerated flow of time. In that mess, the stretching of space-time, by increasing the diameter of the universe and slowing down time, thins out events. The information per event declines, but new independent events appear, thus maintaining the overall "amount of uncertainty".

This, by the way, complements the explanation of how the electromagnetic and weak forces separated from the electroweak force, with the asymmetry arising from a spontaneous escape from equality. A set of equally probable outcomes carries more total information than the same number of outcomes with different probabilities, and among the resulting differences there are places with their own duration. The only particles known to us today without their own (proper) time are those that move at the speed of light. They owe their duration, more precisely their movement in space and time, to other subjects: looking at them, which simply stand at the places most likely for them, other subjects see them in motion.

Those other entities, which have their own flow of time, have mass. It is absurd that we call it "rest mass" when in fact it gives them the right to their "own movement". The time that comes with "mass" spreads not only in depth, as "the past", but also across the widths of other temporal "dimensions", hence the even greater sluggishness.

Namely, massive physical objects extend through space-time. Those with size and duration are, by definition, in different "places" and at different "times". For example, by the time a signal travels from one end of a body to the other, say from our feet to our head, some time passes, which means that the body does not exist as a whole in a single present. Thus, a "mass" phenomenon becomes a "composition" of information that slows itself down in motion.

Having one's own time, due to the very duration and movement, results in slowing down. It accumulates information and then, due to minimalism, continues with further expansion into space-time dimensions. Objects of ever-increasing mass are more and more attractive to others, because of the universal spontaneous tendency toward less information, with a spreading spatial and temporal influence. This means that mass, if we are talking about gravity, can act through layers of the past or through layers of "side" dimensions of time.

I assume that this influence of gravity through time is formally equal to the known spatial one, but because the speed of light (c ≈ 300,000 km/s) is so high compared with CGS (centimeter, gram, second) units or any other system of physical units convenient for us, it should be negligible. Since the present travels into the future at the speed of light, while light itself stays at rest in time, we represent units of "length of time" by the distance light travels (x = ict) in a given time (t). At the same time, these paths are imaginary (i² = -1) and become real only by squaring, which further reduces the importance of the effect of gravity through the layers of time.

I believe that the effects of this action, of gravity through time, are also confirmed by the long-known precession of the perihelion of Mercury's elliptical orbit around the Sun (Le Verrier, 1859), by the later general theory of relativity (Einstein, 1916), which establishes the curvature of space-time by gravity, i.e. its pull on mass, and by the still "unexplained" dark matter. But that is another topic.

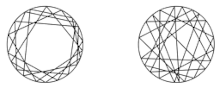

Ergodicity

Question: Can you tell me something about "ergodicity"?

Answer: In mathematics, ergodicity of motion is the assumption that a point, whether of a dynamical system or a stochastic process, will pass close enough to every point in a given space after a long enough time. In the figure, the left circle has a non-ergodic trajectory, and the right circle has an ergodic path. Equivalently, a sufficiently large set of random samples of a process can represent the average statistical properties of the entire process.
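The "equivalently" above, that one long trajectory represents the statistics of the whole process, can be checked on a small Markov chain. This minimal sketch assumes an illustrative three-state chain that is irreducible and aperiodic (hence ergodic); the helper names are hypothetical. It compares the fraction of time a single trajectory spends in each state with the stationary (ensemble) distribution obtained by power iteration, which for this matrix is exactly (9/14, 4/14, 1/14).

```python
import random


def stationary_distribution(P, steps=1000):
    """Approximate the stationary distribution of transition matrix P by power iteration."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(steps):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi


def time_average(P, start, steps, rng):
    """Fraction of time a single long trajectory spends in each state."""
    n = len(P)
    visits = [0] * n
    state = start
    for _ in range(steps):
        visits[state] += 1
        state = rng.choices(range(n), weights=P[state])[0]
    return [v / steps for v in visits]


P = [[0.9, 0.1, 0.0],
     [0.2, 0.7, 0.1],
     [0.1, 0.3, 0.6]]   # illustrative irreducible, aperiodic chain
rng = random.Random(3)
print("ensemble (stationary):", [round(x, 3) for x in stationary_distribution(P)])
print("time average, one trajectory:", [round(x, 3) for x in time_average(P, 0, 200_000, rng)])
```

The two rows agree to about two decimal places, and the agreement improves as the trajectory gets longer.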

Ergodic systems capture the common-sense, everyday understanding of randomness: that smoke will eventually fill an entire room, that a block of metal will reach the same temperature throughout after a while, or that a fair coin will land on either side about half the time.

In "information theory", where "objective randomness" is understood in the above way, ergodicity would additionally mean that every outcome has at least a tiny chance of realization. Such an assumption would have enormous consequences. For example, in the script "Information Theory II" it is proved (61.2. The Ergodic Theorem) that processes in which (almost all) outcomes have some chance take, after enough repetitions, forms that do not depend on the initial state. The processes themselves become "black boxes" of this kind even when some outcomes are forbidden in individual steps (example 3 of the script).
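The forgetting of the initial state can be sketched with a small Markov chain. The 3-state transition matrix below is a hypothetical example, not the one from the script; one transition (state 0 to state 2) is forbidden in a single step, yet within two steps every state can reach every other, so the chain still forgets where it started.

```python
def step(p, K):
    """One transition: p'_j = sum_i p_i * K[i][j] for a row-stochastic K."""
    n = len(K)
    return [sum(p[i] * K[i][j] for i in range(n)) for j in range(n)]


def evolve(p, K, n_steps):
    """Apply the same transition matrix n_steps times."""
    for _ in range(n_steps):
        p = step(p, K)
    return p


# Hypothetical process: K[0][2] = 0 forbids 0 -> 2 in one step,
# but every entry of K*K is positive.
K = [
    [0.5, 0.5, 0.0],
    [0.2, 0.3, 0.5],
    [0.4, 0.4, 0.2],
]

end_a = evolve([1.0, 0.0, 0.0], K, 100)  # start surely in state 0
end_b = evolve([0.0, 0.0, 1.0], K, 100)  # start surely in state 2
print([round(x, 6) for x in end_a])
print([round(x, 6) for x in end_b])  # same distribution: the start is forgotten
```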

The evolution of life on Earth will thus, after a long enough time, take forms that are incredible considering its beginnings. After a very long development (13.8 billion years) of our universe, even if the natural laws have not changed in the meantime, we cannot determine with certainty what was at the beginning. Moreover, although the information of the present is decreasing, and therefore its memory is getting longer, the further into the past we go, the fainter and sparser that memory becomes. If the natural laws also change (imperceptibly), this assumption of ergodicity would imply that some distant past of the universe is objectively inaccessible to us.

Similar interpretations apply to the information that ergodic processes convey as to the processes themselves. Message-carrying channels, such as the former telegraph and telephone cables, today's optical fibers, or wireless transmissions, are prone to errors. If every signal has at least a small probability of arriving as any other, i.e. if all channel probabilities kᵢⱼ > 0 (the probability that the i-th signal is received as the j-th), then the channel has no very long memory, even when it has a very long history.
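For the simplest channel with all kᵢⱼ > 0, the binary symmetric channel (my choice of example, not taken from the script), the memory of a chain of n identical channels can be measured by the mutual information between input and output. The sketch assumes a uniform binary input and an error probability ε = 0.01; n channels in series act as one channel whose error probability is the probability of an odd number of flips, (1 - (1 - 2ε)ⁿ)/2.

```python
import math


def binary_entropy(q):
    """Shannon entropy, in bits, of a two-outcome distribution (q, 1 - q)."""
    if q in (0.0, 1.0):
        return 0.0
    return -q * math.log2(q) - (1 - q) * math.log2(1 - q)


def cascade_crossover(eps, n):
    """Error probability of n identical binary symmetric channels in series."""
    return (1 - (1 - 2 * eps) ** n) / 2


def mutual_information(eps, n):
    """I(X; Y_n) in bits for a uniform binary input X."""
    return 1 - binary_entropy(cascade_crossover(eps, n))


for n in (1, 10, 100, 1000):
    print(n, round(mutual_information(0.01, n), 6))  # memory of the input fades
```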

The cosmos that we "know", whose past we "discover" and thus "reconstruct" with forensic and the best scientific methods, does not have to be what it really was. The older, the more inaccurate; it could even turn out that the idea of the "Big Bang" is a fallacy, as I state in the subsection "61.1. Easier examples" of the mentioned script, in case the ergodic hypothesis holds.

Eigenvalue

Question: What are "own values"?

Answer: "Proper", "own" or "characteristic value" is the (Serbian) name from the algebra of linear operators, while "eigenvalue" is the term used in quantum physics texts around the world. The former field is, of course, much older, broader and more abstract than the latter.

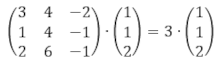

The picture on the left shows an example of a matrix (A) that acts on a vector (v) by tripling its intensity without changing its direction (A⋅v = 3⋅v). That is the general pattern, and matrices are among the most popular substitutes, or representations, of linear operators. These act on vectors so that the sum of the originals is mapped into the sum of the images (linearity); on their eigenvectors they may change the intensity, but not the direction.
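The relation A⋅v = 3⋅v can be checked directly. The matrix below is a hypothetical 2×2 example with eigenvalue 3 (it is not claimed to be the matrix in the picture); the sketch also shows that a vector that is not an eigenvector changes direction, and that the operator is linear.

```python
import math


def mat_vec(A, v):
    """Product of a matrix (list of rows) and a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def eigenvalues_2x2(A):
    """Roots of the characteristic polynomial of a 2x2 matrix (assumed real)."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = math.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2


A = [[2, 1],
     [1, 2]]  # hypothetical operator

v = [1, 1]  # eigenvector: direction kept, intensity tripled
w = [1, 0]  # not an eigenvector: direction changes

print(mat_vec(A, v))       # equals 3 * v
print(mat_vec(A, w))       # not a multiple of w
print(eigenvalues_2x2(A))  # eigenvalues 3 and 1

# Linearity: the image of a sum is the sum of the images.
s = [x + y for x, y in zip(v, w)]
print(mat_vec(A, s) == [x + y for x, y in zip(mat_vec(A, v), mat_vec(A, w))])
```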

Among the most popular interpretations of vectors are "oriented lengths" and "sequences" of numbers, but you should know that polynomials, solutions of differential equations, forces, velocities, or physical states in general are also vector quantities, and, to make matters more interesting (and typically more mathematically complex), so are the operators of linear algebra themselves, and therefore also physical processes.

Therefore, the eigenvalue of an operator tells us how many times it increases (or decreases) the corresponding eigenvector. That vector (own, proper) is special, because not every "process" acts on every "state" in this way, as on something characteristic of itself. Think of processes during which a particle does not disintegrate into others but remains of the type that was "own" to it, that is, the particle is "own" to those processes. Different eigenvalues can belong to the same linear operator, each with its own eigenvector, and vice versa, the same vector can be an eigenvector of different operators.

At the beginning of the 20th century, the algebra theorems in this area were recognized as laws of quantum physics, and these findings became the most accurate thing science has today. This is mainly because the micro-world is otherwise barely accessible, and then it turns out to be "incomprehensible". In this sense, it is fortunate that we did not rely too much on intuition during that research, because otherwise the discoveries would have been delayed by at least as much as some known results that "we cannot understand" today.

Linear operators are also represented by "stochastic matrices", which have already proven themselves in classical information theory as good descriptions of message-carrying channels. The analogy with physical processes also applies. Some media transmit sound well but no light at all. Furthermore, those that transmit light well will not transmit all wavelengths equally. The same is true of message carriers.

Algebra will tell us that an operator (transmitter) changes its own vector (message) the least, and much more. In the script "Information Theory II", and partly in the other two parts of the trilogy, I dealt in detail with the theorems of information "transmission channels". Among them, the proofs are worth a look: for example, that a given channel causes less interference to the information it transmits the closer that information is to the channel's own (eigen, proper) message, together with a more precise definition of the term "closer". In addition, you will see that the message "adapts" to the channel, being transformed into something closer to the channel's own (proper) message through the composition of identical transmitters.
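The "own" message of a channel, and the way other messages approach it, can be sketched for a two-state channel K = [[1 - a, a], [b, 1 - b]]. Its eigen (stationary) distribution π, which satisfies π⋅K = π, passes through unchanged. Here "closer" is measured, as an assumption, by the distance |p₀ - π₀|, which for this channel shrinks by the factor |1 - a - b| on every use; the error probabilities a and b are hypothetical.

```python
def step(p, K):
    """One use of the channel: p'_j = sum_i p_i * K[i][j]."""
    return [sum(p[i] * K[i][j] for i in range(len(K))) for j in range(len(K[0]))]


def eigen_distribution(a, b):
    """Stationary (eigen) distribution pi of K = [[1 - a, a], [b, 1 - b]]: pi K = pi."""
    return [b / (a + b), a / (a + b)]


def distances_to_eigen(p, K, pi, n_steps):
    """Distance |p_0 - pi_0| after 0, 1, ..., n_steps uses of the channel."""
    out = []
    for _ in range(n_steps + 1):
        out.append(abs(p[0] - pi[0]))
        p = step(p, K)
    return out


a, b = 0.1, 0.3  # hypothetical error probabilities
K = [[1 - a, a], [b, 1 - b]]
pi = eigen_distribution(a, b)  # approximately [0.75, 0.25]

print(step(pi, K))  # the eigen message passes through (up to rounding)
print(distances_to_eigen([0.0, 1.0], K, pi, 5))  # shrinks by |1 - a - b| = 0.6 per step
```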

This adaptation has an important negative consequence. A long chain of identical transmitters tends to become a "black box": it gradually changes each initial message into its own, making it impossible to recognize the incoming message from the outgoing one. An example of this phenomenon is in the above description of ergodicity. The agreement of this with the blurring of things described there is a simple property of mathematics: every exact theory agrees with every other exact theory, though each always has some peculiarities of its own.

The loss of the past described there, through the fading of events described here, is due to channel noise. In addition, we see that, for example, the "evolution of species on Earth" tends towards the "own" environment to which the species belong, and that with a change in the environment, evolution will also change its direction (another eigenvector has a different direction). On a cosmic scale, we are also evolving, and so we might just be fantasizing about the events of 14 billion years ago.

Question: Can a matrix be an "eigenvector" of a matrix?

Answer: It can. If M is invertible, the only such matrix is of the form M = λI, where λ is some arbitrary but fixed scalar (number) and I is the unit matrix. Then M⋅M = λ⋅M, so the form of the eigenvalue equation, and thus its consequences, are satisfied. Such a matrix (M) is a pure, optimal, one-way, certain transformation of itself, in the sense that there are no "tails" of the past or "obstructions" by noise.

We see the consequences of its "perfection" in the composition of such matrices, the product Mⁿ = λⁿ⁻¹⋅M = λⁿ⋅I for any n = 1, 2, 3, ..., where λⁿ = exp(-αn) for some number α. When λ ∈ (0,1), λ can be a probability, so α > 0, and M can be a state of a physical system, or a process, but also the distribution of a random variable.
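The composition rule can be checked numerically. The sketch assumes λ = 0.5 (chosen so that floating-point comparisons are exact) and a 3×3 unit matrix; it verifies M⋅M = λ⋅M, Mⁿ = λⁿ⁻¹⋅M, and λⁿ = exp(-αn) with α = -ln λ > 0.

```python
import math


def mat_mul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def mat_pow(M, n):
    """M^n for n >= 1 by repeated multiplication."""
    P = M
    for _ in range(n - 1):
        P = mat_mul(P, M)
    return P


def scaled_identity(lam, size):
    """The matrix M = lam * I."""
    return [[lam if i == j else 0.0 for j in range(size)] for i in range(size)]


def scale(c, M):
    """The matrix c * M."""
    return [[c * x for x in row] for row in M]


lam = 0.5               # hypothetical probability in (0, 1)
alpha = -math.log(lam)  # lam**n == exp(-alpha * n), with alpha > 0
M = scaled_identity(lam, 3)

print(mat_mul(M, M) == scale(lam, M))  # M*M = lam*M
for n in range(1, 6):
    print(n, mat_pow(M, n) == scale(lam ** (n - 1), M),
          math.isclose(lam ** n, math.exp(-alpha * n)))
```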

For example, a state can be a free particle (a solution of Schrödinger's equation) that oscillates on and on but remains essentially unchanged. The state (M) can also be the distribution of a random variable, and then this conclusion agrees with "Theorem 1, part ii" in the subsection "41. Limitations" of the script "Information Theory I", which establishes that for a given expectation, the maximum information is achieved by the exponential distribution. These are states or processes in which there is no "room" for memory, that is, they are in such a constant flow that future outcomes do not depend on previous ones.
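The cited maximum-information property can be illustrated, though not proved, by comparing closed-form differential entropies (in nats; reading "information" this way is an assumption) of a few distributions on [0, ∞) with the same mean μ. The candidates (uniform on [0, 2μ], half-normal, gamma with shape 2) are my own choice; the exponential distribution, with entropy 1 + ln μ, comes out on top in each case.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant; digamma(2) = 1 - gamma


def h_exponential(mu):
    """Exponential distribution with mean mu."""
    return 1 + math.log(mu)


def h_uniform(mu):
    """Uniform distribution on [0, 2*mu] (mean mu)."""
    return math.log(2 * mu)


def h_half_normal(mu):
    """Half-normal with mean mu, i.e. scale sigma = mu * sqrt(pi / 2)."""
    sigma = mu * math.sqrt(math.pi / 2)
    return 0.5 * math.log(math.pi * math.e * sigma ** 2 / 2)


def h_gamma2(mu):
    """Gamma with shape k = 2 and scale mu / 2 (mean mu):
    k + ln(theta) + ln Gamma(k) + (1 - k) * digamma(k)."""
    return 2 + math.log(mu / 2) + math.lgamma(2) - (1 - EULER_GAMMA)


for mu in (0.5, 1.0, 3.0):
    print(mu, round(h_exponential(mu), 4), round(h_uniform(mu), 4),
          round(h_half_normal(mu), 4), round(h_gamma2(mu), 4))
```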

Present

Question: Where does the information of the present go?

Answer: According to the previous answers, it spontaneously goes into memory whenever it can. The past becomes increasingly "thick" at the expense of the increasingly "thin" present, so that the total information of "everything" remains constant. This could easily be added to modern cosmology.

The present is "thinned out" also by the growth of space itself (the universe is expanding), not only by the lengthening of its memory. Information theory offers a (new) explanation for this otherwise well-known observation, through the "melting" of substance into space. This requires reducing the idea of "entropy" to the substance itself, with the addition that an increase in entropy accompanies a decrease in the information of the given system.

To all this we can add the "lateral expansion" of space-time into additional dimensions, based for example on the previously observed "leakage" of gravity somewhere (it is not known where), but also on explanations of mass in the previous, informational way. This (new) way includes an interpretation of "dark matter" as an important part (multiplicity should not be underestimated), because it could mean that at least gravity has some interaction with "parallel realities", which, I suppose, are important in time dimensions of the same type as the past.

As for more complex forms of possessing information, as in "living beings", the principled thriftiness of information (minimalism) will not bypass them either. In their youth they are hungry for information (novelties, differences, temptations), and during their lives they go through a phase of maturity in which, like a weight lifted so that it can descend and perform useful work, they lose information by living more meaningfully and fitting in, finally leaving the rest of the surplus to the "inanimate" as they grow old and die.

I remind you once again that smarter speech, organization, or efficiency are states of lower information that we could not achieve had we not previously had information in excess. This also includes lies, and fantasies in general, as forms of "diluted truth", which are therefore attractive, even irresistible.

Regimen

Question: By what mechanism do we manage natural phenomena?

Answer: I understand the question, and I will build on it in the answer. We (living beings in general) have this "vitality", an excess of freedom, i.e. of information, which we can dispose of and use to defy the natural principle of least action, minimalism. Inanimate matter then bows, or retreats before "our" force.

Those "unnatural" choices, which in their deep essence are "forces of uncertainty", are additional tools that give us the small advantage of becoming "rulers" of nature.

Let's consider this "mechanism" on the example of "perception information". From S = a₁b₁ + ... + aₙbₙ, where the subject reacts with strength aₖ to the action bₖ, for indices k = 1, ..., n respectively, it follows that the maximum is reached by pairing larger with larger and smaller with smaller factors, and the minimum in the opposite extreme, when a smaller factor is multiplied by a larger one (and a larger by a smaller). When the subject (sequence aₖ) sticks to its values, nature (sequence bₖ) will yield, because it unconditionally adheres to the principle of least action, well known in theoretical physics.

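More specifically, let (3, 2, 1, -1, -2) be the sequence aₖ and let the sequence bₖ be equal or proportional to it; for bₖ equal to aₖ, the perception information has the maximum and minimum:

Smax = 3⋅3 + 2⋅2 + 1⋅1 + (-1)⋅(-1) + (-2)⋅(-2) = 19,
Smin = 3⋅(-2) + 2⋅(-1) + 1⋅1 + (-1)⋅2 + (-2)⋅3 = -15.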

By not changing the coefficients (intensities) of the subject and the object, but only their order (redistributing the powers), the perception information can be changed from minimum to maximum, Smin ≤ S ≤ Smax.
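The example can be verified by brute force over all orderings of bₖ, assuming bₖ equal to aₖ as in the text; the function name is hypothetical. Sorting both sequences the same way gives Smax, sorting them in opposite ways gives Smin, as the rearrangement inequality states.

```python
from itertools import permutations


def perception_information(a, b):
    """S = a_1 b_1 + ... + a_n b_n."""
    return sum(x * y for x, y in zip(a, b))


a = (3, 2, 1, -1, -2)
b = a  # assumption: b_k equal to a_k

values = [perception_information(a, p) for p in permutations(b)]
s_min, s_max = min(values), max(values)
print(s_min, s_max)  # -15 19

# Same order gives the maximum, opposite order the minimum.
print(perception_information(sorted(a), sorted(b)) == s_max)
print(perception_information(sorted(a), sorted(b, reverse=True)) == s_min)
```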

When the subject defiantly holds to the order of Smax, because he can and wants to, having "vitality" and an excess of "amount of uncertainty", inanimate matter will move towards the order that gives the value Smin, because it follows trajectories of least action (Information Stories, 1.5 Life). Theoretical physics today derives all such trajectories from the Euler-Lagrange equations, which are based on that principle.

Similarly, we conclude that the more vital player wins the game with the "smart fightback" strategy (Win Lose), if he wants to. Using it, he is persistent, timely, measured and sufficiently unpredictable in his reactions to his opponents' actions, which is otherwise the strongest strategy known today in "games" of winning that resemble those in economics, politics, or war.

Illusion

Question: So, what we decipher as the "past" of the universe is the more unreliable, and the more fantasy, the "older" it is?

Answer: That was somehow intuitively recognizable. But information theory also adds something new. Take for example the previously known theorem in the subsection "53. Figure Dispersion" of the script "Information Theory II".

The theorem concerns the state of the sent message, i.e. its probability distribution, during repeated transmission through the same channel, p ➟ p' ➟ p'', and it says that the longer the channel, that is, the longer the transmission lasts, the greater the output information!
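A minimal sketch of this growth, assuming a symmetric (doubly stochastic) channel on three symbols, for which the Shannon entropy of the distribution can never decrease from one transmission to the next. The channel and the input distribution are hypothetical, and the exact conditions of the theorem in the script may differ; for general channels the output entropy does not have to grow.

```python
import math


def entropy(p):
    """Shannon entropy in bits."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def step(p, K):
    """One transmission: p'_j = sum_i p_i * K[i][j]."""
    n = len(K)
    return [sum(p[i] * K[i][j] for i in range(n)) for j in range(n)]


# Hypothetical symmetric channel: each symbol arrives correctly with
# probability 0.8, otherwise as one of the other two symbols.
K = [[0.8, 0.1, 0.1],
     [0.1, 0.8, 0.1],
     [0.1, 0.1, 0.8]]

p = [0.7, 0.2, 0.1]  # p, then p', p'', ...
history = []
for _ in range(6):
    history.append(entropy(p))
    p = step(p, K)
print([round(h, 4) for h in history])  # non-decreasing, bounded by log2(3)
```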

We usually think that interference in the transmission channel (telegraph, telephone wire, fiber-optic cable, wireless) destroys the message by robbing it of content and information. However, this theorem states the opposite: the content "disappears" by oversaturating the message with noise.

We know that sometimes we need to turn off the light to see better, or wait for night for the stars to appear. Likewise, when a digital display shows the number "eight", all three horizontal and all four vertical segments are lit, hiding the patterns of all ten digits, from zero to nine. Noise in the information channel acts the same way: it overloads the channel, misinforming the output message with a surplus rather than a shortage of content.
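The "eight" example can be made concrete with the usual seven-segment labelling (a, d, g horizontal; b, c, e, f vertical). The segment sets below are the common patterns, though some displays draw 6, 7 and 9 slightly differently. A lit pattern cannot rule out any digit whose segments are all lit, so "8" is compatible with all ten digits.

```python
# Common seven-segment patterns: a (top), b (upper right), c (lower right),
# d (bottom), e (lower left), f (upper left), g (middle).
SEGMENTS = {
    0: set("abcdef"), 1: set("bc"), 2: set("abdeg"), 3: set("abcdg"),
    4: set("bcfg"), 5: set("acdfg"), 6: set("acdefg"), 7: set("abc"),
    8: set("abcdefg"), 9: set("abcdfg"),
}


def digits_hidden_in(lit):
    """Digits whose segments are all lit, i.e. that the pattern cannot rule out."""
    return [d for d, segs in SEGMENTS.items() if segs <= lit]


print(digits_hidden_in(SEGMENTS[8]))  # all ten digits: no information left
print(digits_hidden_in(SEGMENTS[1]))  # only the digit 1
```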

In the script "Information Theory I", in the section "08. Channel Adaptation", you will find the reasons why the range of probability distributions narrows in transfer processes, which means that they are averaged around the uniform distribution. This distribution, by the way, has the maximum information, and in addition there is also an "inherent" (eigen) distribution of the channel. Every input tends towards it, which is why long channels become "black boxes" (we cannot know the input based on the output).

Consistent with this, what we see as the higher temperature of the older cosmos, the cooling universe, the increasing entropy, that is, the decreasing information of the ongoing present, we can formally interpret as something that reaches us together with transmission interference (channel noise). Thus it becomes a dubious thesis that needs to be re-examined, and in the extreme case it is a wall, a barrier that cannot be crossed, just like "objective chance", for example when we want to predict which number will come up when a die is thrown.

This is a specific feature of "information theory" (mine): illusion is also a type of reality, and its falsity can converge towards truth. Namely, if errors in the transmission of information about some ancient state of the universe could be exposed, seen through, then that state would not be sufficiently hidden by a black box. This is analogous to discovering a formula, an algorithm by which we could calculate, that is predict, the number that will come up after throwing a die.

Unlike untruth, which will sometimes lead us into contradiction, truth will never betray us like that. This is what we know about lies; to this, and to the recent discovery of their attractiveness and their ability to build a "universe" equivalent to the true one (The Truth), let us now add that in the limit a lie can sometimes become the (real) truth.
