February 2022 (Original ≽)

Entropy »

Question: What is entropy in information theory?

Answer: First of all, we should note that the entropy of classical information theory is (still) valid in physics, although some accepted papers treat it in the opposite way to mine (Information).

Take Landauer (1961), for example. He pointed out that erasing information is a dissipative process, because deleting a record held in the position of a molecule in a thermodynamic system changes the entropy of that system. If this happens at a given temperature, the product of the temperature and the change in entropy is work, so Landauer concluded that someone has to pay an energy bill to change information. (Quoted from my article "Quantum calculus".)
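For scale, Landauer's bound in its standard form is kB T ln 2 of heat per erased bit. The sketch below only evaluates that textbook expression; the function name `landauer_limit` and the 300 K temperature are illustrative assumptions, not anything from the original article.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact in the 2019 SI)

def landauer_limit(temperature_k: float) -> float:
    """Minimum heat (J) dissipated per erased bit in Landauer's standard bound, kB * T * ln 2."""
    return K_B * temperature_k * math.log(2)

# 300 K is an assumed room temperature, chosen only for illustration
print(f"{landauer_limit(300.0):.3e} J per bit")
```

At room temperature this is roughly 2.87 × 10⁻²¹ J per bit, which shows how small the "energy bill" is for a single record.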

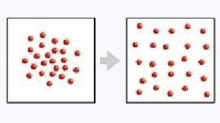

In this example, let us simply reverse Landauer's position and state that someone has to pay an energy bill to produce information; this is the new information theory I am talking about. In the otherwise valid image (top right) of the spontaneous growth of entropy, the molecules spontaneously spread into a uniform distribution in the vessel. That such an arrangement is more probable than crowding into one box is proved in "Information of Perception" (3.4 Entropy), and Boltzmann's entropy is also about molecules pushing one another because of their vibration and incompressibility.

So, assuming that the molecules are already in a more probable state and that there are W such microstates, the information of the state is S = kB log W. This is the entropy, with the Boltzmann constant kB ≈ 1.38 × 10⁻²³ J⋅K⁻¹ (joules per kelvin) and the natural logarithm. Consistent with this, the gas expands spontaneously, pushing and occupying more probable states, the latter simply because more probable outcomes are realized more often.
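As a small numerical sketch of S = kB ln W, here W is taken, purely as an assumed example, to be 9! = 362,880, the number of ways to put nine distinguishable balls one per box (the example used in the Maximization answer). The helper name `boltzmann_entropy` is illustrative.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def boltzmann_entropy(num_microstates: int) -> float:
    """S = kB ln W, where W is the number of microstates of the macrostate."""
    return K_B * math.log(num_microstates)

w = math.factorial(9)  # assumed example: 9 distinguishable balls, one per box
print(boltzmann_entropy(w))         # entropy in J/K
print(boltzmann_entropy(w) / K_B)   # ln W, about 12.80
```

A single microstate (W = 1) gives S = 0, and each doubling of W adds kB ln 2.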

Since more probable outcomes are less informative (it is bigger news that a man has bitten a dog than that a dog has bitten a man), it would be consistent to say that information decreases as entropy increases. We are talking about an increase in the number of microstate arrangements in a given macrostate, about which classical physics, for now, claims the opposite.
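The link between probability and information can be sketched with the Hartley-style self-information of an outcome, −ln p (in nats, matching the natural logarithm in Boltzmann's formula). The two probabilities below are assumptions chosen only to stand for "dog bites man" and "man bites dog".

```python
import math

def hartley_information(p: float) -> float:
    """Information (in nats) of an outcome with probability p: -ln p."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return -math.log(p)

likely, unlikely = 0.9, 0.1  # illustrative probabilities, not measured values
print(hartley_information(likely), hartley_information(unlikely))
```

The more probable outcome carries less information, and a certain outcome (p = 1) carries none.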

I hope we have partly seen how Boltzmann's statistical interpretation of entropy agrees with my informational one, and the descriptions at the molecular level are not far from this. Warmer gas molecules oscillate more vigorously; their vibrations are more complex and less predictable, and they carry more information. Their intensity, by the way, can overcome the probability of arrangement. As the gas cools, the entropy increases, the oscillations of the molecules calm down and, let us now add, their information decreases. This addition, the final conclusion, does not agree with the classical view.

Unlike classical physics, which would say that higher entropy goes with more information, my information theory holds that as the entropy (of a given system) increases, its information decreases.

In my information theory, the Gibbs paradox is no longer the paradox it used to be. To recall, Gibbs (1875) imagined a vessel of gas that can be divided in the middle. When the partition is removed and reinserted, the entropy calculations do not give correct results, except in the case of an indistinguishable gas, compressible like a bosonic one, which was not known in his time. I simplified this further in a recent answer (Anomaly).
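The textbook form of the paradox can be reproduced with a simple counting model, assumed here only for illustration: each particle occupies one of `cells` equal position cells, so a distinguishable gas has W = cellsᴺ, while treating the particles as indistinguishable divides W by N! (the Gibbs correction). Removing the partition between two identical halves then gives ΔS = 2N kB ln 2 in the first case and practically nothing in the second. The function names and the numbers N = 10⁶ and 10⁹ cells are hypothetical.

```python
import math

def ln_count(n_particles: int, cells: float, indistinguishable: bool) -> float:
    """ln W for n particles over `cells` position cells (hypothetical discretized model).

    Distinguishable: W = cells**n. Indistinguishable (Gibbs correction): W = cells**n / n!.
    """
    ln_w = n_particles * math.log(cells)
    if indistinguishable:
        ln_w -= math.lgamma(n_particles + 1)  # subtract ln n!
    return ln_w

def mixing_entropy(n: int, cells: float, indistinguishable: bool) -> float:
    """Delta S / kB when the partition between two identical halves (n particles each) is removed."""
    before = 2 * ln_count(n, cells, indistinguishable)
    after = ln_count(2 * n, 2 * cells, indistinguishable)
    return after - before

n, v = 10**6, 1e9  # assumed particle number and cells per half
print(mixing_entropy(n, v, False) / n)  # 2 ln 2 per particle: the "paradox"
print(mixing_entropy(n, v, True) / n)   # practically zero
```

With distinguishable particles the entropy grows on merely removing the partition, which is what Gibbs found troubling; dividing by N! removes that growth up to a term of order ln N.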

Recognizing the value of the Gibbs paradox, we find that there is no spontaneous increase (or non-spontaneous decrease) of entropy in, say, a boson gas, i.e. that the well-known law of "spontaneous growth of entropy" applies only to fermions. The principle of probability (more probable outcomes occur more often), otherwise my neologism, like the corresponding "principle of least communication" (situations develop spontaneously towards less information), or the long-known principle of least action, the law of inertia and other equivalents, differs from entropy.

The entropy of a substance grows spontaneously, but not the entropy of space (force fields). The substance of the universe spontaneously "melts": its information "leaks" into space, which therefore grows ever larger. Consistent with this, the decay of fermions into bosons is statistically at least slightly more likely than the reverse, and the size of this "slightly" defines the rate at which space grows, hence the speed of light, the intensity with which the past passes into the present, and much more.

Let us conclude by stating that my information theory will not have as many objections to Clausius's treatment of entropy (1850) in his calculations of Carnot cycle processes. I hope we agree on that.

Disorder »

Question: Is entropy a measure of order or disorder?

Answer: Following the previous answer (Entropy), this is a real dilemma for the (new) information theory, noting that such questions are now wrongly posed.

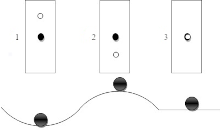

Spontaneous flows of entropy, like other calming processes, tend towards equilibrium. But in the "universe of uncertainty", calm is not found where there is more disorder, if by disorder we mean situations with an excess of options and unpredictability, but, on the contrary, where there is less information, with more order and certainty, or rules.

Of the three kinds of equilibrium we learned about in elementary school, shown in the picture above left (1. stable, 2. labile, 3. indifferent), the optimal one would be stable and the worst labile. The impossibility of reaching the optimum in a given situation confuses us, because we believe that "inaction" is absolute stillness. However, no such thing really exists, nor can it exist in the "universe of information", and the unstable state tends towards the stable one.

Consistent with "information theory", it would be correct to say that entropy grows spontaneously towards order! Striving to even out their arrangement inside the vessel, the gas particles behave like soldiers on parade who, uniformed and neatly lined up in rows and columns, "radiate" less individuality. Their personal information is muted, and their entropy is higher because their communication is lower.

The classical interpretation fails to get past the finding that, in a given macrostate, a larger number of microstates W gives a higher probability, from which, together with pushing, follows the Boltzmann entropy S = kB log W, along with the equally accurate statement that the probability of any single microstate of a more probable macrostate becomes lower. This is the deeper reason for the misuse of the word "disorder" to describe the development of entropy, illustrated by examples such as a glass that falls and breaks, leaving a cleaning job behind.

The broken glass scattered on the floor has less total information than the whole glass, just as isolated individuals (ants) have less aggregate vitality than those in a collective (Emergence). That is the objection from information theory.

Worship »

Question: Does that mean that worship of disorder is not what psychology today thinks it is?

Answer: Yes and no. Contemporary psychology does not know (my) information theory and is unable to include the spontaneous flows that reduce the "amount of options" in its considerations. It recognizes neither the law of conservation of that amount nor life as its surplus.

However, these significant additions (the stinginess of information together with its law of conservation, and the possession of its surplus by living beings) will be the points at which some views of modern psychology change. Worship of death, say, if it implies "disorder" as in official psychology, will not agree with the above clarification of entropy.

Nor is disorder a sure sign of decay and death, as we see in small children who prefer objects and toys scattered around the room to order, and we agree that they do not represent dying. A tidy desk is more often a sign of routine than of creativity, of responsibility rather than of intelligence, which would cope more easily with an "excess of options" (unpredictability). A decline in the capacity for action (communication) will show as less interest in extra complications and more interest in established behavior.

Death worship can be subsumed under the attraction of "dark tourism", travel to places associated with death, disaster and suffering (Death as attraction). In information theory, tourism to places that draw people because of their connection with death, and which could leave psychological scars, would be a consequence of the principle of minimum information. It stems from nature's spontaneous, mild but persistent tendency to develop towards less communicative states, say because the less informative ones are more common.

Imbalance »

Question: When does entropy decrease?

Answer: When information grows, for example through energy or additional work that disturbs the thermal equilibrium of a substance.

Imagine a long pool, a bathtub, or perhaps a smaller vessel full of water at a uniform temperature, and start heating one end while cooling the other. The entropy of the liquid will begin to fall and, once heating and cooling stop, heat will spread back into the colder part until the temperature evens out, with the entropy increasing to its optimal value.
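In classical thermodynamics this is easy to check numerically. Assume, for illustration, that the two halves of the water have equal, constant heat capacity C, so each contributes C ln T (up to a constant) to the entropy, and that the total energy is fixed, i.e. T1 + T2 stays constant. The sketch below (hypothetical function name, assumed temperatures) shows the total entropy growing as the gradient disappears.

```python
import math

def total_entropy(t1: float, t2: float, heat_capacity: float = 1.0) -> float:
    """Entropy, up to a constant, of two equal halves with constant heat capacity: C ln T1 + C ln T2."""
    return heat_capacity * (math.log(t1) + math.log(t2))

# Same total energy (T1 + T2 = 600 K) with shrinking temperature differences
for dt in (100.0, 50.0, 10.0, 0.0):
    print(dt, total_entropy(300.0 + dt, 300.0 - dt))
```

Since ln T is concave, the sum is largest when both halves share the same temperature, which is where the equalization stops.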

Entropy is a property of substance, or at least its law of spontaneous growth is (Entropy). The property comes from the Pauli exclusion principle for fermions, which says that no two identical fermions can occupy the same quantum state simultaneously. All particles in physics are also waves, oscillations or vibrations, to which we can add Boltzmann's statistical definitions of heat and temperature, and the descriptions from which this property of entropy is derived.

However, when there is no point in particles moving and pushing to take a more even and comfortable position in the crowd, as is the case with bosons (to which the Pauli principle does not apply), several of which can share the same state, there is no law of spontaneous entropy growth. What remains is the principle of minimalism of information, which, once discovered, can be recognized in various forms: for example, as the most frequent occurrence of the most probable outcome, as the law of inertia, or as the easier spread of half-truths than of truths through the news media.
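The counting difference behind the Pauli principle can be made concrete with the standard occupation formulas for n identical particles in g single-particle states: fermions can be arranged in C(g, n) ways (at most one per state), bosons in C(g + n − 1, n) ways (any number per state). The function names below are illustrative.

```python
from math import comb

def fermion_arrangements(particles: int, states: int) -> int:
    """Identical fermions, at most one per state (Pauli): choose which states are filled."""
    return comb(states, particles)  # comb returns 0 when particles > states

def boson_arrangements(particles: int, states: int) -> int:
    """Identical bosons, any number per state ("stars and bars")."""
    return comb(states + particles - 1, particles)

for n in (1, 2, 3, 4):
    print(n, fermion_arrangements(n, 3), boson_arrangements(n, 3))
```

With three states, four fermions have no admissible arrangement at all, while four bosons still have fifteen, many of them crowded into a single state.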

Entropy is one of the peculiarities of the "information world", a phenomenon of substance that could behave quite "absurdly" outside its domain if we tried to extend it to where it does not belong. I have already given such examples: besides the description of entropy above, there is the melting of matter into space, or, earlier, the flow towards the efficiency of free networks (Democracy). If entropy could be generalized without limit, these would be "proofs" of a violation of the conservation law, or examples of a spontaneous decrease of entropy (the development of inequality).

Since nature does not like equality, it merely tolerates the spontaneous expansion of entropy, in which entropy appears as the stronger force. But there are situations where the "force" of the principle of minimalism of information still defeats entropy. I have mentioned one that can be recognized once we accept the objectivity of uncertainty (Dimensions). It arises around bodies of large mass, in strong gravitational fields, whose substance is partly in other dimensions of time, invisible to the relative observer.

Entropy is an extensive quantity, which means that it grows with the size of the body, but in the presence of a strong source of gravity it will be smaller than outside the field. I emphasize once again: the Sun does not attract the Earth through stronger entropy; on the contrary, it keeps the planet in orbit through the lower entropy of its surroundings.

Relativity »

Question: What do you think about relativistic entropy?

Answer: This has been an old and still speculative topic (Information & Inertia) ever since the theory of relativity appeared. We can now approach it using the main argument of the answer above (Imbalance).

By adding energy to a body with which the coordinate system K' is associated, we do the initial work and the system starts moving. Thus the body passes from rest in our system K to uniform rectilinear motion at some speed v. According to the above, the relative entropy of the body (the one we now observe) will be less than its proper entropy (in its rest state).

The initial assumption, that work done on the body disturbs its "equilibrium", its homogeneity or isotropy, and reduces its entropy, should give new support to the topic of "relativistic entropy". Arriving again at the conclusion that the relative entropy is less than the proper one, we open the question of how much smaller it could be, and what else we can draw from the same observation, looking at system K from the moving system.

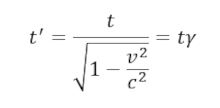

The isotropy (sameness in all directions) of the moving system is disturbed by the relative contraction of units of length (ℓ' = ℓ/γ), which occurs only along the direction of motion, where γ = 1/√(1 − v²/c²) is the Lorentz factor. The relative energy (E' = Eγ) and time dilation (t' = tγ) scale in the same proportion, inversely to the shortening of lengths. Quanta of energy remain unchanged (Eτ = h), because the larger the relative energy, the slower the flow of time (τ' = τ/γ).
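A quick numerical check of these scalings: the sketch takes the relations ℓ' = ℓ/γ, E' = Eγ and τ' = τ/γ exactly as stated in the text and confirms that the product Eτ is left unchanged. The function names and the values E = 2, τ = 0.5 (whose product stands in for h) are illustrative assumptions.

```python
import math

def lorentz_factor(beta: float) -> float:
    """gamma = 1 / sqrt(1 - v**2 / c**2), with beta = v / c."""
    return 1.0 / math.sqrt(1.0 - beta**2)

def moving_frame(length: float, energy: float, period: float, beta: float):
    """Apply the scalings quoted in the text: l' = l/gamma, E' = E*gamma, tau' = tau/gamma."""
    g = lorentz_factor(beta)
    return length / g, energy * g, period / g

energy, period = 2.0, 0.5  # illustrative values whose product stands in for h
length2, energy2, period2 = moving_frame(1.0, energy, period, beta=0.6)
print(lorentz_factor(0.6), length2, energy2 * period2)  # about 1.25, 0.8 and 1.0
```

At v = 0.6c, γ = 1.25, so lengths along the motion shrink to 0.8 while the product of energy and period stays put.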

The information consumed by the body is not visible in such a relativistic account, but we now know (Dimensions) that this body has partly passed into a parallel reality and is therefore invisible to the relative observer. Owing to the relativity of motion, each observer, in K and in K', sees the other with less entropy. That is why bodies do not pass from their own states of rest into relative motion "without the action of some other bodies or forces": entropy increases spontaneously.

Question: Newton's law of inertia and Boltzmann's statistics seem to fit into such an interpretation, but what about heat and temperature?

Answer: This is a somewhat more complex problem, and for now it has several equally promising solutions. I will describe only the one from the link above and from a similar article (The Nature of Time), where the details are. In short, I use Clausius' definition of entropy, S = Q/T, as the quotient of heat (Q) and temperature (T).

For the relative entropy to be lower (S' = S/γ) in the same proportion in which units of length shorten in the direction of motion, relative time slows down and relative energy increases, we assume that the body's temperature increases in proportion to its energy, while its heat does not change.

We reduce the explanation of the temperature rise to the relativistic Doppler effect: the transverse increase in wavelength equals the mean (half-sum) of the wavelengths from the approaching and the receding source, and this increase (λ' = λγ) is proportional to the increase in energy; it is exactly the "redshift" that corresponds to the increase in temperature (T' = Tγ).
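The half-sum claim can be checked directly: with β = v/c, the approaching and receding relativistic Doppler factors are √((1 − β)/(1 + β)) and √((1 + β)/(1 − β)), and their mean simplifies to 1/√(1 − β²) = γ. A short numerical sketch, with an illustrative function name:

```python
import math

def doppler_half_sum(wavelength: float, beta: float) -> float:
    """Mean of the relativistic Doppler wavelengths for an approaching and a receding source."""
    approaching = wavelength * math.sqrt((1 - beta) / (1 + beta))
    receding = wavelength * math.sqrt((1 + beta) / (1 - beta))
    return (approaching + receding) / 2

beta = 0.6
gamma = 1 / math.sqrt(1 - beta**2)
print(doppler_half_sum(1.0, beta), gamma)  # both 1.25: the half-sum equals lambda * gamma
```

At β = 0.6 the two factors are 0.5 and 2, whose mean is 1.25 = γ.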

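As we know, the relativistic increase in energy comes as the kinetic energy of the moving body:

$$E_k = E' - E = (\gamma - 1)\,mc^2 \approx \tfrac{1}{2}mv^2 \quad (v \ll c),$$

where E = mc² is the rest energy.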
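The standard relativistic kinetic energy, (γ − 1)mc², reduces to the Newtonian ½mv² at low speed. The sketch below compares the two; the function name, the 1 kg mass and the chosen speeds are illustrative, and γ − 1 is computed in an algebraically equivalent form to avoid floating-point cancellation when v is small.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def kinetic_energy(mass_kg: float, speed: float) -> float:
    """Relativistic kinetic energy (gamma - 1) m c**2."""
    beta2 = (speed / C) ** 2
    s = math.sqrt(1.0 - beta2)
    gamma_minus_one = beta2 / (s * (1.0 + s))  # equal to 1/s - 1, without cancellation
    return gamma_minus_one * mass_kg * C**2

m = 1.0  # kg, illustrative mass
for v in (3.0e3, 3.0e7, 2.7e8):  # m/s
    print(v, kinetic_energy(m, v) / (0.5 * m * v**2))  # ratio to the Newtonian 1/2 m v**2
```

The ratio is practically 1 at everyday speeds and grows well above 1 as v approaches c.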

Question: It seems pretty tuned, and how realistic is it all?

Answer: Who knows. For now, it seems to be a task with several solutions. In this one, when a moving body hits an obstacle and stops, its entropy increases (the glass breaks), the higher temperature it had in motion drops back to the lower one at rest, and part of the kinetic energy turns into heat and perhaps other forms (electrical, chemical). There is nothing illogical in that.

Also, from the second law of thermodynamics: when heat passes from a body of higher temperature to a neighboring body of lower temperature, entropy increases, and we see that the temperature (denominator) decreases faster than the heat (numerator); otherwise, their changes need not be proportional.
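The standard Clausius calculation behind this: a small amount of heat Q leaving a body at T_hot lowers its entropy by Q/T_hot, while the body at T_cold gains Q/T_cold, which is larger. The sketch assumes both temperatures stay effectively constant during the transfer (large bodies); the names and numbers are illustrative.

```python
def entropy_change(heat: float, t_hot: float, t_cold: float) -> float:
    """Clausius: Delta S = Q/T_cold - Q/T_hot when heat Q flows from hot to cold."""
    return heat / t_cold - heat / t_hot

print(entropy_change(100.0, 400.0, 300.0))  # 100 J from 400 K to 300 K: about +0.083 J/K
```

The result is positive whenever T_hot > T_cold, which is the second law in Clausius' form.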

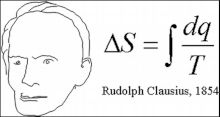

Clausius »

Question: How did entropy come about?

Answer: In the early 1850s, Rudolf Clausius developed a new concept of the thermodynamic system through the loss of small amounts of thermal energy δQ. As he developed his idea of energy losses in Carnot-cycle engines, he kept coming across one expression, a fraction in the formulas, which he called entropy.

The efficiency of an ideal heat engine depends on its range of operating temperatures: it is the difference between the highest (TH) and the lowest (TC) temperature relative to the highest, η = (TH − TC)/TH. Clearly this coefficient is less than one, and it is larger the wider the machine's temperature range.

The ideal useful work is proportional to the efficiency coefficient and, of course, to the heat QH received at the highest temperature: W = η ⋅ QH. The heats QH and QC are exchanged cyclically, from hot to cold, and the real useful work of the machine is W' = QH + QC. Here the first term is taken as positive (heat delivered to the engine) and the second as negative (heat taken from it).

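The ideal useful work is greater than or equal to the real work, so we have:

$$\left(1 - \frac{T_C}{T_H}\right) Q_H \ge Q_H + Q_C \quad\Longrightarrow\quad \frac{Q_H}{T_H} + \frac{Q_C}{T_C} \le 0.$$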
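The comparison of ideal and real work amounts to the Clausius inequality QH/TH + QC/TC ≤ 0, with equality for a reversible (Carnot) cycle. A minimal sketch with assumed values (500 K, 300 K, QH = 1000 J) and illustrative function names, checking one reversible and one lossy engine:

```python
def carnot_efficiency(t_hot: float, t_cold: float) -> float:
    """eta = (T_H - T_C) / T_H."""
    return (t_hot - t_cold) / t_hot

def clausius_sum(q_hot: float, q_cold: float, t_hot: float, t_cold: float) -> float:
    """Q_H/T_H + Q_C/T_C, with Q_H > 0 (heat received) and Q_C < 0 (heat given off)."""
    return q_hot / t_hot + q_cold / t_cold

t_h, t_c, q_h = 500.0, 300.0, 1000.0   # illustrative values: K, K, J
eta = carnot_efficiency(t_h, t_c)      # 0.4
ideal_work = eta * q_h                 # W = eta * Q_H
for q_c in (-600.0, -700.0):           # reversible engine, then a lossy one
    real_work = q_h + q_c              # W' = Q_H + Q_C
    print(real_work <= ideal_work, clausius_sum(q_h, q_c, t_h, t_c))
```

The reversible case gives a Clausius sum of zero; the lossy engine does less work and gives a negative sum.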

Clausius called this quotient entropy (Greek: έντροπή, "inward turn", "shame"), probably because it recalls a measure of the system's internal energy that is "shyly" stored and cannot be turned into useful work. He was a mathematician and did not try to extend the meaning of his "entropy". That was done by the successors of the idea, mostly physicists, but over time also other natural scientists and even philosophers.

In information theory, I believe, there will be further revisions of the interpretation of the (correct) physical explanation of entropy, concerning information itself. In a given (thermodynamic) system, a state of higher entropy will mostly be attributed less information. A colder system will be considered deader, less vital, with fewer options and actions, and with less freedom than a warmer one.

I repeat, official physics still holds the opposite of (my) informational view: that a given system in a state of higher entropy has more information, not less.

Maximization »

Question: Do bosons have entropy?

Answer: Yes, since the laws of probability apply to bosons; but also no, regarding the implications, which are not the same as for fermions.

The picture shows a simulation of the decay of the Higgs boson into muons. The ATLAS experiment at CERN observed the Higgs boson in 2012, confirming both the theory of these particles and the probabilities that govern them. Besides the Higgs boson, the elementary bosons are the photon, the gluon, the W and Z bosons, and the hypothetical graviton.

The principle of maximum entropy says that the probability distribution that best represents the current state of knowledge about a system is the one with the highest entropy. Since more probable states are realized more often, the entropy in this principle is really an expression of the "probability principle", so the corresponding result also applies to bosons (A maximum entropy).
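In the simplest case, where the only constraint is that probabilities sum to one, the maximum-entropy distribution is the uniform one. The sketch compares the Shannon entropy (in nats) of three assumed four-outcome distributions; the names are illustrative.

```python
import math

def shannon_entropy(probs):
    """H = -sum p ln p in nats; outcomes with p = 0 contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)

candidates = {
    "uniform": [0.25, 0.25, 0.25, 0.25],
    "skewed": [0.4, 0.3, 0.2, 0.1],
    "certain": [1.0, 0.0, 0.0, 0.0],
}
for name, dist in candidates.items():
    print(name, round(shannon_entropy(dist), 4))
```

The uniform distribution reaches ln 4, and any certainty about the outcome lowers the entropy, down to zero for a sure outcome.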

Imagine we have nine equal boxes into which we put nine balls in different ways. If the balls are statistically different (distinguishable), there are the fewest ways to have all the balls in one box and many more to have one ball in each box: 9 and 9! = 362,880, respectively. When each arrangement is equally probable, since the second number is 40,320 times larger than the first, the chance of an arrangement of the second kind is that many times greater.
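The two counts and their ratio, as a quick check (variable names are illustrative):

```python
from math import factorial

n = 9
all_in_one_box = n          # choose the single box that holds every ball
one_per_box = factorial(n)  # distinguishable balls: n! orderings
print(all_in_one_box, one_per_box, one_per_box // all_in_one_box)  # 9 362880 40320
```

The ratio is 9!/9 = 8! = 40,320.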

That is why gas in a room spreads out: among other things, the molecules take up more probable distributions, following the principle of probability. The domain of the "more likely", together with the "pushing of the incompressible", is the principle of (greater) entropy. For indistinguishable particles (as opposed to positions), by analogy with the example of balls and boxes, the number of arrangements of the first kind does not change, but those of the second kind are no longer "nine factorial" but only one, and the maximum probability lies somewhere between the two.

Note that in such a calculation the (in)distinguishability of particles matters more than whether their spin is integer (which is what defines fermions and bosons). For example, atoms, or even a coin, can have an integer total spin and in that sense be bosons, yet still be distinguishable. When tossing two coins, it matters whether the first came up tails and the second heads, or vice versa. This "subtle" difference leads to a drastic difference in entropy flows.
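The coin example can be counted explicitly. For distinguishable coins, the four ordered outcomes HH, HT, TH, TT are equally likely, so "one head, one tail" has probability 1/2. If the coins were hypothetically indistinguishable and each unordered outcome {HH, HT, TT} were taken as equally likely (Bose-Einstein-style counting, an assumption for illustration), the same event would have probability 1/3.

```python
from fractions import Fraction
from itertools import product

# Distinguishable coins: ordered outcomes HH, HT, TH, TT, equally likely
ordered = list(product("HT", repeat=2))
p_mixed_distinct = Fraction(sum(1 for a, b in ordered if a != b), len(ordered))

# Hypothetical indistinguishable coins: only the multisets {HH, HT, TT},
# each assumed equally likely (Bose-Einstein-style counting)
unordered = {tuple(sorted(o)) for o in ordered}
p_mixed_identical = Fraction(sum(1 for a, b in unordered if a != b), len(unordered))

print(p_mixed_distinct, p_mixed_identical)  # 1/2 versus 1/3
```

Only the way outcomes are counted changes, yet the probabilities, and with them the entropy, differ.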

A Bose-Einstein condensate is a group of atoms cooled almost to absolute zero. At such a temperature the atoms barely move relative to one another; they have almost no free energy for it. They begin to crowd together and enter the same energy state, becoming physically as if identical, so that the whole group behaves like a single atom. The Pauli exclusion principle does not apply to bosonic particles, and so not to this group either. These particles are in exactly the same quantum state and, as a system, exhibit macroscopic effects.

Moreover, using the unique properties of the condensate, Lene Hau showed that light can be slowed dramatically, to 17 m/s, or even stopped, which implies an extremely high refractive index.
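For scale, slowed light is usually described by the group index n_g = c / v_g. Taking v_g = 17 m/s as the slowed speed (an assumption that the quoted figure is the group velocity), the index is of order 10⁷:

```python
C = 299_792_458.0  # speed of light in vacuum, m/s
v_slow = 17.0      # reported slowed-light speed in the condensate, m/s (taken as group velocity)
group_index = C / v_slow
print(f"{group_index:.3e}")  # about 1.76e7
```

Ordinary glass, by comparison, has an index of about 1.5.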

Since the explanation of the "Principle of maximum entropy" says that it can be justified by Occam's razor, the "miracles" of bosons should consistently be treated as an application of the well-known theory of probability. The same holds for "entropy information", which can be considered part of general information theory. That is why I am not in the habit of calculating the properties of a boson gas using the "theory of entropy", but rather start from the (general) theory of probability, though I understand that this is also a matter of convention.

It is possible to talk about "boson entropy" in a non-thermodynamic way, and also about the currents of spontaneous development of systems of indistinguishable particles. Both are consequences of probability theory, but their optima differ so much that we need not consider them part of (the same) theory of entropy.

Counting »

Question: Can you explain to me the arrangement of the balls in the boxes? Why do you doubt Boltzmann's entropy?

Answer: We consider the entropy of indistinguishable and then of distinguishable balls, counting with combinations and variations. When counting the former, order is irrelevant; with the latter, order matters.

We aim to distinguish the "entropy" of identical particles from the Boltzmann entropy of truly different ones (Maximization).

One ball and one box give the same result in both cases: there will be only one way of arranging.

Two balls and two boxes. Both balls in one box: two ways for both kinds. One ball in each box: one combination ($\binom{2}{2} = 1$) and two permutations ($2! = 1\cdot 2 = 2$).

Three balls and three boxes. All three in one box: $\binom{3}{1} = 3$ ways for both kinds. Two of the three boxes can again be chosen in three ways ($\binom{3}{2} = 3$), and each choice allows two combinations, 2-1 and 1-2, for a total of 6 = 3⋅2 combinations; with distinguishable balls A, B, C, each choice allows six variations, AB-C, AC-B, BC-A, A-BC, B-AC, C-AB, for a total of 18 = 3⋅6 variations. Finally, one ball in each box gives one combination and 3! = 1⋅2⋅3 = 6 permutations.

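Four balls and four boxes. The numbers of combinations for filling exactly one, two, three and all four boxes are 4, 18, 12 and 1. The numbers of variations, in the same order, are 4, 84, 144 and 24 (for three boxes: $\binom{4}{3} = 4$ choices of boxes times 36 ways to spread the balls over them). Check: the totals are $\binom{7}{4} = 35$ combinations and $4^4 = 256$ variations.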
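These small cases can be verified by brute force: enumerate every assignment of n distinguishable balls to n boxes, count by the number of occupied boxes (variations), and separately collect the distinct occupancy vectors (combinations, where only how many balls sit in each box matters). The function name is illustrative. For n = 4 the three-box variation count comes out as 144 = 4 ⋅ 36, because choosing which three boxes are used multiplies the 36 ways of spreading the balls over them; the totals 35 and 4⁴ = 256 confirm the lists.

```python
from collections import Counter
from itertools import product

def occupancy_counts(n: int):
    """For n balls and n boxes, count arrangements by the number of occupied boxes.

    Returns (combinations, variations), each a list indexed by occupied boxes 1..n.
    """
    distinct = Counter()
    identical = set()
    for assignment in product(range(n), repeat=n):  # ball i goes to box assignment[i]
        distinct[len(set(assignment))] += 1
        identical.add(tuple(assignment.count(box) for box in range(n)))  # occupancy vector
    identical_by_k = Counter(sum(1 for c in occ if c > 0) for occ in identical)
    ks = range(1, n + 1)
    return [identical_by_k[k] for k in ks], [distinct[k] for k in ks]

for n in (2, 3, 4):
    print(n, *occupancy_counts(n))
```

Brute force grows as nⁿ, so this is only a check for small n; the closed formulas below do the general case.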

In general, let us have n balls and as many boxes. There are n ways to put all the balls in just one box, both for combinations and for variations. Two of the n boxes can be chosen in $\binom{n}{2} = n(n-1)/2$ ways, and for each such pair the balls can be split between the two boxes in n − 1 ways as combinations (order not distinguished); multiplying by the number of pairs gives a total of $n(n-1)^2/2$ arrangements.

This is the general formula for combinations when n balls are arranged in as many boxes with exactly two boxes occupied. Note that it agrees with the numbers found earlier for n = 2, 3 and 4, namely 1, 6 and 18 arrangements.

When we arrange the same n balls in n boxes as variations (order matters), we again choose two of the n boxes in n(n − 1)/2 ways, and each choice has $2^n - 2$ arrangements. Namely, the first of the two boxes may hold one of the n balls in $\binom{n}{1}$ ways with all the others in the second, or two in $\binom{n}{2}$ ways with the rest in the second, ..., or n − 1 in $\binom{n}{n-1}$ ways with the remaining one in the second. Hence:

$$\binom{n}{1} + \binom{n}{2} + \dots + \binom{n}{n-1} = 2^n - 2, \qquad \frac{n(n-1)}{2}\,(2^n - 2) = n(n-1)(2^{n-1} - 1).$$

Note that this general number of variations agrees with the previous specific one for n = 2, 3 and 4, when we get 2, 18 and 84 respectively.
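The two-box formulas can also be checked mechanically: $\binom{n}{2}(n-1)$ combinations and $\binom{n}{2}(2^n - 2)$ variations, together with the binomial identity behind the second one. The function names are illustrative.

```python
from math import comb

def two_box_combinations(n: int) -> int:
    """Indistinguishable balls, exactly two of n boxes occupied: C(n, 2) * (n - 1)."""
    return comb(n, 2) * (n - 1)

def two_box_variations(n: int) -> int:
    """Distinguishable balls, exactly two of n boxes occupied: C(n, 2) * (2**n - 2)."""
    return comb(n, 2) * (2**n - 2)

print([two_box_combinations(n) for n in (2, 3, 4)])  # [1, 6, 18]
print([two_box_variations(n) for n in (2, 3, 4)])    # [2, 18, 84]
# Identity used for the variations: C(n,1) + ... + C(n,n-1) = 2**n - 2
print(all(sum(comb(n, k) for k in range(1, n)) == 2**n - 2 for n in range(2, 12)))
```

Both lists reproduce the values found by hand for n = 2, 3 and 4.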

Finally, filling each box with one ball gives one combination and n! = 1⋅2⋅3⋯n permutations (variations of all). This last number of combinations (1) is certainly not larger than the others, as we saw in the examples: for n = 2 (2, 1), for n = 3 (3, 6, 1) and for n = 4 (4, 18, 12, 1). However, even the last number, that of permutations, need not be the largest of the variations, as in the examples n = 3 (3, 18, 6) and n = 4 (4, 84, 144, 24).

Therefore, probability is not the only "force" that distributes gas molecules uniformly in Boltzmann's room; alongside it there is the "pushing" of the molecules themselves, i.e. their oscillation and incompressibility. From this follows the spontaneous growth of entropy as we know it. However, if the particles are neither incompressible nor repel one another, then there is no spontaneous growth of entropy as we know it from Boltzmann.

So it is not that I "doubt" Boltzmann's statistical entropy; rather, I point out its limitations. Only the arrangement of "molecules" by probability is considered here, and from it we can see that their vibration is an equally important factor, if not a more significant one.

Question: Does entropy increase by occupying the most probable states?

Answer: Yes, since everything around us develops so as to occupy the (statistically) most probable states under the given circumstances. The remark about "circumstances" also matters, as the previous calculations of the arrangement of "molecules" by "possibilities" show. The molecules are not in the "most probable" states, because the others push them out of these with their oscillations and nudges; that is why they occupy arrangements that are "compromises" between the two.

This addition (the compromise) is consistent with (my) "information theory", in which force (pushing) is what changes the probabilities.

With the loss of information, when the particles oscillate more gently, a drop in heat (Q) and a drop in temperature (T) occur. The latter decreases faster than the former, the denominator of S = Q/T faster than the numerator, because the entropy (S) increases and the particle arrangements become more probable. The variations take on more likely arrangements, consistent with the above calculation, while the particles give up more uniform patterns and turn to slightly more uneven ones.

Uniform arrangements, like uniform probability distributions, would carry more information, and nature spontaneously escapes from it towards less (if it can).

Solenoid »

Question: Your generalization of Hartley's information to complex numbers seems very unusual to me; can you give another example?

Answer: I explained it recently (Information) in a very simple answer. Read it carefully; it is short and, I hope, convincing.

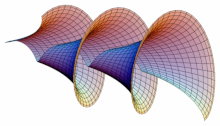

Following this idea further connects several well-known concepts of quantum physics, primarily those we express with complex numbers, with "information".

To see what this is about, it is best to look at an "extreme" example (Aharonov–Bohm effects), which lies at the edge, and perhaps beyond the edge, of accepted physics. In the picture, two electrons traveling together pass the solenoid on opposite sides. They illustrate the magnetic Aharonov–Bohm effect.

Although the magnetic field of the solenoid (purple flux lines) is almost entirely confined to the inside of the coil, the vector potential A outside it (tangential to the green coaxial circles) decreases only as 1/r with the distance r from the axis. One of the two paths is parallel to A (at the nearest point), while the other is antiparallel. Even in the absence of any Lorentz force on the electrons, this difference produces a quantum-mechanical phase shift between their wave functions.
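The key fact can be checked numerically for an idealized, infinitely long thin solenoid with enclosed flux Φ (an assumption of this sketch): outside it the field vanishes, but A has magnitude Φ/(2πr) in the azimuthal direction, so the line integral ∮A⋅dl around any circle enclosing the solenoid equals Φ, whatever the radius. The function names and the value Φ = 2 are illustrative.

```python
import math

def vector_potential(x: float, y: float, flux: float):
    """A outside an ideal thin solenoid along z: magnitude flux / (2 pi r), azimuthal direction."""
    r2 = x * x + y * y
    scale = flux / (2 * math.pi * r2)  # |A| / r, so that |A| = flux / (2 pi r)
    return -y * scale, x * scale       # (A_x, A_y), tangent to circles around the axis

def loop_integral(radius: float, flux: float, steps: int = 10_000) -> float:
    """Numerically evaluate the line integral of A around a circle (midpoint rule on chords)."""
    total = 0.0
    for k in range(steps):
        t0, t1 = 2 * math.pi * k / steps, 2 * math.pi * (k + 1) / steps
        tm = (t0 + t1) / 2
        ax, ay = vector_potential(radius * math.cos(tm), radius * math.sin(tm), flux)
        dx = radius * (math.cos(t1) - math.cos(t0))
        dy = radius * (math.sin(t1) - math.sin(t0))
        total += ax * dx + ay * dy
    return total

for r in (1.0, 5.0, 50.0):
    print(r, loop_integral(r, flux=2.0))  # about 2.0 at every radius: the enclosed flux
```

The 1/r decay of A exactly compensates the growing circumference, which is why the effect does not weaken with distance.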

At the points nearest the solenoid, one of the two electrons moves parallel to A on one side and the other antiparallel on the other side. Quantum mechanically, the electrons interact with the solenoid because the wave function accumulates a phase shift along each path. For details of these calculations, see the Proseminar on Algebra script (Simon Wächter, 2018, p. 11) or similar.

The physically important quantity is the difference between the phases accumulated along the two paths, on either side of the solenoid in the image here, or, in the script mentioned, the sum over the paths through the two openings. This difference (or sum) gives the amplitude of the wave function:

$$\psi \propto \exp\left(\frac{iq}{\hbar c}\oint \mathbf{A}\cdot d\mathbf{l}\right) = \exp\left(\frac{iq\Phi}{\hbar c}\right).$$

As we can see, it (ψ) is also proportional to the exponential function of the "information", which now contains the imaginary unit (i² = −1), the charge q, the reduced Planck constant ℏ and the speed of light c, in the fraction multiplying the flux Φ = ∮A⋅dl. The generalized Hartley "information" is again equal to the logarithm of the "probability", i.e. of the wave function.
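A numerical sketch of the phase factor, written in SI units, where the Gaussian factor q/(ℏc) becomes q/ℏ. With q equal to the elementary charge, an enclosed flux of h/e turns the phase through a full 2π, and half of that flux gives the factor −1, i.e. the interference fringes shift by half a period. Constant and function names are illustrative; the constants are the exact SI values.

```python
import cmath
import math

H = 6.62607015e-34          # Planck constant, J s (exact SI value)
HBAR = H / (2 * math.pi)    # reduced Planck constant
E_CHARGE = 1.602176634e-19  # elementary charge, C (exact SI value)

def ab_phase(flux_wb: float, charge: float = E_CHARGE) -> float:
    """Aharonov-Bohm phase difference q * Phi / hbar in SI units (Gaussian: q * Phi / (hbar c))."""
    return charge * flux_wb / HBAR

flux_quantum = H / E_CHARGE  # h/e, in webers
print(ab_phase(flux_quantum) / (2 * math.pi))      # 1.0: one full turn of phase
print(cmath.exp(1j * ab_phase(flux_quantum / 2)))  # about -1: fringes shift by half a period
```

Only the phase factor is computed here; the absolute value of the amplitude stays 1, which is why the effect is invisible without interference.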

I suppose this result belongs to "reality" and to classical physics as well, but it was not obtained in the calculations there because they avoid complex numbers (Complex). Furthermore, I suppose this phenomenon could have the explanation I proposed for quantum tunneling (Quantum Tunneling), one that rests on the existence of additional dimensions of time (Dimensions).

Because of the law of large numbers (probability theory), I do not expect this "bypass" through "parallel realities" to be easily seen in the macro world, but a recent article seems to announce this possibility (gravitational Aharonov–Bohm effect).

In a more accessible article about the same "gravitational AB effect" (Emily Conover, Physics Writer), she writes: "If you’re superstitious, a black cat in your path is bad luck, even if you keep your distance. Likewise, in quantum physics, particles can feel the influence of magnetic fields that they never come into direct contact with. Now scientists have shown that this eerie quantum effect holds not just for magnetic fields, but for gravity too — and it’s no superstition..." An interesting description.

Bypass »

Question: What do you mean by "bypassing" reality?

Answer: This is a new possibility arising from the premises of "information theory" (Dimensions), one that today's deterministic physics lacks: a detour through other options. It also implies gathering the imaginary into some reality.

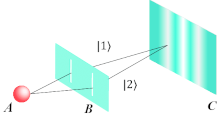

In the figure, the quantum particle-wave from position A passes through one of the two openings B and reaches the screen C. The functions |1⟩ and |2⟩ are the possibilities. Down at the level of the micro world, where the law of large numbers has not yet taken full hold (it is what turns the chance events of these tiny states into the necessities of large ones), we use complex numbers for functions and particles, but always real numbers for observables. I will explain how subtle, yet important, this is.

We do not invent mathematics, we discover it! No piece of mathematics (theorem, axiom, definition) can be declared to have nothing to do with reality, and that shapes the understanding of complex numbers in (my) information theory. Ignoring them in the "world of chance", where an option means some "imaginary" reality from which a "real" reality can arise again, would mean missing a lot and, among other things, failing to understand the bypass we are talking about here.

Adhering to the principles of information theory (conservation, minimalism and multiplicity), there are options that we write down with complex numbers, which ultimately give real values, and without which something in that reality would be missing. Here are examples.

We know that the square of the imaginary unit, $i^2 = -1$, is minus one, a real number, and that no real number has the same property. A complex number $z = x + iy$ is formed by adding a real number ($x$) to an imaginary one ($iy$). Conjugation ($z^* = x - iy$), which changes the sign of every imaginary unit ($i \to -i$), gives an interesting possibility: for any function $f$ with real coefficients, so that $f(z^*) = f(z)^*$, the number $g(z) = f(z) + f(z^*)$ is always real. Namely, then $g^* = f(z)^* + f(z^*)^* = f(z^*) + f(z) = g$, whatever $z$ is, and only real numbers are equal to their conjugates.
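A quick numerical check of this conjugation argument, as a minimal Python sketch. The functions `g`, `real_poly` and `not_real` are hypothetical illustrations: the polynomial has real coefficients, so `g` comes out real, while f(z) = iz violates f(z*) = f(z)* and gives a purely imaginary `g`.

```python
def g(f, z: complex) -> complex:
    """g(z) = f(z) + f(z*), as in the text."""
    return f(z) + f(z.conjugate())

def real_poly(z: complex) -> complex:
    # Real coefficients, so real_poly(conj(z)) == conj(real_poly(z))
    return 3 * z**3 - 2 * z + 5

def not_real(z: complex) -> complex:
    # Complex coefficient i: the condition f(z*) = f(z)* fails here
    return 1j * z

z = 1.5 + 2.0j
print(g(real_poly, z))  # imaginary part is zero
print(g(not_real, z))   # 3j, purely imaginary
```

Any real-coefficient polynomial can replace `real_poly`; the condition f(z*) = f(z)* is what makes the sum real.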

In short, through complex numbers we can obtain some realities that we would miss without them. Therefore, the functions |1⟩ and |2⟩ in the figure can both be complex, and their sum, the interference, gives a real diffraction pattern on the screen C. That is a description of the known double-slit experiment (Double Slit) in the manner of information theory. The explanation of the AB effect (Solenoid) is similar.

Question: Where is the information in this?

Answer: Okay, I get it, I'll explain that too.

The function $g = (1 + i)^n + (1 - i)^n$ gives a real number for every exponent $n$. If we normalize it, it becomes a candidate for a wave function of quantum mechanics. The square of its modulus is the probability of finding an observable (a given property, by measurement). In particular:

We notice that this "information" is imaginary, nonexistent for deterministic physics, and that only the superposition of both terms (giving equal chances to both) becomes real. Quantum physics has known this for a long time and has always been puzzled by it, but only now, with the new settings of information theory, do its observations gain a clearer meaning.
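To see concretely that each term of g is complex while their sum is real, here is a small Python sketch (the range n = 0, ..., 8 is an arbitrary choice). It also compares g with the closed form $2 \cdot 2^{n/2}\cos(n\pi/4)$, which follows from writing $1 \pm i = \sqrt{2}\, e^{\pm i\pi/4}$; that rewriting is standard algebra, not something taken from the text.

```python
import math

def g(n: int) -> complex:
    """g = (1 + i)^n + (1 - i)^n: each term is complex, the sum is real."""
    return (1 + 1j) ** n + (1 - 1j) ** n

for n in range(9):
    # 1 ± i = sqrt(2) * exp(±i*pi/4), so the sum equals 2 * 2**(n/2) * cos(n*pi/4)
    closed_form = 2 * 2 ** (n / 2) * math.cos(n * math.pi / 4)
    print(n, (1 + 1j) ** n, g(n), round(closed_form, 9))
```

The printed terms `(1 + 1j) ** n` carry imaginary parts, while every `g(n)` has a zero imaginary part.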

The information in these phenomena is hidden in the possibilities that give meaning to parallel realities. Without them, the whole story of imaginary numbers in mathematics would remain rather strange, or, as someone wrote: "The fact there are imaginary numbers in mathematics is proof that humans create their own problems and then cry."

Free particle »

Question: So (Information), is a free particle a clear example of "information"?

Answer: Yes, and not just because of its form, $\psi = A e^{i\varphi}$.

By substitution, it is easy to check that the wave function ψ is a solution of its Schrödinger equation, Ĥψ = Eψ. The amplitude A is real, but the function ψ is complex, so its logarithm defines the alleged information.

When A → 0, then |ψ| → 0, otherwise the logarithm would diverge. There are no other restrictions on these pairs (amplitude, wave function): almost every wave function of the above form solves the Schrödinger equation, and so does their sum $\psi = \psi_1 + \psi_2 + \psi_3 + \dots$, where $\psi_k = A_k e^{i\varphi_k}$ for the indices k = 1, 2, 3, ... Hence, by summing such terms, analogously to Fourier series, the collective wave function appears. You will find details about this in my book Quantum Mechanics.
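The claim that such terms and their sums solve the Schrödinger equation can be checked numerically. The sketch below assumes units with ħ = m = 1, a free particle in one dimension, and hypothetical (A_k, k) pairs. Each term $A_k e^{i(kx - k^2 t/2)}$ is an energy eigenfunction with E = k²/2, and the sum satisfies the time-dependent equation $i\psi_t = -\tfrac12 \psi_{xx}$, which the code verifies with central finite differences.

```python
import cmath

# Assumed units: hbar = m = 1. Hypothetical (amplitude A_k, wave number k) pairs.
COMPONENTS = [(1.0, 0.5), (0.7, 1.3), (0.2, -2.0)]

def psi(x: float, t: float) -> complex:
    """psi = sum_k A_k exp(i*phi_k), with phi_k = k*x - (k**2 / 2) * t for a free particle."""
    return sum(a * cmath.exp(1j * (k * x - 0.5 * k * k * t)) for a, k in COMPONENTS)

def schrodinger_residual(x: float, t: float, h: float = 1e-4) -> complex:
    """i*dpsi/dt + (1/2)*d2psi/dx2 by central differences; close to 0 for a solution."""
    dpsi_dt = (psi(x, t + h) - psi(x, t - h)) / (2 * h)
    d2psi_dx2 = (psi(x + h, t) - 2 * psi(x, t) + psi(x - h, t)) / h**2
    return 1j * dpsi_dt + 0.5 * d2psi_dx2

for x, t in ((0.0, 0.0), (1.3, 0.7), (-2.1, 3.4)):
    print(x, t, abs(schrodinger_residual(x, t)))
```

The residuals are at the level of finite-difference error; changing the phase to, say, k*x - k*t would make them clearly nonzero.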

An interesting remark here comes from (my) information theory, for which classical physics has no proper explanation. It concerns the "Bypass". The solutions of the Schrödinger equation can be such that the mentioned sum ψ has an unlimited number of terms, infinitely many of which have zero amplitude. In each of these summands, the number $e^{i\varphi}$ is real only for some exponents ($e^{i\pi} = -1$ and its square), and since it is implausible that a particle exists at only two such points, or at a few similar ones, I consider this "remark" more than a hypothesis.

Namely, real quantities are observable, and we get real numbers by calculating from purely imaginary or complex ones, so I speculate that unreal quantities act on actuality from parallel realities, including the past. Therefore, behind the above forms lies the deeper essence of the "information universe". It is at least 6-D, and as such it surrounds the 4-D space-time that we, for now, perceive as the only physical reality.

The present »

Question: Does "bypassing" have anything else to do with the present?

Answer: Yes, there is more than enough to take it seriously. Some of the connections are very surprising.

Our present is "moving" towards the future, not towards the past, because we have more information from the past, and then there is the principle of least communication. The significant role of the "Bypass" in this reasoning is noticeable.

In some cases, the same reason is better disguised. For example, we know from special relativity (Time Arrow) that the relative time of a moving body flows slower than its own (proper) and that at the moment of passing the two presents will coincide. This means that a receding body will go into the past of the relative observer, and an approaching body will come to it from the future.

When the body is a source of light, it marks space-time with a "red shift" (Doppler effect): longer wavelengths from a receding source and shorter ones from an approaching source. These wavelengths speak of the uncertainty of the position of the photons of the receding or approaching source, and of the probability densities of position in those regions. Looked at that way, the future is more probable.
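For reference, the standard relativistic (longitudinal) Doppler formula behind the red and blue shifts can be sketched as follows. The emitted wavelength of 500 nm and the speeds ±0.1c are hypothetical choices, and the sketch says nothing about the probabilistic reading proposed in the text.

```python
import math

def doppler_ratio(beta: float) -> float:
    """Relativistic longitudinal Doppler factor: observed / emitted wavelength.

    beta = v / c along the line of sight; beta > 0 means the source recedes
    (longer, redder wavelengths), beta < 0 means it approaches (shorter, bluer).
    """
    return math.sqrt((1 + beta) / (1 - beta))

emitted_nm = 500.0  # hypothetical emitted wavelength
for beta in (0.1, -0.1):
    print(f"beta={beta:+.1f}: observed {emitted_nm * doppler_ratio(beta):.1f} nm")
```

The factors for +β and −β are reciprocal, so the shifts are symmetric on a logarithmic scale.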

The second reason, like the first, is a consequence of the principled minimalism of information, because less informative states are more probable. In the long run, this again has to do with the bypass, because the "incoming" information we are talking about actually comes from pseudo-reality.

A similar, yet slightly different, observation is that what we do not know, what would be real news for us, the inevitable uncertainty before information about it arrives (i.e. before communication), does not belong to the current world, our present. This is not much different from the "absence" of one end of our body relative to the other, due to the finite speed of light.

Acceleration »

Question: Can time accelerate?

Answer: Yes, since time can flow at different speeds.

For example, a body in uniform rectilinear motion will have a slower flow of time the higher its speed. The constant increase in the speed of such a body will be accompanied by a disproportionately higher cost of energy and its comparatively slower time. Conversely, the constant slowing of such a body is accompanied by a disproportionately faster flow of time.
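The standard special-relativity formulas behind this paragraph, the clock-rate factor 1/γ and the kinetic energy (γ − 1)mc², can be sketched in a few lines of Python. The chosen speeds and the 1 kg mass are hypothetical; the point is that ever smaller steps in speed near c cost ever more energy while the moving clock slows further.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def lorentz_gamma(v: float) -> float:
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)

def clock_rate(v: float) -> float:
    """Proper time of the moving body per unit of the observer's time (1/gamma)."""
    return 1.0 / lorentz_gamma(v)

def kinetic_energy(m: float, v: float) -> float:
    """Relativistic kinetic energy (gamma - 1) m c^2, in joules."""
    return (lorentz_gamma(v) - 1.0) * m * C ** 2

for beta in (0.5, 0.9, 0.99):
    v = beta * C
    print(f"v = {beta}c: clock rate {clock_rate(v):.3f}, KE of 1 kg {kinetic_energy(1.0, v):.3e} J")
```

Going from 0.9c to 0.99c costs more energy than going from 0.5c to 0.9c, although the speed gain is much smaller.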

If we believe that the "Time Arrow" is a consequence of entropy, and that the probability of distribution is important to it, then the law of large numbers of probability theory is inevitable. In large-scale distributions, differences in close possibilities are less important, and their chances are statistically equal.

In other words, if the substance of the universe melts into space, it disappears and space grows. Then the more probable arrangements become more significant relative to the slightly less probable ones, while at the same time the multitudes shrink, and together they will affect the acceleration of the "arrow of time". We are talking about the diminution of the substance of the universe, where we define the flow of time by the volume of outcomes, so that a smaller number means a relatively slower flow; hence the even slower flow of time of our present relative to some (imaginary) observer fixed in the past (Growing).

Over time, as there is less and less substance, the process of space formation will be increasingly opposed by the "entropy" of space, which differs from Boltzmann's in its optimum. Namely, if the balls mentioned above are indistinguishable, they are arranged according to a different distribution, and the pushing is missing. Their optimum then lies between the extremes, from all the mentioned balls in one box to one ball in each. The most probable change of space will then be its shrinkage, with the cessation of the "acceleration" of time, and perhaps a complete cessation.
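The difference between distinguishable and indistinguishable balls can be illustrated by counting, as a minimal sketch assuming the usual balls-in-boxes picture (4 balls and 2 boxes are hypothetical numbers). With distinguishable balls, the even split has the most microstates (Boltzmann-style counting); with indistinguishable balls, each occupancy pattern counts once (Bose-Einstein-style counting), so no pattern is favored. The sketch does not reproduce the text's notion of the "entropy" of space; it only shows the change in counting.

```python
from collections import Counter
from itertools import product
from math import comb

def distinguishable_counts(n_balls: int, n_boxes: int) -> Counter:
    """Microstates per occupancy pattern when every ball has its own identity."""
    counts = Counter()
    for assignment in product(range(n_boxes), repeat=n_balls):
        pattern = tuple(assignment.count(box) for box in range(n_boxes))
        counts[pattern] += 1
    return counts

counts = distinguishable_counts(4, 2)
total = sum(counts.values())
for pattern in sorted(counts):
    # Distinguishable: probability = microstates / total.
    # Indistinguishable: each pattern is a single microstate, all equally likely.
    print(pattern, counts[pattern] / total, 1 / len(counts))
print(len(counts) == comb(4 + 2 - 1, 2 - 1))  # number of patterns for identical balls
```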

Recursion »

Question: How can a "quantum of information" affect the future of everything, the universe?

Answer: Much as in the deterministic theory of chaos, except that information theory drops the adjective deterministic (1.1.5 The universe and chaos, in the book Space-Time). Moreover, there is no "something" that changes "everything".

Perceptions always come in finite, discrete packages, at most countably many, and therefore the universe of experience is periodic. It also happens that "Waving butterfly wings in Mexico results in a storm in Texas", as Edward Lorenz (Butterfly Effect), one of the founders of chaos theory (1961), put it metaphorically. But let us briefly consider these one by one.

For example, consider the simple recursion $x \to x^2 - 1$, in which we subtract one from the square of a two-digit number and round the result to its first two digits (halves rounded up). Whichever number we start from, within at most 90 steps (there are only 90 two-digit numbers) some number must repeat, and from then on the sequence keeps returning along the same loop. In the given case it is periodic, with period 20: 44, 19, 36, 13, 17, 29, 84, 71, 50, 25, 62, 38, 14, 20, 40, 16, 26, 68, 46, 21, 44.
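The recursion can be reproduced in a short Python sketch. The rounding rule (round half up to the first two digits) is inferred from the listed sequence, e.g. 36² − 1 = 1295 → 13 and 14² − 1 = 195 → 20; `step` and `find_cycle` are hypothetical helper names.

```python
def step(x: int) -> int:
    """x -> x**2 - 1, then round to the first two digits (halves rounded up)."""
    n = x * x - 1
    digits = len(str(n))
    if digits <= 2:
        return n
    scale = 10 ** (digits - 2)
    q, r = divmod(n, scale)
    if 2 * r >= scale:  # round half up, e.g. 195 -> 20
        q += 1
    return q

def find_cycle(start: int) -> tuple[list[int], list[int]]:
    """Iterate until a value repeats; return (values before the loop, the loop)."""
    first_seen: dict[int, int] = {}
    seq: list[int] = []
    x = start
    while x not in first_seen:
        first_seen[x] = len(seq)
        seq.append(x)
        x = step(x)
    i = first_seen[x]
    return seq[:i], seq[i:]

prefix, cycle = find_cycle(44)
print(prefix, cycle, len(cycle))
```

Starting from any other two-digit number, `find_cycle` shows the short run-in before the sequence falls into a loop.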

Recursion (Latin recursio, from recurrere: to run back) in mathematics and computer science means a procedure or function that uses itself in its own definition. We take recursion as a model of periodic processes and, due to duality (processes and states, linear operators and the vectors on which they act), also as a model of periodic states.

The decimal expansions of rational numbers are (eventually) repeating strings (Waves). The numbers they represent form a countably infinite set. Opposite them are the non-periodic decimals of irrational numbers, a continuum of them, one of the countless "uncountable" infinities larger than the countable one. Examples of irrationals are the square root of two (√2 = 1.41421...) and the well-known ratio of a circle's circumference to its diameter (π = 3.14159...). It is interesting that the digits of such irrational numbers would pass a "randomness test": at first glance they behave like truly random numbers, although we know that they are not.
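The periodicity of rational decimals follows from long division: there are only q possible remainders, so one must repeat. A minimal Python sketch (the function name `decimal_expansion` is hypothetical) finds the repeating block by remembering remainders; for contrast, the last line gets the first digits of √2 exactly with `math.isqrt`, where no such repetition ever occurs.

```python
import math

def decimal_expansion(p: int, q: int) -> tuple[int, str, str]:
    """Split p/q (p >= 0, q > 0) into integer part, pre-period digits and repeating digits.

    Long division: a remainder that reappears means the digits repeat from there.
    """
    integer, r = divmod(p, q)
    digits: list[str] = []
    seen: dict[int, int] = {}  # remainder -> index of the digit it produced
    while r != 0 and r not in seen:
        seen[r] = len(digits)
        d, r = divmod(r * 10, q)
        digits.append(str(d))
    if r == 0:  # terminating decimal: the repeating part is empty
        return integer, "".join(digits), ""
    start = seen[r]
    return integer, "".join(digits[:start]), "".join(digits[start:])

print(decimal_expansion(1, 7))   # (0, '', '142857')
print(decimal_expansion(1, 6))   # (0, '1', '6')
print(decimal_expansion(22, 7))  # (3, '', '142857')

# First digits of the irrational sqrt(2) via exact integer square root
print(math.isqrt(2 * 10**10))    # 141421, i.e. sqrt(2) = 1.41421...
```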

Unlike discrete outcomes (events of reality), whose infinity is countable (ℵ0), the possibilities of the universe are a continuum (𝔠). From that, in the first step, follows its unpredictability.

With the smallest of the possible infinities, the world of reality barely manages to retain attributes such as uniqueness, singularity, or multiplicity. I remind you that the law of conservation applies to information and, on the other hand, that repeated "news" is no longer news. This is the root of all the strange differences within that confusing world, even of the "butterfly effect", which, seen in this way, belongs more to the normality of the "universe of possibilities" than of the "deterministic" one.

However, unpredictability exceeds our power of cognition by at least so much (in fact by far more, but that is not important for this part of the discussion) that there can be no realistic expectation that we could ever know everything, that such an ability was given to us at all, or that there is "something" that could change "everything" within itself.

Refraction »

Question: Do you have anything to say about the refraction of light that you did not say anywhere?

Answer: I have one interesting speculation that I still keep in reserve. What if the refraction of light through Newton's prism (split light) is not so much an indicator of a fixed composition of white light as a distribution of probabilities written in color?

In the picture, the incoming white light is split at the exit from the glass prism, from top to bottom, into red, orange, yellow, green, blue, indigo and violet, from 750 nm to 380 nm (Wavelengths). Visible light has its longest wavelength in red and its shortest in violet. The greater the wavelength of the photon (the particle-wave of light), the greater its positional uncertainty and the lower the probability of finding it at a given place, which from the point of view of "perception information" means less possibility of such a perception.

The announced "speculation" is not this part of the description, but what follows when we change the usual description: the specific components of white light are not in the given colors, but in the distribution of all those wavelengths (probability densities) that we see in the output spectrum. From this new interpretation I took the explanation of the Compton effect, which I have written about several times (Scattering).

It is no problem that the same interpretation "explains" the refraction of light. Namely, red wavelengths speak of lower probabilities of something, so it is logical to extend this abstract notion of probability to the chances of their interactions with the medium through which they pass, relative to the perceiving device (us), the subject of perception.

That is why red light bends the least and violet the most, because that is the order of their power of interaction, the probability of "getting stuck" while passing through the optical medium. So this "speculation" is not without basis, and that is why I keep it "in reserve". More precisely, I consider it possibly the other side of the same coin, just as an ellipse is both a conic section and a curve of the second order.
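For comparison, the conventional description of dispersion can be sketched with Cauchy's empirical law n(λ) = A + B/λ² and Snell's law. The coefficients below are assumed, approximately those quoted for BK7 glass, and the 45° incidence is hypothetical; the sketch only reproduces the observed ordering (red bends least, violet most) and does not model the probabilistic interpretation proposed here.

```python
import math

# Assumed Cauchy coefficients, roughly those quoted for BK7 glass; wavelength in micrometres.
CAUCHY_A, CAUCHY_B = 1.5046, 0.00420

def refractive_index(wavelength_um: float) -> float:
    """Cauchy's empirical dispersion law n = A + B / lambda^2."""
    return CAUCHY_A + CAUCHY_B / wavelength_um**2

def bend_deg(wavelength_um: float, incidence_deg: float = 45.0) -> float:
    """Angle by which a ray turns on entering the glass from air (Snell: sin i = n sin t)."""
    i = math.radians(incidence_deg)
    t = math.asin(math.sin(i) / refractive_index(wavelength_um))
    return math.degrees(i - t)

for name, lam in (("red", 0.750), ("green", 0.530), ("violet", 0.380)):
    print(f"{name:6s} n = {refractive_index(lam):.4f}, bend = {bend_deg(lam):.3f} deg")
```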

In mathematics, we are already very accustomed to encountering the same appropriate theorems in different fields, and then to find the same physical interpretations from those different domains. Maybe it's time for physics to start seeing something similar.

Win Lose »

Question: How would we rank strategies in games to win?

Answer: You can find more about this elsewhere (Good Strategy).

Mathematical game theory and information theory differ greatly in their methods and have few elements in common. In the manner of information theory, a stronger, more vital game is defined by its greater "Information Perception" (S = ax + by + cz + ...).

That is why retaliation tactics belong to the "first league". These are the strongest games, in which the first competitor answers the opponent's larger actions (x, y, z, ...) with larger coefficients (a, b, c, ...), and the smaller ones with smaller ones. They give the highest score (S) and mean the highest vitality in the competition. That is all. There is no such thing as "information perception" in game theory, but its results will be consistent with this. The weakest game, from the "third league", will be evasion, which combines weak actions with strong ones (as coefficients of the factors).
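The ranking described here matches the rearrangement inequality, a standard result not named in the text: a sum of products S = ax + by + ... is largest when both sequences are ordered the same way and smallest when they are ordered oppositely. Below is a brute-force Python sketch with hypothetical numbers; `perception_information` and `ordered_like` are illustrative names, and labeling the extremes "retaliation" and "evasion" follows the text's analogy.

```python
from itertools import permutations

def perception_information(coeffs, actions) -> float:
    """S = a*x + b*y + c*z + ...: scalar product of the two players' vectors."""
    return sum(a * x for a, x in zip(coeffs, actions))

def ordered_like(coeffs, actions, reverse=False):
    """Assign coefficients by rank: largest to largest action (or to smallest if reverse)."""
    ranks = sorted(range(len(actions)), key=lambda j: actions[j])
    result = [0.0] * len(actions)
    for j, c in zip(ranks, sorted(coeffs, reverse=reverse)):
        result[j] = c
    return tuple(result)

actions = [5, 1, 3, 2]   # hypothetical opponent moves x, y, z, w
coeffs = [1, 2, 4, 0.5]  # hypothetical strengths the first player can distribute

scores = {p: perception_information(p, actions) for p in permutations(coeffs)}
best = max(scores, key=scores.get)
worst = min(scores, key=scores.get)
print(best, scores[best])    # matched by rank ("retaliation")
print(worst, scores[worst])  # weak against strong ("evasion")
```

Brute force over all 24 orderings is only for illustration; the inequality says sorting alone finds both extremes.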

Between the "first" and "third" league is the "second", whose practitioners progress to victory also by making sacrifices (gambits in chess, investments in the economy, hard work to succeed on the job). I do not deal with further details of tactics in games to win, except for their classification into these three categories (Tactics).

Mathematical game theory discovered the first league only recently (Tit-for-tat); the second is usually called lose-lose, and the third gain-gain (win-win). I know that they are studied in better schools of economics and that these tactics would be valid in their own way in politics and war. In politics, for example, the compromise tactic (gain-gain) is popular, which fits the description of politics as "the art of the possible", plus manipulation.

Every tactic of the first league will beat every tactic of the second, and every tactic of the second will beat every one of the third. This is common to the two theories, as far as games are concerned. Let us not forget that, in matters of probability, the word "every" should be accompanied by "almost".

Added to this third-league tactic, the skill of lying, cheating and manipulation can raise politicians toward the level of the second league, and somewhere in between lies their ceiling. That is why politics, wherever it interferes with higher-level skills, will spoil things and lower their level, like a grandmaster's chess game handed over for continuation to a much weaker player.

That is why politicians need the strength of institutions, the police and the army to dominate. On their own they would be powerless, because their tactics are so poor. This could be understood as a "happy" circumstance (for freedoms and the development of society), provided that politics wants to, and manages to, stay out of matters that should not concern it. Unfortunately for the success of the people, power is sweet, and that is not said in vain.

Sneaking »

Question: Can tactics from the first league be overcome by second-class ones?

Answer: A good choice of words is to "overcome", that is, to outwit or bypass, because in direct conflict it (Tit-for-tat) cannot be defeated by worse tactics, those of the second or third league. See the previous answer (Win Lose).

The picture on the right (Sneaking Jaguar) shows a predator sneaking up, which reduces the prey's options for defense by the time it is clear to the victim that the fight for life and death has begun. The lion will additionally discourage the prey with a roar from any intention to resist.

In perhaps his greatest victory (Napoleon's Masterpiece), at Austerlitz in 1805, Napoleon demonstrated one of the best tactics of the "first league" in terms of unpredictability and timeliness. But the literature rarely mentions that he further strengthened his future success by hiring actors from the French theater. He hired these professionals on the eve of the main battle to meet with enemy envoys.

The actors played "exhausted and sick soldiers": they successfully imitated discouragement and a tendency toward disobedience, with a desire to rebel against their emperor. They would sadly repeat "if only there were no war" and complain about the lack of food, clothes and weapons. Interestingly, Kutuzov saw through the deception, but the others did not believe him, and with this "strange" act, superfluous as some would say, Napoleon managed to get himself underestimated in that famous "Battle of the Three Emperors".

It has been the usual style of Britain and other successful empires throughout history to infiltrate foreign territory and continue conquering by applying pressure and calling for compromises. They come to some territory (India, North America once), stick a flag in the ground and "declare" it their own, to paraphrase. The natives or villagers around might marvel at "those fools", but the newcomers ignore it and slowly, systematically tighten their hold on the residents through organization, institutions, the army and the police. After a while, the new territory becomes their dominion.

It is a simple tactic of "false information" which, if it succeeds, avoids the possibly invincible resistance of some variation of the first league. Slightly greater pressure from one side and calls for compromise (win-win) make imperceptible progress, and the opponent withdraws until it is too late.

We have seen with Napoleon that appearing weaker than one is can give an advantage over the opponent, but it can also lower one's own fighting spirit. It is well known how much "peaceful ideas" spread among enemy troops, like misinformation planted there, help to defeat them.

A lie is a hidden truth (Information Stories, 3.7 Dualism Lies) and, consistent with the principled minimalism of information, it has some advantage over the truth in spreading. But it is also covert information, so it does not have its full force. That is why it is easily defeated by the truth, and why it is unreliable and unstable. However, this makes it ideal for preparing the ground by deception, in order to mask and block the best tactics (Tit-for-tat).

Multilogic »

Question: What will happen to polyvalent logics?

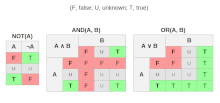

Answer: Polyvalent logics (true, maybe, ..., false) can all be reduced to binary logic (true, false), so they may seem unnecessary, but that is not so. Multivalued logics could be applied in quantum computers, primarily through "superposition", or the distribution of probabilities of truth, which would flow through their "circuits" (gates).

At the same time, the usefulness of multivalued logics in interpreting the "intensity of lies" will become visible through the needs of the (new) theory of information. An example is the suppression described in Sneaking, by which tactics from a lower league bypass and "overcome" the tactics of a higher league (in games to win).

There are various n-valued logics. Not only can n be any given integer greater than two, it can also denote a continuum of different real values, say between 0 and 1. One usually means "true", zero "false", and the values in between are levels of "maybe". All these logics are equally correct mathematically, but it is difficult to follow them all at once, so the development of their applications may single out and evaluate some of them according to their own needs.

In all of them, the negation of true is false (¬1 = 0), and the negation of false is true (¬0 = 1). In Łukasiewicz's logic (1920), "maybe" (undefined) is negated like a probability (¬u = 1 − u), while in Gödel's logic (1932) negation gives false for all truth values except false, which becomes true (Many-valued logic).

The disjunction (the operation "or", ∨) of statements within these individual logics mostly gives the largest of the operand values, and the conjunction (the operation "and", ∧) the smallest. Therefore, in terms of the best-known operations of logic (negation, disjunction and conjunction), multivalued logics also reduce to the binary one (n = 2) on the values 0 and 1, which will also prove practical.

Implication (if-then) is especially interesting in logic in general, primarily because of its applications, but also because of its relationship to proof by contradiction (Deduction). The implication (u → v) is (mostly) "true" when the truth value of the assumption (u) is not greater than that of the consequence (v). Otherwise (u > v), the value of the implication is "true" (one) reduced by the difference of the two (u − v) in Łukasiewicz's logic, or equal to the value of the consequence (v) in Gödel's.

In all variations of these logics, the rules of multivalued deduction reduce to the corresponding two-valued ones (together with u → v = ¬u ∨ v). Clearly, proof by contradiction will not be fully valid in logics where the negation of "maybe" again gives some "maybe", which is the case, for example, in Łukasiewicz's logic, but not in Gödel's.
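The truth-value rules described above can be sketched in Python with exact fractions. The function names are hypothetical; conjunction and disjunction are taken as min and max, as in the text. The last lines show that both implications agree with ¬u ∨ v on the values 0 and 1, that in Łukasiewicz's logic they differ at u = v = 1/2, and that only Łukasiewicz's negation keeps "maybe" as "maybe".

```python
from fractions import Fraction

ONE, ZERO = Fraction(1), Fraction(0)

def neg_lukasiewicz(u):
    return 1 - u

def neg_godel(u):
    return ONE if u == 0 else ZERO

def disj(u, v):  # "or": the larger value (both logics)
    return max(u, v)

def conj(u, v):  # "and": the smaller value (both logics)
    return min(u, v)

def impl_lukasiewicz(u, v):
    """1 if u <= v, otherwise 1 reduced by (u - v)."""
    return min(ONE, 1 - u + v)

def impl_godel(u, v):
    """1 if u <= v, otherwise the value of the consequence v."""
    return ONE if u <= v else v

u, v = Fraction(4, 5), Fraction(1, 2)
print(impl_lukasiewicz(u, v), impl_godel(u, v))  # 7/10 1/2

half = Fraction(1, 2)
print(neg_lukasiewicz(half), neg_godel(half))    # 1/2 0
print(impl_lukasiewicz(half, half), disj(neg_lukasiewicz(half), half))  # 1 1/2

# On the binary values both implications coincide with "not u or v"
for a in (ZERO, ONE):
    for b in (ZERO, ONE):
        print(a, b, impl_lukasiewicz(a, b), impl_godel(a, b), disj(neg_lukasiewicz(a), b))
```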

Lies Power »

Question: Are there "waves of lies"?

Answer: It is possible to talk about the "ripple of truth" formally and mathematically very correctly, if we are able to stick to its abstract concept.

Louis de Broglie's idea (1924) that all matter has a wave nature is widely accepted in physics. It has recently been established that space itself "ripples", with the experimental confirmation (2015) of gravitational waves, which follow from the general theory of relativity (1915) and were predicted by it even before de Broglie's matter waves.

Mathematical forms such as numbers, tables, geometric shapes, designs of free networks and the like come from the immaterial world even when they are applied to the material world. In other words, periodic changes and waves in general are abstract rather than concrete in nature (they are not for touching, smelling, hearing or seeing).

Multi-logics are a "rough" but useful approximation when we talk about outcomes (estimates) whose truth we cannot accurately discern at a given time. In everyday life we are surrounded by such states, whether we are aware of them or not. The implication (if A, then B) is the more true, the less true the assumption (A) is relative to the conclusion (B). If this sentence sounds strange to you, read something about the algebra of logic and the post mentioned above (Multilogic).

So a less correct assumption makes the deduction more true, which conversely means that a "convincing" deduction can easily be a matter of big lies. This is consistent with the principle of information parsimony (of truth) in nature, or, I remind you, with the principle of least action in physics, as well as with the noticeable but misinterpreted fact that lies spread more easily than truths on social networks. A deduction inflated with lies seduces and attracts us by the power of its concealment of the truth.

Lies are hidden truths (Dualism of lies). This is all their strength of attraction, as in the physical fields of force, analogous in the journalistic media, but at the same time one of their significant weaknesses stems from that. Because they are deficient in truth (information, action), untruths have less "force" than uncertainty has (Action) and are more easily "defeated" by truth. They are therefore unstable, soluble in truth like snowflakes in strong sunlight.

If we follow the mentioned course of "floods" of lies and their "evaporation" in the face of the truth, which can come in shifts similar to the seasons, winter snows and summer heat, we can formalize them into cycles. These are real periodic phenomena of our daily communications, but, I emphasize, they should not be taken literally as waves of some given substance. They are closer to mathematical functions, such as electromagnetic waves from Maxwell's equations, Fourier series, or the Schrödinger and matrix quantum states and processes, than to concrete phenomena.

The new waves of logic do not come exactly from the world of the known equations of applied mathematical analysis, but the models we find there will seem to fit them. I derive this from the (hypo)thesis of the information structure of space, time and matter, according to which the stress and ripple of space itself (due to gravity) is, in its deepest essence, a story analogous to the spreading of lies on social networks. Moreover, de Broglie's waves, mentioned at the beginning of this answer, are of a similar nature.

By the way, the stories of truth and lies, as well as of good and evil, are ancient, and to emphasize that, I took the title picture (Father of Lies) from one of them. However, it is in the nature of science and mathematics to question themselves, so where they need to go further, I have not burdened this article with that content.

Kepler »

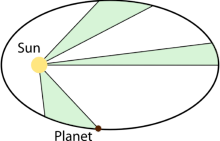

Question: Does Kepler's Second Law apply beyond gravity as well?

Answer: Yes, all constant central forces (directed toward a given center), besides gravity, move the corresponding charges so that the radius vector (the segment connecting the source of the force and the charge) sweeps out equal areas in equal times.

Namely, this is because that area is the equivalent of communication between them, and the law of conservation of information applies. This would, of course, be a new "hypothetical" explanation from (my) information theory, but the formal proof does not need it.

In short, these areas decompose into infinitesimal ones, $dA = \tfrac12 |\mathbf r \times d\mathbf r|$, half the magnitude of the vector product of the position of the charge ($\mathbf r$) and its displacement ($d\mathbf r$). The time derivative of $\mathbf r \times \mathbf v$, twice the rate of these small areas, gives two terms whose sum is zero, $\mathbf v \times \mathbf v + \mathbf r \times \mathbf a = 0$: the velocity ($\mathbf v$) is parallel to itself, and the acceleration ($\mathbf a$) lies along the radius vector ($\mathbf r$) of the charge's motion, while vector products of parallel vectors are zero. I'm paraphrasing.
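The area argument can be checked numerically. The sketch below (hypothetical force laws and initial conditions, units chosen freely) integrates planar motion under an inverse-square pull, a spring-like pull and no force at all, using the velocity Verlet method, and records the areal velocity dA/dt = (x v_y − y v_x)/2. A note on the design: for any central force this integrator keeps r × v unchanged up to rounding, mirroring v × v + r × a = 0 step by step.

```python
import math

def accel(x, y, k, power):
    """Central acceleration a = -k * r**power * r_hat (attractive for k > 0)."""
    r = math.hypot(x, y)
    mag = -k * r ** power
    return mag * x / r, mag * y / r

def areal_velocities(x, y, vx, vy, k=1.0, power=-2, dt=1e-3, steps=20_000):
    """Integrate with velocity Verlet; record dA/dt = (x*vy - y*vx)/2 after each step."""
    ax, ay = accel(x, y, k, power)
    rates = []
    for _ in range(steps):
        vx += 0.5 * dt * ax  # half kick: changes v only along r
        vy += 0.5 * dt * ay
        x += dt * vx         # drift: moves r along v
        y += dt * vy
        ax, ay = accel(x, y, k, power)
        vx += 0.5 * dt * ax  # second half kick
        vy += 0.5 * dt * ay
        rates.append(0.5 * (x * vy - y * vx))
    return rates

for label, k, power in (("inverse square", 1.0, -2), ("spring-like", 1.0, 1), ("no force", 0.0, 0)):
    rates = areal_velocities(1.0, 0.0, 0.0, 1.2, k=k, power=power)
    print(label, min(rates), max(rates))
```

In all three cases the swept area per unit time stays at its initial value 0.6; the "no force" run is the straight-line example with constant area hd/2 per interval.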

You will find details about this in my script "Notes", in the section "8. Central Movement", p. 30, continued in "10. Central Movement II", p. 36.

The absence of force is an example of this "Kepler's law". A material point (the charge) moves uniformly in a straight line past a given point O under zero force, covering equal distances |AB| = d in equal times. The distance h of the line of motion from the point O is also the height of the triangle ABO with base AB, so its area hd/2 is the same for equal times.

The absence of force is also a "constant force of a given center". The centrifugal force, or its opposite, the centripetal force, arising from rotation at a constant angular velocity ω, is a similar example. For a point rotating at radius r, the tangential speed is $v = |\mathbf v| = \omega r$, and the acceleration of the resulting forces, $a = \pm|\mathbf a|$, follows the known formula of circular motion $|\mathbf a| = v^2/r$; with the centripetal sign ($a = -v^2/r$) this agrees with the two-term formula $v^2 + ra = 0$, the time derivative of $\mathbf r \cdot \mathbf v = 0$ on a circle.

Constant central forces are created by constant rotation, so "Kepler's law" applies to them as well. However, the charge moves along a "conic section" (an ellipse, parabola or hyperbola, with the center of force at a focus) iff (if and only if) the force, besides being "constant central", decreases with the square of the distance. Such are the gravitational and electric forces.

When the above "two-term" formula is multiplied by the mass (m) of the charge, we get an expression that speaks of the constant sum of kinetic and potential energy ($mv^2 + rma = 0$). On the surface of the Earth, our heights (h) are negligibly small compared to the distance from the center (r), so we can say that the gravitational acceleration is approximately constant ($a = g \approx 9.8\ \mathrm{m/s^2}$) and that the potential energy is proportional to the height ($E_p = mgh$). When a body falls, it loses potential and gains kinetic energy ($E_k = mv^2/2$), so that the sum of the two is constant ($E_k + E_p = \text{const}$).

Force equals mass times acceleration ($F = ma$), and the work of a force along a path is energy ($E = Fx$), as we know from high-school physics, so here the work of the falling body equals its potential energy ($E_p = Fh$), or, after the fall, its kinetic energy. Adding up (integrating) all the increments of force in turn for different potentials, we get the known Newtonian gravitational force, which decreases with the square of the distance ($F = GMm/r^2$).
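The link between the near-surface rule E_p = mgh and the inverse-square force can be checked by adding up (integrating) F = GMm/r² numerically. The sketch assumes Earth's standard gravitational parameter and mean radius (approximate values), with a hypothetical 1 kg body raised 100 m; the integral, its closed form GMmh/(R(R + h)) and mgh agree to within about h/R ≈ 1.6·10⁻⁵.

```python
GM_EARTH = 3.986004418e14  # m^3/s^2, Earth's standard gravitational parameter
R_EARTH = 6.371e6          # m, mean Earth radius (approximate)

def force(r: float, m: float) -> float:
    """Newtonian attraction F = GMm / r^2 (magnitude)."""
    return GM_EARTH * m / r**2

def work_against_gravity(r1: float, r2: float, m: float, n: int = 10_000) -> float:
    """Add up (integrate) F dr from r1 to r2 with the midpoint rule."""
    dr = (r2 - r1) / n
    return sum(force(r1 + (i + 0.5) * dr, m) for i in range(n)) * dr

m, h = 1.0, 100.0                                      # 1 kg raised by 100 m (hypothetical)
g = GM_EARTH / R_EARTH**2                              # about 9.82 m/s^2
exact = GM_EARTH * m * h / (R_EARTH * (R_EARTH + h))   # closed form of the integral
numeric = work_against_gravity(R_EARTH, R_EARTH + h, m)
print(f"g = {g:.3f} m/s^2")
print(f"integral {numeric:.4f} J, closed form {exact:.4f} J, mgh {m * g * h:.4f} J")
```

The closed form is written as GMmh/(R(R + h)) rather than GMm(1/R − 1/(R + h)) to avoid subtracting two nearly equal numbers.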

In the mentioned script (Notes), I proved that the (constant central) force decreases with the square of the distance iff it moves the charges along conics (conic sections). As a continuation, it can be proved that the trajectories of the charge are conics iff the action of the forces discussed here propagates at the speed of light, and that the action of a force propagates at the speed of light iff the force decreases with the square of the distance. The latter, I think, is already known to physics.

Surface »

Question: Where do you see information like a surface?

Answer: Everywhere it makes sense to talk about "spaces". That I give priority to the mathematical ones does not mean much, as long as all the others can be consistent enough.

For example, among the former are vector spaces with many representations, such as oriented segments and polynomials, then the solutions of linear differential equations, or the particle-waves of quantum physics. In general, the spaces of linear algebra should be distinguished from the metric spaces of analysis, or the classical geometric ones, although they can have many similarities.

In that group, at least as I initially imagined it, belongs "Information of Perception". Namely, it is the scalar product (S = ax + by + cz + ...) of two vectors, the subjective (a, b, c, ...) and the objective (x, y, z, ...), of the participants in information transfer, i.e. interactions. The idea of this theory is that there is no "something" of which nothing else has any perception. And regardless of the number of components, two such (non-parallel) vectors span one "plane".
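As a small illustration in Python (hypothetical vectors and names): the perception information S is the scalar product, and the area of the parallelogram spanned by the two vectors, in any number of dimensions, follows from the Gram determinant |u|²|v|² − (u·v)². Relating that area to information is the text's idea; the code only computes the geometry.

```python
import math

def perception_information(subjective, objective) -> float:
    """S = a*x + b*y + c*z + ..., the scalar product of the two vectors."""
    return sum(a * x for a, x in zip(subjective, objective))

def spanned_area(u, v) -> float:
    """Area of the parallelogram spanned by u and v, in any number of dimensions.

    Uses the Gram determinant |u|^2 |v|^2 - (u.v)^2; it is zero for parallel vectors.
    """
    uu = perception_information(u, u)
    vv = perception_information(v, v)
    uv = perception_information(u, v)
    return math.sqrt(max(uu * vv - uv * uv, 0.0))  # clamp tiny negative rounding errors

subjective = [1.0, 2.0, 0.0, 1.0]  # hypothetical (a, b, c, d)
objective = [3.0, 0.0, 1.0, 2.0]   # hypothetical (x, y, z, w)
S = perception_information(subjective, objective)
print(S, spanned_area(subjective, objective))  # 5.0 and sqrt(59), about 7.681
```

Parallel vectors give zero area: they span only a line, not a "plane".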

Different examples are physical. Perhaps the most amusing of them arise from the resolution of Stephen Hawking's black hole paradox. Here the fall of a body into a black hole is more interesting than the radiation from it. From the position of a relative (distant) observer, the time of a body falling toward the event horizon runs ever more slowly, and its radial units of length shorten, so that in the limit time stops and lengths become zero. The body is stretched and clings across the black hole's surface, leaving its information as a new sphere around it.
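The slowing of time near the horizon, as seen from far away, is the standard Schwarzschild factor √(1 − r_s/r) for a clock held at rest at radius r. The sketch uses approximate constants and a hypothetical 10-solar-mass black hole; it does not describe the falling body's own clock, which does not stop, nor the stretching mentioned in the text.

```python
import math

G = 6.674_30e-11   # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0  # speed of light, m/s
M_SUN = 1.989e30   # solar mass, kg (approximate)

def schwarzschild_radius(mass_kg: float) -> float:
    return 2 * G * mass_kg / C**2

def static_clock_rate(r: float, mass_kg: float) -> float:
    """d(tau)/dt of a clock held at rest at radius r > r_s, as seen from far away."""
    return math.sqrt(1 - schwarzschild_radius(mass_kg) / r)

mass = 10 * M_SUN  # hypothetical 10-solar-mass black hole
rs = schwarzschild_radius(mass)
print(f"r_s = {rs / 1000:.1f} km")
for factor in (10, 2, 1.01, 1.0001):
    print(f"r = {factor} r_s: clock rate {static_clock_rate(factor * rs, mass):.4f}")
```

The rate tends to zero as r approaches r_s, which is the "time stops" seen by the distant observer.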

Another physical example would be the generalization of Kepler's second law, or the virtual sphere (Fields). The latter is the state of quantum entanglement in the interpretation of information theory.