March 2022 (Original ≽)

Gloves »

Question: What is the EPR paradox?

Answer: The Einstein-Podolsky-Rosen paradox (EPR paradox) is a thought experiment proposed by physicists Albert Einstein, Boris Podolsky, and Nathan Rosen (1935), who argued that the description of physical reality given by quantum mechanics was incomplete.

In their discussion of whether the quantum-mechanical description of physical reality can be considered complete (Physical Review), they consider two physical systems, I and II, which interact from time t = 0 to t = T, after which it is assumed that there is no further interaction between the two parts. The state of the systems before t = 0 is assumed to be known. Then, from the Schrödinger equation, one can calculate the state of the combined system I + II at any later time, including t > T; call the resulting wave function Ψ.

However, it is not possible to calculate the state in which either of the two systems is left after the interaction. According to quantum mechanics, this can be done only with the help of further measurements, through a process called "reduction of the wave packet". A short description follows.

Let a1, a2, a3, ... be the eigenvalues of some physical quantity A pertaining to system I, with corresponding eigenfunctions u1(x1), u2(x1), u3(x1), ..., where x1 stands for the variables used to describe the first system. This is the terminology of linear algebra as used in quantum mechanics: Au = au means that the action of the operator A (a quantum process) on the vector u (a quantum state) returns the same vector, only multiplied by the number a. The eigenvalue a is the value a measurement can yield, while the probability of obtaining it is the squared modulus of the amplitude with which u appears in the state.

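Then Ψ, regarded as a function of x1, can be written in the form

$$\Psi(x_1, x_2) = \sum_{n=1}^{\infty} \psi_n(x_2)\, u_n(x_1),$$

where the functions ψn(x2) are simply the coefficients of the expansion of Ψ in the series of orthogonal functions un(x1).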

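The set of functions un(x1) is determined by the choice of the physical quantity A. If we choose another quantity B, with eigenvalues b1, b2, b3, ... and eigenfunctions v1(x1), v2(x1), v3(x1), ..., the previous equation becomes

$$\Psi(x_1, x_2) = \sum_{s=1}^{\infty} \varphi_s(x_2)\, v_s(x_1),$$

where the functions φs(x2) are the new expansion coefficients.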

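If A is measured and found to have the value ak, the first system is left in the state uk(x1), and the second in the state given by the k-th coefficient function of the first expansion; if B is measured instead and found to have the value br, the first system is left in vr(x1), and the second in the state given by the r-th coefficient function of the second expansion. Therefore, depending on which of the two measurements is made on the first system, the second can be left in states with two different wave functions. On the other hand, since the two systems no longer interact at the time of these measurements, no real change can take place in the second system as a consequence of anything done to the first. This is the EPR paradox, or, as we call it today, "quantum entanglement".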
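A minimal numerical sketch of this step, assuming the simplest entangled example instead of the continuous variables of the EPR paper: two two-state systems in the spin singlet, with quantity A taken as spin along z and quantity B as spin along x (both choices are illustrative assumptions, as are all names below). Projecting system I onto an eigenvector of the chosen quantity yields the state left in system II.

```python
import math

R = 1 / math.sqrt(2)

# Amplitudes psi[i][j] of the assumed two-system state sum_ij psi[i][j] |i>_I |j>_II
# (the spin singlet; all amplitudes are real, so no complex conjugation is needed).
PSI = [[0.0, R],
       [-R, 0.0]]

BASIS_A = [(1.0, 0.0), (0.0, 1.0)]  # eigenvectors u_n of quantity A (spin along z)
BASIS_B = [(R, R), (R, -R)]         # eigenvectors v_s of quantity B (spin along x)


def state_of_second(psi, u):
    """Project system I onto eigenvector u; return (normalized state of II, probability)."""
    amp = [sum(u[i] * psi[i][j] for i in range(2)) for j in range(2)]
    prob = sum(a * a for a in amp)
    norm = math.sqrt(prob)
    return tuple(a / norm for a in amp), prob


for name, basis in (("A", BASIS_A), ("B", BASIS_B)):
    for k, u in enumerate(basis):
        state, prob = state_of_second(PSI, u)
        print(f"measure {name}, outcome {k}: p = {prob:.2f}, system II -> {state}")
```

Measuring A leaves system II in (0, 1) or (-1, 0); measuring B leaves it in (-R, R) or (R, R). The untouched second system thus ends up with different wave functions depending only on which quantity was measured on the first, which is the situation described above.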

Einstein sought a way out of this paradox by questioning the mathematical foundations of quantum mechanics (linear algebra), or at least by believing that there must be some "hidden parameters" yet to be found.

He illustrated the problem with a pair of gloves: one is placed in an unmarked box on the ground and the other is sent very far away, without anyone knowing which glove went into which box. On opening the box on the ground and finding, say, the left-hand glove, we immediately know that the other box contains the right-hand glove, although there are no additional measurements or interactions between the boxes, no matter how far apart they are (even so far that light could not cover the distance in a short enough time).

Almost thirty years later (1964), John Bell challenged Einstein's idea of hidden parameters by proving that such (local) parameters lead to a contradiction with quantum mechanics, and in the following decades experiments confirmed the "quantum entanglement" that the EPR thought experiment had considered impossible.

Question: In this explanation of the EPR paradox (quantum entanglement), it is not clear to me how replacing the quantity A with B, the first eigenvalues with the second, achieves the "impossible" action of the first system on the second?

Answer: You should also read the original Einstein-Podolsky-Rosen article, from which their argument is drawn here. Take, for example, two particles, A and B, that interact briefly and then move off in different directions, with no further interaction.

According to Heisenberg's uncertainty relations, it is impossible to measure both the momentum and the position of particle B exactly, but it is possible to measure the exact position of particle A, and from it the exact position of particle B can be calculated. Alternatively, it is possible to measure the exact momentum of particle A and from it calculate the exact momentum of particle B. The paradox is that in this way we could seemingly know exact values of both the position and the momentum of B.

Question: What is the answer of information theory to the EPR paradox?

Answer: That is a crucial question for that theory. It requires reconsidering the notion of "reality", and this goal is supported by the proof of the dimensions of physical space-time (Dimensions), i.e. of the additional dimensions of time. The core of resolving the EPR paradox is accepting the fundamental uncertainty of this world and its texture, built predominantly of information. In such a world, the dominant relationship is the one between the subject and the object of communication, and not, say, that of some constant, unchanging matter.

In short, if subject A can (though it need not) communicate with B, then the two are mutually "directly real." If B can in turn communicate with C, then A and C are at least "indirectly real" to each other, not necessarily directly. Any further "reality", say of A with D when C can communicate with D, is at least an "indirect reality". This background comes in a package with the additional dimensions of time mentioned above.

Call these "indirect realities" whatever you want, but notice that they are not just "parallel realities", nor the "many worlds of quantum mechanics", nor "pseudo-realities", nor, more simply, different universes of a "multiverse", although they are very similar to them. They are original, an idea still in development, and it is hard to believe that anyone has already guessed it correctly.

For example, the past is a kind of indirect reality with which the present has no direct communication, only a one-way flow of information (interaction). Dark matter could (at least in part) be another, so far "undiscovered", channel of gravitational interaction from the past to the present. However, there are also parallel realities beyond the past and the present.

This is what the EPR paradox, or quantum entanglement (Surface), is about. When, for the coupled quantum states A and C, there is an indirectly "real" state B with which both communicate directly, i.e. to which both are directly real, then it does not matter how far apart A and C are physically in any of their presents.

More precisely, if A and C are simultaneous in relation to some B, then changes to A can be simultaneously reflected in C, although from some other position (present) this "simultaneity" may be questionable.

Bell »

Question: What does Bell's theorem (1964) talk about?

Answer: In 1964, the Northern Irish physicist John Bell derived a mathematical proof that certain quantum correlations, unlike other correlations in the universe, cannot result from any local cause (The Theorem).

This theorem has become central to both quantum information science and metaphysics. Even after 50 years, scientists and philosophers still disagree about its exact meaning; they keep experimenting and theorizing, and find that tests of the quantum correlations it describes still have "loopholes" (Bell’s theorem reverberates).

Physical phenomena whose effects spread no faster than the speed of light obey local causality. Typical examples are relativistic situations, or, say, deciding (by free will) to turn on a radio whose signal is received on the Moon about 1.3 seconds later (the time light needs to get there).
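The 1.3-second figure is simple arithmetic: distance divided by the speed of light. A small sketch, assuming the mean Earth-Moon distance of about 384,400 km (the actual distance varies along the Moon's orbit):

```python
C = 299_792_458              # speed of light in vacuum, m/s (exact by SI definition)
MOON_DISTANCE = 384_400_000  # mean Earth-Moon distance in m (approximate)


def light_delay(distance_m):
    """Time in seconds for a light (radio) signal to cover distance_m."""
    return distance_m / C


delay = light_delay(MOON_DISTANCE)
print(f"Earth to Moon: {delay:.2f} s")  # about 1.28 s, i.e. roughly 1.3 s
```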

Correlations that have an explanation are also local. For example, a similar opinion may suddenly emerge in two people who become concerned about the war in Ruritania after reading the same newspaper, although they may be very far apart when they read it. Such less obvious local causes are said to follow from the "principle of common cause".

When we go one step further into the unknown, into our inability to know the "common cause" because of insufficient information, and then further still, into the inability to know any possible cause at all, we come to phenomena whose effects we might think spread faster than light. This is the "spooky action at a distance", as Einstein called it in connection with the "EPR paradox" (Gloves).

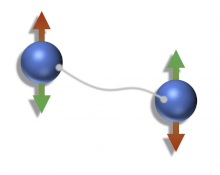

In his famous theorem (see above), Bell considers a pair of spin-½ particles that are entangled and then fly apart so that their total spin remains zero. The "common cause" mentioned above is then the law of conservation of spin, and the uncertainty lies in not knowing which of the two particles has spin +½, from which we could draw a conclusion about the spin of the other.

Bell introduces hidden parameters λ into the calculation of probabilities and shows that correlations predetermined by them must satisfy an inequality that the predictions of quantum mechanics violate. Einstein's idea of (local) "hidden parameters" thus proves contradictory, and an "instantaneous transmission at a distance" appears possible when measuring quantum entangled systems.
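The constraint that predetermined parameters impose can be illustrated with the CHSH form of Bell's inequality (a later variant, chosen here as an assumption since the text does not name a specific form). If λ fixes in advance the outcomes ±1 for two detector settings on each side, every single λ gives a CHSH sum of exactly ±2, so any probability mixture over λ stays within [-2, 2]. A minimal sketch; the helper names are hypothetical:

```python
from itertools import product


def chsh(a0, a1, b0, b1):
    """CHSH combination E(a,b) - E(a,b') + E(a',b) + E(a',b') for fixed outcomes ±1."""
    return a0 * b0 - a0 * b1 + a1 * b0 + a1 * b1


# Each hidden parameter λ is modeled as one predetermined assignment of the four outcomes.
STRATEGIES = list(product((1, -1), repeat=4))


def deterministic_values():
    """Set of CHSH values reachable by a single deterministic λ."""
    return {chsh(*s) for s in STRATEGIES}


def mixed_chsh(weights):
    """CHSH value for a probability distribution p(λ) over the deterministic strategies."""
    if abs(sum(weights.values()) - 1) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(p * chsh(*s) for s, p in weights.items())


print(sorted(deterministic_values()))  # [-2, 2]
```

Since every λ contributes ±2, averaging cannot leave the interval [-2, 2]; quantum mechanics predicts values up to 2√2 for suitable settings.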

Question: Do you have an explanation from the point of view of "information theory"?

Answer: Yes, it is the already mentioned "step further into the unknown", followed by the elaboration of that idea. The point is that the existence of a "common cause", like the existence of any cause, reduces uncertainty, and with it information.

Therefore, this instantaneous "remote" transmission is not a transmission in the true sense, neither of information nor of action. It is a pseudo-transmission, a degree of hidden information, i.e. an "indirect reality", which brings us to the explanation of quantum entanglement I gave in the previous answer. Let me summarize.

Quantum entanglement of, say, two states A and B arises when they are simultaneous with respect to some third state C, which may or may not be directly real to the observer (experiment).

Thus, a pair of gloves in two unmarked boxes is, before their separation, preceded by the "simultaneity" of the moment they were packed. The reading of the news about the war in Ruritania was preceded by the printing of the newspaper, i.e. by the editor's decision to write about that war. The divergence of particles with opposite half-spins was preceded by the event of their entanglement at a given place and moment.

Looking even further, in terms of (my) information theory, since this pseudo-cause is not a cause in the classical physical sense, the quantum correlations discussed in Bell's theorem (1964) do not arise from "any" local cause.

Confidential »

Question: Do you have any knowledge of quantum entanglement that you would not tell anyone?

Answer: Of course! In science, these are "failed" attempts, and who knows whether they really are. Then I have hypotheses that are almost reliable, and speculations I expect soon to be sure of, but somehow they are not for the public.

Instead, I would rather tell you something about certainty that perhaps I should have said before and did not.

Reading the previous two answers (Gloves, Bell), you may have noticed that certainty comes from simultaneity, and chance from the passage of time. Thus, universal regularities, such as the law of conservation, the minimalism of information, and even multiplicity, will emerge from a "common cause" in some past, or from a pseudo-reality in general, from another time, and leave a lasting mark on our present. Digest that, and let's move on! (the correspondence was private)

The virtual sphere of a photon around an electron (revised Feynman diagram) is an example of simultaneity. Let us generalize it into a model of some universal law, especially if we let the "sphere" expand indefinitely. It is 2-D, as is information, while space is 3-D, so besides a bundle of similar spheres there is also room for additional deviations from natural laws, for so-called re-creation (Growing).

Developing this (hypo)thesis further, we would come to questions about the "space" from which such "spheres" come, and then to speculation that our universe, its space-time, could have more than six dimensions. But I would tell you about that later, perhaps when that topic is no longer one of those I "would not tell anyone".

Sameness »

Question: Why can't people be equal and everyone love each other?

Answer: Ha, ha, how pathetically naive. Try to see the beauty of multiplicity: in the difference between men and women, in the variety from success to failure, in victories won through effort and reward, in the happiness of achievement (Standing Out).

The goal of life is not to die as soon as you are born, nor is the goal of mathematics to see the solution the moment you hear the problem; it is what lies in between. If you have not experienced diversity, it is as if you had not lived; you did not "exist" if you just followed like a sheep.

Try not to be submissive or to demand uniformity from everyone, but recognize the charm of this world, where birds fly, fish dive, grass grows, where some inhale oxygen and exhale carbon dioxide and others do the reverse. It lies in the fact that no one has yet listed all the existing forms of life on this planet, and no one will ever list all possible ones.

In short, the world exists because of diversity; it runs away from equality, and that is a non-negligible reality. This is because information is the fabric of space, time, and matter, with uncertainty at its essence. Repeated "news" is no longer news, and yet there is no way for something that exists to stop existing (the law of conservation). Finally, more probable things happen more often, and they are less informative.
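The last sentence agrees with the standard (Shannon) measure of the information of a single outcome, I(p) = log2(1/p): a certain, endlessly repeated outcome (p = 1) carries 0 bits, and the more probable an outcome, the fewer bits it carries. A small sketch, assuming bits as the unit (the text does not fix a base) and a hypothetical helper name:

```python
import math


def self_information(p):
    """Information, in bits, carried by an outcome of probability p (0 < p <= 1)."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return math.log2(1 / p)


for p in (1.0, 0.5, 0.25, 0.01):
    print(f"p = {p:<5} -> {self_information(p):.2f} bits")
```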

Question: How is it possible for everything to change constantly, and yet not disappear or, allegedly, diminish?

Answer: Everything flows, everything changes (Heraclitus, around 500 BC); details cannot last, yet the totals always stay the same. That is the miracle of this world, but so far information theory handles it well. For that reason alone, do not expect my explanation to be easy to digest.

First, it is possible to "progress" constantly (Chasing tail) in the dark, where no subject can have everything and is therefore forced to communicate. This is a world of layers of uncertainty, and some of those layers are at your fingertips. So as not to repeat the explanation from the link, let us imagine a tape, a belt, joined into a circular loop, with arrows all pointing in the same direction that never stop their whirl.

Imagine a wider environment of the tape that is constantly changing, relative to which the arrows keep becoming different. Moreover, if the time of such an environment were to slow down permanently relative to some fixed moment (event) of its past, we would have truly irreversible changes. That something similar is happening to us is the main (hypo)thesis of the information theory I am currently working on (Growing).

Question: I recognize this in the "melting" of substance into space, that is, in the constant growth of the entropy of substance, and in the "suppression" of information from the past that pushes the present towards the future. Is it a "battle" that uncertainty loses to certainty?

Answer: Yes, from that point of view, reducing information means losing options: less uncertainty, that is, more predictability, regularity, order. Only uncertainty needs the passage of time, while a single (never-ending) present (Confidential) suffices for certainty. Together with the above, this continuation is also consistent with the fact that in the deficit of information, which otherwise cannot "live" without change, we find something ubiquitous, such as rules, canons, or natural laws.

In the concept of quantum-bound information, (energy) × (time) = const, where certainty is a kind of limiting uncertainty, ideas of zero energy will have unlimited duration. Besides some natural laws, I consider the possibility that mathematical statements (theorems) could be of this kind.

Graph »

Question: I don't understand those "limitations of deduction": how does it "travel the static world of truth, like a train on a previously laid network of iron rails", and how do you connect it with "game theory" (from a private conversation)?

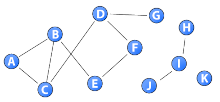

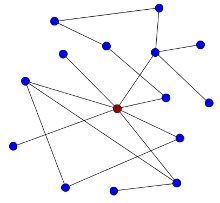

Answer: Imagine a simple graph of nodes and links which, as in the picture on the left, consists of several separate parts. Nodes A-G are all connected into one group, the second group is I-J-H, and the third is the single node K. There are no links between these groups.

With deduction (if … then) we can travel along links from node to node, but we cannot move from one group to another when they are not connected. That is a simple visual explanation of the difficulties, that is, of the limitations of deduction. Note that the mere existence of a difficult path can be an insurmountable obstacle for those who follow the line of least resistance.
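If each deductive step is read as following one link, the limitation above is graph reachability: breadth-first search from a node finds everything deducible from it and nothing outside its group. A sketch under a stated assumption: the text does not list the figure's exact links, so the group A-G is modeled as a hypothetical chain, I-J-H as a short chain, and K as isolated.

```python
from collections import deque

# Hypothetical links: the figure's exact edges are not given in the text.
NODES = list("ABCDEFGHIJK")
EDGES = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "E"), ("E", "F"), ("F", "G"),
         ("I", "J"), ("J", "H")]


def build_graph(nodes, edges):
    """Undirected adjacency sets: each link can be followed both ways."""
    graph = {n: set() for n in nodes}
    for u, v in edges:
        graph[u].add(v)
        graph[v].add(u)
    return graph


def reachable(graph, start):
    """All nodes reachable from start by following links (breadth-first search)."""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def components(graph):
    """Split the graph into its separate groups (connected components)."""
    left, groups = set(graph), []
    for node in sorted(graph):
        if node in left:
            group = reachable(graph, node)
            groups.append(sorted(group))
            left -= group
    return groups


g = build_graph(NODES, EDGES)
print(components(g))             # [['A', 'B', 'C', 'D', 'E', 'F', 'G'], ['H', 'I', 'J'], ['K']]
print("K" in reachable(g, "A"))  # False: no chain of steps leads from A to K
```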

Such a situation, only much more complex, arises with methods of "painless and effortless" acquisition of knowledge and skills, or of success in life, as Erich Fromm once noticed (The Art of Loving, 1989). That doctrine hinders people from learning the skills of living, because it gives them the false idea that everything, even the most difficult tasks, should be mastered with little or no effort, he wrote.

Let us now note how close these ideas are to the "information of perception". When we say "no pain, no gain", or "per aspera ad astra" (through hardships to the stars), we are actually talking about higher "levels of play", where we oppose greater temptations with more initiative and lesser ones with less. Here we come to the division I talked about when asked about game theory (Win Lose).

The first league, the most successful at winning games, consists of the tactics of "reciprocity" (Tit-for-tat), which has become known relatively recently in game theory itself, and which in (my) information theory follows from the known maximum of the information of perception at a given moment. The second league would be a group of winning games that we can call "sacrifice for success" (lose-lose), because they irresistibly remind us of the previous explanation; they would be all the tactics between the first and third leagues. The third league would be "win-win", i.e. the skill of compromise, or politicking.
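Reciprocity can be simulated in an iterated prisoner's dilemma. The sketch below assumes the common payoff values 3, 0, 5, 1 (mutual cooperation, sucker, temptation, mutual defection) and ten rounds; both are illustrative choices, and the strategy names are hypothetical.

```python
# Standard prisoner's-dilemma payoffs (an assumption): (row player, column player)
PAYOFF = {("C", "C"): (3, 3), ("C", "D"): (0, 5),
          ("D", "C"): (5, 0), ("D", "D"): (1, 1)}


def tit_for_tat(own, other):
    """Cooperate first, then copy the opponent's previous move."""
    return other[-1] if other else "C"


def always_defect(own, other):
    return "D"


def always_cooperate(own, other):
    return "C"


def play(strategy1, strategy2, rounds=10):
    """Play an iterated game and return the two total scores."""
    hist1, hist2 = [], []
    score1 = score2 = 0
    for _ in range(rounds):
        m1, m2 = strategy1(hist1, hist2), strategy2(hist2, hist1)
        p1, p2 = PAYOFF[(m1, m2)]
        score1, score2 = score1 + p1, score2 + p2
        hist1.append(m1)
        hist2.append(m2)
    return score1, score2


def tournament(population, rounds=10):
    """Round robin without self-play; returns each entrant's total score."""
    totals = [0] * len(population)
    for i in range(len(population)):
        for j in range(i + 1, len(population)):
            s_i, s_j = play(population[i], population[j], rounds)
            totals[i] += s_i
            totals[j] += s_j
    return totals


print(play(tit_for_tat, always_defect))  # (9, 14)
print(tournament([tit_for_tat, tit_for_tat, tit_for_tat, always_defect]))  # [69, 69, 69, 42]
```

Tit-for-tat never outscores its opponent in a single match (9 against 14 above); it comes out ahead on total score when enough of the population reciprocates, so the ranking depends on who is playing.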

Lies, spreading misinformation, traps, and intrigues, added to many of these tactics, can raise them to a slightly higher level, but not by much (Sneaking). They are like special tools for special purposes, useless outside their intended environment. On the other hand, lies, as suppressed information, are attractive because of the principle of minimalism, but they are short-lived because of that same deficit of information, dissolving before the bare truth.

The problem with deduction is similar. Unlike guessing, association, or proof by contradiction, it needs bridges across abysses, smoother crossings, and can therefore easily be cut off from greater achievements, much like playing with lower-level tactics.

An example of such a leap of knowledge in modern physics, which could hardly have been reached by deduction alone, is quantum mechanics in general, and quantum entanglement in particular. In the evolution of species on Earth, the appearance of humans would be an example of a leap of intelligence that enabled us to see the world around us in an exact, scientific way.

These and similar achievements also demonstrate increased vitality through the information of perception. I hope that is obvious; if not, remind me and we will discuss it on another occasion.

Inequality »

Question: What is Bell's inequality?

Answer: Bell's original theorem (Bell, 1964) has taken many forms over time, both for testing in experiments and for an easier understanding of its essence (Information Stories, 3.10 Bell Inequality).

The simplest such situation is the separation of entangled particles with total spin zero. The picture on the right shows an example of such a pair, electrons drawn as tiny magnets (ferromagnets). In quantum mechanics, coupled electrons are visualized as joined by a filament, so that "turning up" the left electron (red) causes the other electron to "turn down" (red), and vice versa (green).

This is the spin of an electron (±½), whose total after entanglement remains zero, as follows from the law of conservation of spin. From the uncertainty relations we further know that before the measurement (passage through a magnetic detector) there is no way to know the exact spin of an individual electron; in fact, no such definite value exists. Information theory explains measurement as communication between the subject and the object of measurement. After that exchange of uncertainties, the properties of the electron become real.

Unlike official physics (Bell's theorem), here we view "quantum entanglement" as a kind of "simultaneity" of these two electrons. A measurement on one is at the same time a measurement on the other, so the total spin is always zero. Therefore, there is both the uncertainty of measuring the spin of the first electron and the uncertainty of the spin of the second. However, the notion of simultaneity differs for the subject and the object.

Now imagine a more complex quantum entangled system of particles that are simultaneous for themselves, but not simultaneous for the external observer (measuring apparatus). When an outside observer uses some algebraic inequality, which would hold if the variables were independent, it need not hold in an application where that independence does not exist. This is what makes these experiments seem "strange" (Quantum Entanglement).
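For comparison, the standard quantum-mechanical calculation (not the information-theoretic reading above) gives the singlet correlation E(a, b) = -cos(a - b) for spin measurements along directions a and b. Local predetermined values keep the CHSH combination within |S| ≤ 2, while the singlet reaches 2√2. A minimal sketch, assuming measurement directions in the x-z plane and the usual CHSH angles 0, π/2 for one side and π/4, 3π/4 for the other:

```python
import math


def spin_op(theta):
    """Spin observable (eigenvalues ±1) along angle theta in the x-z plane."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]


def kron(m1, m2):
    """Kronecker product of two 2x2 matrices, giving a 4x4 matrix."""
    return [[m1[i // 2][j // 2] * m2[i % 2][j % 2] for j in range(4)] for i in range(4)]


R = 1 / math.sqrt(2)
SINGLET = [0.0, R, -R, 0.0]  # (|01> - |10>)/sqrt(2) in the basis |00>, |01>, |10>, |11>


def correlation(a, b, state=SINGLET):
    """Expectation value <state| A(a) (x) B(b) |state> for real amplitudes."""
    op = kron(spin_op(a), spin_op(b))
    image = [sum(op[i][j] * state[j] for j in range(4)) for i in range(4)]
    return sum(state[i] * image[i] for i in range(4))


def chsh(a0, a1, b0, b1):
    """CHSH combination E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return (correlation(a0, b0) - correlation(a0, b1)
            + correlation(a1, b0) + correlation(a1, b1))


S = chsh(0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(f"|S| = {abs(S):.4f}, local bound 2, 2*sqrt(2) = {2 * math.sqrt(2):.4f}")
```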

Quantum »

Question: What could be a "quantum", as you explain it?

Answer: Physically, a quantum is expressed as the product of the change in energy and the time interval, ΔE Δt = h, or of the change in momentum and the path, Δp Δx = h, where h ≈ 6.626 × 10⁻³⁴ m² kg/s (J·s) is the Planck constant in both of these numerical interpretations (Quantum Physics).
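Read as an equality, the relation ΔE Δt = h lets us estimate the energy of one quantum for a given duration. A minimal sketch; note that the standard Heisenberg relation is an inequality, ΔE Δt ≳ ħ/2 with ħ = h/2π, so this illustrates the text's convention at the order-of-magnitude level, and the one-femtosecond duration is an arbitrary example.

```python
import math

H = 6.62607015e-34        # Planck constant in J*s (= m^2 kg/s), exact in the 2019 SI
HBAR = H / (2 * math.pi)  # reduced Planck constant
EV = 1.602176634e-19      # joules per electronvolt, exact in the 2019 SI


def energy_quantum(dt_seconds):
    """Energy change paired with duration dt under the text's convention dE * dt = h."""
    return H / dt_seconds


DT = 1e-15  # hypothetical duration: one femtosecond
dE = energy_quantum(DT)
print(f"dE = {dE:.3e} J = {dE / EV:.2f} eV")
print(f"standard bound hbar/(2 dt) = {HBAR / (2 * DT) / EV:.2f} eV")
```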

Informatically, for example, a quantum is an elementary physical event, the smallest outcome (of random events) transferable through physical space-time; that is, it is a present. This definition connects easily with the one above, among other things because of how (my) information theory interprets quantum entanglement.

Two particles (molecules, atoms, electrons) need not have their own "simultaneity"; they can behave like the physical bodies we know (some time passes while light travels from one end to the other). If they do have it, however, they are "quantum entangled". As an example of such simultaneity, I mentioned the "virtual sphere" (the photon of Feynman diagrams) that expands from the electron at its center. Its wavelengths do not change, and the momentum it transmits to the second charge is constant, but its amplitudes decrease, so the probability of transmission, i.e. of interaction, decreases.

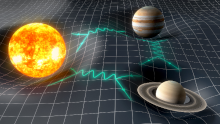

However, we can imagine a similar simultaneity even for the largest bodies, say stars. Such is the sphere of a gravitational wave propagating from the center of gravitational force. At each moment of the series, the whole sphere is a "virtual graviton", and it remains so until an eventual interaction with another body on which it can act gravitationally, if that action occurs (Fields).

Question: How do we know that a quantum exists?

Answer: Besides Planck's well-known ideas about black-body radiation (Planck's law, 1900), Einstein's photoelectric effect (1905), quantum mechanics, and further areas of physics, there are less well-known views (mine) from information theory. The first I will mention concerns the meaning of time, the second the law of conservation, and the third introduces certainty as the absence of uncertainty.

If there were no (flowing) time but only one present (space and matter), the world would be reduced to a single total causality. All events (ever) would exist at the same time, inseparable in terms of cause and effect. The second quantum mentioned above, the product of changes in momentum and position, could then still make sense even if we considered both factors infinitely large. The first, which involves time, would not fit into such a world.

Because time flows and there are different presents, our space-time is 6-D (Dimensions). But the flows of the present, its transitions from the past to the future, are subject to conservation laws. Therefore, they must be bounded from below, finitely divisible, or, say, discrete, that is, at most countably infinite (like the set of natural, integer, or rational numbers). Otherwise, they would be infinitely divisible, and infinities could be added or subtracted at will, so that the conservation laws would not apply.

Third, information is a measure of uncertainty. It is a quantity whose real values we can order by size. That is why it appears as a complex phenomenon that can be broken up and dissolved, but its smallest amounts are, in the end, packets of pure uncertainty. By subtracting uncertainty from that smallest packet, the quantum of information, we would separate parts with less uncertainty, that is, certainty would reappear (Packages).

This third statement would be a reason to seriously consider "parts of the quantum" of information, which would then form a world of infinitely divisible quantities, but that is a separate story. The second, combined with Planck's quantization, would tell us a lot, both about the "presents" of our surroundings and about the "energy" of abstract ideas. All three of these are at the foundations of "information theory".

Pseudoreal »

Question: Can we communicate with pseudo-reality?

Answer: No, at least not in the usual sense of "communication" as we understand it within reality. But yes in a way such as quantum entanglement.

Namely, of several options of the present, one will be realized as the new present, and the rest, together with the previous state, will go into pseudo-realities. Note that what we call the present, or "now", is a kind of quantum entanglement. I have explained this (hypo)thesis in the answers above.

If we could divide the duration of the whole universe into past and future by a single present, nature would be causally ordered (Dimensions). But since that is not the case, we have as many dimensions of time as of space, a new theory of information, and objective uncertainty.

Dividing all the time of the universe into one past and one future by a single present would be possible without movement, or if there were only two systems moving uniformly in a straight line relative to each other. However, there are many systems moving in space, and there is gravity, within which it is not possible to define a single present for a material point (body) at a given position away from the center of the field.

It was mentioned above that the sphere of virtual waves of a field forms one present during each step of its expansion and is therefore quantum entangled. This is not the case with material points anywhere within a gravitational field. It would be so, however, if we could observe their "present" as they "see" it, spreading through parallel realities in ways inaccessible to relative observers.

The transfer of information between different parallel realities is impossible, because it would violate the law of conservation. Therefore, when we toss a coin while sitting in an armchair, staying seated if the outcome is "tails" and going to another room if it is "heads", after the outcome is realized we cannot be in both rooms, nor in neither.

The movement of matter in space and time is a kind of information transfer and communication, while the reactions of quantum entanglement are not. The transfer of information changes (transfers) energy, and the instant of the present changes, while in quantum entanglement the alleged change occurs at (some) same moment, which the relative observer (the lab technician and his equipment) sees as different moments; hence the phenomenon itself appears as a "strange" synchronization.

The problem of interpreting pseudo-reality, like that of the many worlds of quantum mechanics (Everett, 1957) or of parallel realities, thus reduces to a deeper understanding of the "present", and at the same time of "quantum entanglement".

Centroid »

Question: How can simultaneity be broken?

Answer: Communication with a part of a "simultaneous system" of particles sets that part apart from the rest of the system. Put a little old-fashionedly, interaction with one part breaks the simultaneity of the whole. But let us step a little further into (perhaps) future interpretations.

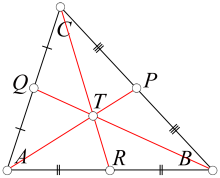

The figure on the left shows an arbitrary triangle ABC with medians AP, BQ, and CR, the segments joining each vertex to the midpoint of the opposite side. The intersection of the three medians is the center of gravity of the triangle (Centroid), point T.

Triangles ARC and BRC have bases of equal length and the same height, so they have equal areas. Similarly, triangles ART and BRT have equal areas, and subtracting them from the previous pair shows that triangles ATC and BTC also have equal areas. Continuing in this way, we arrive at the well-known statement that the medians divide a triangle into six triangles of equal area.
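The six-equal-areas statement is easy to check numerically with the shoelace formula. A short sketch, using an arbitrary example triangle and labeling the midpoints as in the figure (P on BC, Q on CA, R on AB); the helper names are hypothetical:

```python
def area(p, q, r):
    """Unsigned area of triangle pqr (shoelace formula)."""
    return abs((q[0] - p[0]) * (r[1] - p[1]) - (r[0] - p[0]) * (q[1] - p[1])) / 2


def midpoint(p, q):
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def six_median_areas(A, B, C):
    """Areas of the six triangles into which the medians AP, BQ, CR cut triangle ABC."""
    T = ((A[0] + B[0] + C[0]) / 3, (A[1] + B[1] + C[1]) / 3)  # centroid
    P, Q, R = midpoint(B, C), midpoint(C, A), midpoint(A, B)  # midpoints opposite A, B, C
    return [area(A, R, T), area(R, B, T), area(B, P, T),
            area(P, C, T), area(C, Q, T), area(Q, A, T)]


print(six_median_areas((0.0, 0.0), (7.0, 1.0), (2.0, 5.0)))
# [2.75, 2.75, 2.75, 2.75, 2.75, 2.75]  (one sixth of the total area 16.5)
```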

This triangle is a model of "simultaneity", and then of "quantum entanglement" (Pseudoreal), as well as of "parallel realities". It can also be found in Kepler's second law, which holds for central forces in general. This concerns (my) informatic interpretation (Kepler), according to which the radius vector of a charge in motion sweeps out an area equivalent to the action of the given force on the charge.
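Kepler's second law itself (not the informatic interpretation, which is the author's) can be checked numerically: under any central force the radius vector sweeps equal areas in equal times, because the angular momentum r × v is conserved. The sketch assumes unit mass, arbitrary initial conditions, and a kick-drift-kick leapfrog integrator, which preserves r × v exactly apart from rounding; it tries an inverse-square attraction and a linear (spring) attraction.

```python
import math


def swept_areas(force=lambda r: 1.0 / r**2, steps=2000, dt=1e-3,
                pos=(1.0, 0.0), vel=(0.0, 1.2)):
    """Area swept by the radius vector in each time step under a central force.

    force(r) is the magnitude of the attraction toward the origin per unit mass.
    """
    def accel(x, y):
        r = math.hypot(x, y)
        f = force(r)
        return -f * x / r, -f * y / r

    x, y = pos
    vx, vy = vel
    ax, ay = accel(x, y)
    areas = []
    for _ in range(steps):
        vx, vy = vx + 0.5 * dt * ax, vy + 0.5 * dt * ay  # half kick
        nx, ny = x + dt * vx, y + dt * vy                # drift
        areas.append(0.5 * (x * ny - nx * y))            # triangle O, r_n, r_(n+1)
        x, y = nx, ny
        ax, ay = accel(x, y)
        vx, vy = vx + 0.5 * dt * ax, vy + 0.5 * dt * ay  # half kick
    return areas


for label, law in (("inverse square", lambda r: 1.0 / r**2), ("linear (spring)", lambda r: r)):
    a = swept_areas(law)
    print(f"{label}: min {min(a):.12f}, max {max(a):.12f}")
```

Both runs report the same area per step, 0.5 · dt · |r × v| = 0.0006 for these initial values, whatever the force law, which is the "central forces in general" point above.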

Analogously, in triangle ABC with center of gravity T, the position of the center of gravity expresses the "simultaneity" of some particles at the vertices of the triangle; in other words, the center of gravity is the place relative to which the vertices are simultaneous. Moving one of the vertices, but not the other two, disturbs this property of the center of gravity and breaks the simultaneity.

In the continuation of this conversation, we will discuss various ways of knocking the center of gravity out of place, i.e. events that could disturb the simultaneity of individual particle systems. Meanwhile, let me remind you once again that applying mathematics to different fields does not imply that those fields are the same.

Dependence »

Question: Do you have some other expression for the above "simultaneity" (Centroid)?

Answer: Yes, try to understand this term as "dependence". The simultaneous events from that story are dependent in this sense, and I will explain that using both terms can carry unusual, deeper meanings.

The first common basis gives an expression for areas that I call a commutator. Let a Cartesian rectangular coordinate system (Oxy) be given with its origin at the center of gravity of the triangle above (T = O). The coordinates of the first vertex are A(Ax, Ay), and analogously for B and C. Then [A,B] = AxBy - BxAy is twice the (signed) area of triangle TAB.

The equality of the areas separated by the medians is equivalent to the equality of the corresponding commutators, and both express the amount of communication of the center of gravity T with the sides (vertices) of the given triangle. The same amount of information speaks of the balance of forces of that triangle at its center of gravity.
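A short check of this equivalence, assuming an arbitrary example triangle: after moving the origin to the centroid, the three commutators [A,B], [B,C], [C,A] coincide. The reason is that A + B + C = 0 at the centroid, so [B,C] = [B, -A - B] = -[B,A] = [A,B], and similarly for [C,A]. The helper names are hypothetical.

```python
def commutator(p, q):
    """[P, Q] = Px*Qy - Qx*Py: twice the signed area of triangle O, P, Q."""
    return p[0] * q[1] - q[0] * p[1]


def centered(A, B, C):
    """Shift the triangle so that its centroid T lies at the origin O."""
    tx, ty = (A[0] + B[0] + C[0]) / 3, (A[1] + B[1] + C[1]) / 3
    return [(x - tx, y - ty) for x, y in (A, B, C)]


A, B, C = centered((0.0, 0.0), (7.0, 1.0), (2.0, 5.0))  # an arbitrary example triangle
print(commutator(A, B), commutator(B, C), commutator(C, A))
# 11.0 11.0 11.0  (each is twice one third of the total area 16.5)
```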

Another common basis for the terms "simultaneity" and "dependence" in this treatment is given by the uncertainty relations of quantum mechanics. At vertices A and B, let there be the position operator x and the momentum operator p = -iℏ∂x acting on the wave function ψ. Their commutator is [x, p]ψ = x(-iℏ∂x)ψ - (-iℏ∂x)(xψ) = iℏψ, i.e. the uncertainty relation for position and momentum, [x, p] = iℏ, of wave-particles along the abscissa (x-axis).
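The identity [x, p]ψ = iℏψ can be checked numerically on any smooth test function by replacing ∂x with a central difference. A minimal sketch, assuming natural units (ℏ = 1) and a hypothetical Gaussian wave packet as ψ:

```python
import cmath
import math

HBAR = 1.0   # natural units, an assumption for the sketch
STEP = 1e-5  # finite-difference step


def psi(x):
    """Hypothetical sample wave function: a Gaussian packet with a plane-wave phase."""
    return math.exp(-x * x / 2) * cmath.exp(0.7j * x)


def derivative(g, x):
    """Central-difference approximation of dg/dx."""
    return (g(x + STEP) - g(x - STEP)) / (2 * STEP)


def position(g):
    """Position operator: x -> x * g(x)."""
    return lambda x: x * g(x)


def momentum(g):
    """Momentum operator: x -> -i * hbar * dg/dx."""
    return lambda x: -1j * HBAR * derivative(g, x)


def commutator_xp(g, x):
    """([x, p] g)(x) = x (p g)(x) - (p (x g))(x)."""
    return position(momentum(g))(x) - momentum(position(g))(x)


for x0 in (-1.0, 0.3, 1.5):
    lhs, rhs = commutator_xp(psi, x0), 1j * HBAR * psi(x0)
    print(f"x = {x0:+.1f}: [x,p]psi = {lhs:.6f}, i*hbar*psi = {rhs:.6f}")
```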

An analogous relation for the time operator t and the energy operator iℏ∂t follows by direct differentiation of the wave function, as above. It also follows by simply replacing the time coordinate with the fourth space-time coordinate x4 = ict. This second way is very much in the spirit of information theory, according to which there should be as many time coordinates as there are spatial ones, at least six in total. In addition, any four of them (out of the six) can be chosen for one real (4-D) space-time: three will be spatial and the fourth temporal, i.e. an imaginary spatial one.

These uncertainties then express not only the non-commutative nature of the processes (position-momentum, i.e. time-energy), but also their dependence. For partial derivatives not to commute, [∂x, ∂y] ≠ 0, the variables need to be dependent, y = f(x). This is exactly what we have in the case of simultaneity, which leads both to quantum entanglement and to the six dimensions of space-time.

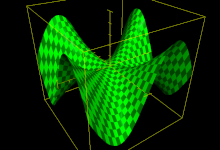

Also, at a break point, where it is not smooth enough, a function need not have symmetric second derivatives. For example, the function in the picture accompanying this answer is asymmetric in this sense at the origin.
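A standard textbook example of unequal mixed second derivatives is f(x, y) = xy(x² - y²)/(x² + y²) with f(0, 0) = 0. It may or may not be the function in the picture. The following Python sketch estimates ∂y∂x f and ∂x∂y f at the origin with nested central differences. The step sizes are assumptions, chosen so that the inner step is much smaller than the outer one.

```python
def f(x, y):
    """Classic example: x*y*(x^2 - y^2)/(x^2 + y^2), extended by f(0, 0) = 0."""
    if x == 0.0 and y == 0.0:
        return 0.0
    return x * y * (x * x - y * y) / (x * x + y * y)


H = 1e-6  # inner step (assumed), much smaller than K
K = 1e-3  # outer step (assumed)


def fx(x, y):
    """Central-difference estimate of the partial derivative in x."""
    return (f(x + H, y) - f(x - H, y)) / (2 * H)


def fy(x, y):
    """Central-difference estimate of the partial derivative in y."""
    return (f(x, y + H) - f(x, y - H)) / (2 * H)


f_xy = (fx(0.0, K) - fx(0.0, -K)) / (2 * K)  # differentiate f_x with respect to y
f_yx = (fy(K, 0.0) - fy(-K, 0.0)) / (2 * K)  # differentiate f_y with respect to x
print(round(f_xy, 3), round(f_yx, 3))        # approximately -1.0 and 1.0
```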

So, if in a given triangle the energies and momenta of a particle at two vertices are dependent, we again have non-commutativity, [E, p] ≠ 0, i.e. some "uncertainty relation". When the uncertainties of the pairs of vertices of a triangle are equal with respect to its center of gravity, we have a balance of both information and force. Thus we come again to the dependence of "a pair of gloves" from the description of "spooky action at a distance". It is also the dependence of "people becoming worried" after reading the same text in the newspaper (Bell).

Tripod »

Question: Is dialectics the only basis of the cosmos?

Answer: No, if I understood the question correctly. I guess you are asking about the dualism of information perception, or the double helix of DNA, the male-female pair in the reproduction of many species, orientation left or right, up and down, or a little versus a lot.

In addition to black-and-white contrast, there are also red-green-blue triplets, which give a neutral outcome (gray or white) in a balanced mixture. Or there are the Borromean rings (Latent): three simple closed curves in 3-dimensional space that are topologically linked together. They fall apart into two unlinked and unknotted loops when any one of the three is cut or removed. Restricting ourselves to dualisms alone is not in accordance with the principled multiplicity of information theory.

The same holds for triangles. They are perhaps better researched geometrically, and with them begin more precise and perhaps more complex structures than the everyday dialectical ones. So we avoid them wherever we can, and therefore notice them less. The center of gravity of a triangle rests on all three of its vertices, just as a tripod (trivet) needs all three legs so as not to tip over. There are also significant (abstract) connections between doublets and triplets, and between multiples in general, but they are not the topic here.

When the ends x1 and x2 carry equal weights, the center of equilibrium is at the arithmetic mean of the coordinates, x = (x1 + x2)/2. Project the vertices of a triangle onto any axis, say the abscissa, to get x1, x2 and x3. The projection of the center of gravity is then the corresponding arithmetic mean x = (x1 + x2 + x3)/3. We know this from geometry. I wrote in more detail about the coordinates of significant points of a triangle earlier (Triangulation, or Inscribed circles).

When unequal weights, masses m1 and m2, hang at the ends of the same segment, the center of equilibrium is at x = (m1x1 + m2x2)/(m1 + m2). Writing the masses at the vertices of the triangle consistently, the projection of the center of equilibrium becomes

$$x = \frac{m_1x_1 + m_2x_2 + m_3x_3}{m_1 + m_2 + m_3}.$$
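The weighted balance point can be computed directly. This Python sketch uses a hypothetical helper `balance_point` and assumed example coordinates. With equal masses, it reduces to the arithmetic mean (x1 + x2 + x3)/3.

```python
def balance_point(coords, masses=None):
    """Projection of the center of equilibrium: the mass-weighted mean of the coordinates."""
    if masses is None:
        masses = [1.0] * len(coords)  # equal weights give the plain arithmetic mean
    total = sum(masses)
    return sum(m * x for m, x in zip(masses, coords)) / total


xs = [1.0, 4.0, 7.0]  # assumed abscissas x1, x2, x3 of the three vertices
print(balance_point(xs))                   # equal weights: (1 + 4 + 7)/3 = 4.0
print(balance_point(xs, [1.0, 1.0, 2.0]))  # (1 + 4 + 14)/4 = 4.75
```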

Spontaneity »

Question: How to recognize "spontaneity" in complex processes?

Answer: Spontaneity is a condition that happens naturally and without planning (I quote the dictionary). If something, be it a reaction or a process, reduces a given free energy, we say that it takes place "spontaneously" (Chemistry 301).

In game theory (Win Lose), spontaneity appears as the opposite of a winning strategy. For example, take the tactic of "compromise" (win-win), which is the weakest class in competitive games played to win, and imagine the following situation. Competitor A is always in favor of compromise (good for me and good for others), while opponent B sometimes is not. When A is winning, B proposes a compromise, and A's victory is prevented. When A is losing, B refuses to compromise, and A finally loses. So A, wishing only the well-being of all, hardly ever wins, if at all, unlike B, who is not like that.

Careful analysis will reveal that opponent B occasionally defies the spontaneous course of events. Player A, by contrast, always chooses the easier path of no challenge and no resentment. Non-spontaneous changes are those that would occur under the influence of intelligence, i.e. of beings with an excess of information (options, actions). Such changes would be contrary to the "principle of least action", according to which all known movements in physics take place.

Therefore, artificial products are created by non-spontaneous action. If state changes are "violent", as with violence in general, we will not be inclined to approve of them. This is because the mentioned principle derives from the broader "least information principle", i.e. the "highest probability principle". The latter means that more likely events are more frequent outcomes, and the former that more informative events are less frequent. It follows that our aspiration to calm, rules and order, and inertia reduces to the instinct for death.

Spontaneous phenomena are accidental. But bigger coincidences, which are more improbable, carry more force (Action). So either the above (dictionary) definition needs to be rewritten, or we should understand that there are different types of "spontaneity". Coincidences can be tested using probability theory, which also measures their intensity. The more probable an event, the more spontaneous it would be, and then the less alive, the less vital.

Aggressiveness »

Question: Then, is aggressiveness the opposite of the "spontaneity"?

Answer: Yes, this is mostly the case in everyday speech, especially when it comes to the behavior of living beings. The more we behave outside the usual rules of the community, the more likely others are to consider us aggressive.

Unpredictable actions scare us, and that is where the notion of "aggressiveness" in terms of (my) information theory begins. Turning inward, to internal rather than external problems, will be considered tamer behavior (Information Stories, 1.4 Feminization). But when we dig a little deeper, we find that it is a consequence of the "aggressiveness" of uncertainty itself. In the deeper nature of the information of perception there is a force (Action). It lies at the root of various forms of aggression, or, as you say, of "the opposite of spontaneity".

It is no secret that we are terrified of the unknown, nor how unclear the ultimate cause of these fears is. With this theory we take a step forward. Information is the basic fabric of space, time and matter, the ontological basis of the universe, and uncertainty is its essence. Fear of the unknown is not unjustified, simply because the unknown options hold more that is bad than good for a peaceful world.

Uncertainty also applies to uncertainty itself, but a spontaneous realization of something favorable is less likely than one of something undesirable. Hence our efforts to arrange the environment artificially. We do so to reduce the surplus of options in general, but also because of the principled minimalism of information that applies to everyone. Mathematicians, scientists, engineers, all such inventors, and the legislators who work on discovering and applying order will gladly say that they are for world peace, security, stability and better living conditions. They say so (un)aware that they are subject to the principle of least information.

At each moment the perceptions of a given subject form a finite set of Packages. But the duration of the universe is infinite, and each object will at some time be a subject of perception, so the number of possibilities is countably infinite. This is the same cardinal number (ℵ0) as that of the integers or the rational numbers (Values). This infinity should be distinguished from the continuum (𝔠), a much larger number, which is the number of "pseudo-realities" emerging from one (our) present.

These cardinal numbers (infinities) already tell us how futile our desire is for mastering the cosmos and for absolute comfort. The same goes for stability, security and even peace in the world. On the contrary, with fewer options in our more organized world, we isolate ourselves and shrink within the universe. With such a renunciation, we never become fully protected, only less vital.

Layers of aggression surround us like the layers of an onion bulb. We keep removing all unpleasant behaviors and legitimizing ourselves ever more by social rules, by the demands of decency, civilization, or something else. Along that path we could reach a ban on all behavior. This is because aggression in general is the opposite of spontaneity, and we strive for the latter, knowingly or not. We consolidate it into order, which implies decency, security and comfort, but also efficiency.

Fear »

Question: Can we use information theory to explain fear?

Answer: Yes, that could be an interesting topic. Part of our fears comes from the unknown, from the excess of options (Aggressiveness). Taken outside information theory, that is already a big, completely new story.

Fear is cured by fear. In short, we get used to parts of the fear of the unknown, which is thereby reduced, because repeated "news" is no longer news. The unknown melts with acquaintance. In wars, this effect lets participants become increasingly courageous and slide easily into ever more terrible kinds of armed conflict. In a milder form, getting used to stagnation delays the end of the horrors of war.

Thanks to the law of information conservation, each of us has some range of "comfort" with respect to uncertainty. The upper limit of that range is the fear of excess options, of uncertainty. It runs from the nausea of tension over the difficulty of a problem we would like to solve up to the fear of freedom. Vice versa, at the bottom lies the discomfort of boredom, up to the fear of death.

The mentioned effect of "habituation" should be distinguished from "the power of persuasion", the reason why it is easier to deceive a person than to convince him that he has been deceived (Dogma). The latter arises from the principle of least information and intensifies the former, the melting of fear by fear. Thirdly, disinformation arises from an excess of information. Above a certain level, the more stimuli there are, the less we absorb them, so we become numb to fear.

These are also the initial reasons why evolution had to build its own mechanisms for protecting species. The first of these embeds a way of knowing that fear is present when we feel it (a physical response), so that fear is also the "expectation of pain". Uncertainty is layered, we perceive it in different ways, and physiology can develop so that it remembers "dangerous situations". Progress by simple random attempts and choosing the better ones (the Monte Carlo method) can then be improved by remembering and choosing the better ones in advance.

The layering and relativity of information make information known to one subject unknown to another. This allows the hunter to outwit the game and to sort out the "unexpected". It also drives us to communicate, because we never have everything we need, as I have written many times. Therefore, fear stimulated by a clear and present threat can be distinguished from apprehension that appears as fear without a clear cause. This would be a step towards explaining how anxiety depends on a person's ability to recognize threats, which is still a controversial topic in psychology.

Further elaboration of this story would lead us to the interpretation of emotions by physiology and information theory, or who knows where, but I suggest we stop here and take a break.

Stratification »

Question: Must insisting on some equalities always lead to the stratification of other inequalities?

Answer: Yes. We all know some easy examples of "unwanted" stratification, but it's a little harder to show that it's a general phenomenon. "Everyone is a genius. But if you judge a fish by its ability to climb a tree, it will live believing that it is stupid." — Einstein said on one occasion. Let's start with this.

The best weightlifters need not be the best runners, and the fattest man need not be the richest. We are aware of the possible differences between us. There may be top qualities in individuals the group rejects because those qualities are not in trend at the moment. But the general question remains open: are there "equal" different entities? We expect the answer to be "no" as far as living beings are concerned, but information theory also applies in a much broader context.

Entities that are not "one and the same" inevitably differ. So imposing equality on some important basis will increase the information of such a system and make it more expensive to maintain, or it will lead to stratification on some other grounds. Namely, the information of a system of equally probable outcomes is greater than that of the same outcomes with some unequal probabilities. On the other hand, systems spontaneously develop toward less informative, i.e. more probable, states.

The growing complexity of legal systems, administration and bureaucracy is a kind of "payment" for insisting on the equality of people before the law. Likewise, the free market leads to an ever smaller percentage of owners who hold ever more goods and power (Democracy).

I will not repeat the answer in the link, but will deepen it a bit. Take a "free network" of three nodes with equal connections, where the number of connections is as small as possible. The simplest example is not a triangle, but one node with two connections, one to each of the other two nodes. In the case of four nodes, one node would be privileged to run three links, one to each of the other three nodes. In general, a network of many nodes, each connected by at least one link, would have equal connections but unequal nodes. The more complex and efficient the (free) network, the less equality there would be between its nodes.
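The claim that a minimal network must have unequal nodes can be made precise. A connected network on n nodes with as few links as possible is a tree with n - 1 links, so its degrees sum to 2(n - 1). Equal degrees would require each degree to be 2 - 2/n, which is not an integer for n ≥ 3. The Python sketch below confirms this by brute force. It enumerates every labeled tree through its Prüfer sequence, a standard encoding used here only for illustration.

```python
from itertools import product


def tree_degrees(prufer, n):
    """Degrees of the labeled tree on nodes 0..n-1 encoded by a Prufer sequence:
    each node's degree is 1 plus the number of times it occurs in the sequence."""
    degrees = [1] * n
    for node in prufer:
        degrees[node] += 1
    return degrees


results = {}
for n in range(3, 7):
    trees = equal = 0
    for seq in product(range(n), repeat=n - 2):  # each sequence encodes exactly one tree
        trees += 1
        if len(set(tree_degrees(seq, n))) == 1:  # do all nodes have the same degree?
            equal += 1
    results[n] = (trees, equal)
    print(n, trees, equal)  # n**(n - 2) trees (Cayley), none with all degrees equal
```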

Because of the efficiency of bridging, a few nodes would have many connections, as opposed to the many poor nodes with few links. A similar thing happens with the free flow of money, goods and services. There, for the sake of efficiency, an ever smaller proportion of participants becomes ever richer. It is similar in the world of power, where the equality of people before the law is assumed. The same holds in the world of acquaintances, where few are very famous. It also appears in the electric distribution networks of developed countries that do not want to leave any place unsupplied, and in the Internet. Mathematical models are universal.

All those networks that are free, efficient and equal in one way must become unequal in another. There is a well-known athlete who was not even among the top 200 in the men's competition, but became invincible in the women's competition. He did so by declaring himself a woman, using a right legalized to achieve equality between women and men. He took the idea of equality to absurdity. These are not peculiarities but regularities. There is a formalism of stratification, I repeat: the mathematical certainty that insisting on the equality of some properties increases the inequality of third ones. At the same time, equality of all characteristics of several individuals is not possible.

However, equal "Information Perceptions" of the same object by different subjects are possible. This is a question of equal scalar products of different vectors with the same given vector, but it also has some limitations. Consider only the unit vectors of a space (quantum system). Suppose the vectors have two components (a two-dimensional space). Then only two vector-subjects are possible for a given vector-object that give equal values of the subject-object product (information perception). In a 3-D space, three subject vectors (Tripod) are possible, and in an n-D space, n such vectors.
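One way to read this claim is illustrated below in Python. It assumes that the subject vectors must also be mutually orthogonal, as distinct states of an orthonormal basis. With the object taken to be the unit "diagonal" vector, all n basis vectors of an n-D space give the same scalar product 1/√n. No more than n mutually orthogonal unit vectors fit in that space. The choice of object vector is an assumption for illustration.

```python
import math


def dot(u, v):
    """Scalar product of two real vectors."""
    return sum(a * b for a, b in zip(u, v))


results = {}
for n in (2, 3, 4):
    obj = [1 / math.sqrt(n)] * n  # assumed object: the unit "diagonal" vector
    subjects = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # orthonormal basis
    perceptions = [dot(s, obj) for s in subjects]  # one scalar product per subject
    results[n] = perceptions
    print(n, [round(p, 4) for p in perceptions])  # n equal values, each 1/sqrt(n)
```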

Four quantum numbers describe the position and energy of electrons in an atom: the principal, azimuthal, magnetic and spin quantum numbers. The Pauli Exclusion Principle states that no two electrons of the same atom can have identical values for all four of their quantum numbers. From that knowledge and this principle, the structure of Mendeleev's Periodic Table of chemical elements is explained. Let us add, with this new knowledge, that the world of atoms within our very reality exists in 4-D space-time.
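The counting behind the shell structure can be sketched in Python, assuming the usual ranges l = 0, ..., n - 1, m = -l, ..., l and spin ±1/2. By Pauli's principle each tuple of quantum numbers holds at most one electron, so shell n accommodates 2n² electrons.

```python
from fractions import Fraction


def states_in_shell(n):
    """All allowed (n, l, m, s) quantum-number tuples for principal quantum number n."""
    states = []
    for l in range(n):                                   # azimuthal: 0 .. n-1
        for m in range(-l, l + 1):                       # magnetic: -l .. l
            for s in (Fraction(-1, 2), Fraction(1, 2)):  # spin: -1/2 or +1/2
                states.append((n, l, m, s))
    return states


# Pauli: one electron per tuple, so the shell capacity is the number of tuples.
for n in range(1, 5):
    print(n, len(states_in_shell(n)))  # 2, 8, 18, 32, i.e. 2*n**2
```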

Scale-free »

Question: What types of free networks actually occur according to information theory?

Answer: Everything is theoretically possible, but they do not have equal chances and depend on the situation.

Scale-free networks are graphs characterized by equal (peer-to-peer) links and unequal nodes. The picture on the right shows a red "rich" node with seven links, five "poor" ones with only one link each, and others.

The probability P(k) that a node has k connections is proportional to the power $k^{-\gamma}$. The exponent γ depends on the type of network and is typically a number between 2 and 3.

Examples include social networks, among them collaboration networks of, say, actors, or co-authorship networks of mathematicians and the like. Then there are many computer networks, including the Internet, and financial networks such as interbank payments. Further examples are protein-protein interaction networks, semantic relations of concepts, airline networks, and the distribution of money among people.

The above probability distribution is more often found as a sum of several such terms than isolated as a single power, so the universality of these networks remains controversial. Moreover, social networks are rarely simple, and we say they are weakly scale-free. The expressions for the probabilities of some technological and biological networks become so complicated that these networks are almost not scale-free.

Information theory sheds new light on these models. All connections are equal, i.e. equally probable. So a new connection is more likely to attach to a node that already has more of them, and the rich get richer. This is in line with the principle of communication thriftiness of this theory. If all outcomes were equally probable, the information of the system would be maximal, and the system would spontaneously tend to leave such a state.
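The "rich get richer" mechanism described above corresponds to the well-known preferential attachment (Barabási–Albert) model. The Python sketch below is a simplified variant with one link per new node, assumed here for illustration. Picking a uniformly random end of an existing link selects a node with probability proportional to its degree. The degree counts then fall off roughly as a power of k, and a few hubs emerge.

```python
import random
from collections import Counter


def preferential_attachment(n_nodes, seed=1):
    """Grow a network one node at a time. Each new node links to one existing node,
    chosen with probability proportional to its current degree (m = 1 variant)."""
    rng = random.Random(seed)
    degree = [1, 1]     # start from a single link between nodes 0 and 1
    link_ends = [0, 1]  # every link contributes both of its endpoints
    for new in range(2, n_nodes):
        target = rng.choice(link_ends)  # uniform over link ends = proportional to degree
        degree.append(1)
        degree[target] += 1
        link_ends.extend([new, target])
    return degree


deg = preferential_attachment(5000)
counts = Counter(deg)
print("max degree:", max(deg))
for k in (1, 2, 4, 8, 16):
    print(k, counts.get(k, 0))  # counts drop steeply as k grows
```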

On the other hand, the maximal hierarchy with n = 2, 3, 4, ... nodes would be the "dictatorship" of one powerful man. He would be linked once to each of n - 1 wretches, one rich node with n - 1 connections to as many poor ones. But it would again have a problem with the principle of thriftiness of communication. Such possibly numerous (n → ∞) singly connected nodes would communicate with equal likelihood, and so they would decrease the probability of the system, i.e. increase its informativeness. This would result in their spontaneous Stratification.

The optimum, minimum information or maximum probability, would lie somewhere in between. Living systems develop in such a way that consciousness, or the brain itself, does not control all cellular processes like the mentioned dictator. Cells are endowed with their own "life". They can communicate independently, but also with the "center". Living beings achieve such maximal efficiency. Information theory predicts that it is impossible, or hardly possible, with a "dictatorial" consciousness.

Distribution »

Question: How do you calculate information of the distribution in the book "Physical Information"?

Answer: Let's try to understand how Shannon (1948) calculates the average information, then we will compare it with the supplement that makes it "physical".

Information is the logarithm of the number of equally probable possibilities (Hartley, 1928). We continue to work with natural logarithms, so the information of one of n equally probable possibilities is

$$I = \ln n.$$

Take a uniform distribution with n random events of equal chances. The information of a particular outcome is ln n, and the average over all of them, the so-called mathematical expectation, has the same value:

$$S = \sum_{k=1}^{n} \frac{1}{n}\ln n = \ln n.$$

Let us remind ourselves that Hartley's information is subject to the "law of conservation", and that it is "physical". Suppose each of the n outcomes branches into m outcomes, for these constant numbers. Then we have mn outcomes, each with Hartley information ln(mn) = ln m + ln n. The average information of the corresponding uniform distribution has the same amount.

When the probabilities p1, p2, ..., pn of the distribution are unequal, their sum is still one and their average is 1/n. So the information calculated from the average probability is ln n, equal to that of the uniform distribution. However, the real average is Shannon's

$$S = -\sum_{k=1}^{n} p_k \ln p_k,$$

which is smaller than ln n.
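The Hartley and Shannon averages can be compared numerically. This Python sketch assumes an example distribution over n = 4 outcomes. The helper `shannon` computes -Σ pk ln pk with natural logarithms. The sketch also checks the additivity ln(mn) = ln m + ln n of Hartley information.

```python
import math


def shannon(probs):
    """Mean information -sum p ln p in nats; zero probabilities contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)


n = 4
uniform = [1 / n] * n
skewed = [0.4, 0.3, 0.2, 0.1]  # assumed unequal distribution, also summing to 1

print(math.log(n), shannon(uniform))  # Hartley ln 4 and the uniform average coincide
print(shannon(skewed))                # smaller than ln 4: Shannon's "deficit"

m = 3  # each of the n outcomes branching into m outcomes
print(math.isclose(math.log(m * n), math.log(m) + math.log(n)))  # True
```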

The real question, then, is where this Shannon information deficit came from or how to interpret it from the standpoint of a new information theory. I looked for the answer in the redefinition of the term "real" average.

Real information is inseparable from the "information of perception", which is a product of the "information" of subject and object. In other words, the same object is not equally informative to different subjects, to the extent that its outcomes really have relative probabilities. This "really" means that "somewhere out there" there must be an observer who could consider such other probabilities objective.

Question: What do you mean by "there is an observer" who sees different probabilities?

Answer: In information theory, parallel realities are acceptable options. That is why we include them in some places, as in this example. Here, primarily because of the relativization of probability and the repetition of random events.

If a lottery player wins a prize, his chances of being able to buy a car, a house or the like increase compared to the situation when his numbers do not come out. Depending on the random event in the box, Schrödinger's cat (The Cat in Box) may turn out to be alive or dead. The subsequent events, such as whether the cat will jump and break the vase, then do not have the same probabilities. The future follows from previous events, and the chances of new choices depend on the earlier ones.

Let us now return to the Binomial distribution B(n, p) from the mentioned book. Take an (unfair) coin that lands "heads" with probability p each time and "tails" with probability q = 1 - p. Let it be thrown n = 1, 2, 3, ... times. What is the probability that "heads" falls exactly k = 0, 1, 2, ..., n times and "tails" n - k times? This is a typical Binomial distribution task.

Two "heads" in a row have probability $p^2$, three in a row $p^3$, and k in a row $p^k$. If "tails" fell in the remaining n - k throws, with probability $q^{n-k}$, this is a single event of probability $p_k = p^k q^{n-k}$. I say "single" because a different sequence of these k favorable and n - k unfavorable outcomes can be achieved with the same probability. The number of these sequences is $C_n^k = \binom{n}{k}$, the number of combinations of k elements chosen from an n-element set.

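Thus, in Bernoulli's (binomial) distribution, k favorable and n - k unfavorable outcomes will be realized with probability $P_k = C_n^k p_k$, in an event containing many repetitions of combinations. If we ignore these repetitions, the information of an individual event is $I_k = -\ln p_k$. The information of such a "distribution" is then $L_n = \sum_k P_k I_k = -\sum_k P_k \ln p_k$. Since $\ln p_k = k\ln p + (n-k)\ln q$ and the mean of k is np, this equals $L_n = -n(p\ln p + q\ln q)$, which is n times the information of a single throw. It is "physical" information in the sense that it adheres to the law of Conservation. Shannon's information of the binomial distribution, $-\sum_k P_k \ln P_k$, does not, and gives a smaller value.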
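The difference between the two averages can be checked numerically. In this Python sketch, with p = 0.3 as an assumed value, L_n = -Σ P_k ln p_k uses the probability p_k = p^k q^(n-k) of one particular sequence. Shannon's value -Σ P_k ln P_k uses P_k = C(n, k) p_k instead. L_n equals n times the information of a single throw, as conservation requires, while Shannon's value is smaller for n ≥ 2.

```python
import math


def binomial_informations(n, p):
    """Return (L_n, Shannon) for B(n, p), with 0 < p < 1."""
    q = 1 - p
    L = 0.0
    S = 0.0
    for k in range(n + 1):
        p_k = p**k * q ** (n - k)      # probability of one particular sequence
        P_k = math.comb(n, k) * p_k    # probability of exactly k heads
        L -= P_k * math.log(p_k)       # -sum P_k ln p_k
        S -= P_k * math.log(P_k)       # -sum P_k ln P_k (Shannon)
    return L, S


p = 0.3  # assumed probability of "heads"
single = -(p * math.log(p) + (1 - p) * math.log(1 - p))  # information of one throw
results = {}
for n in (1, 2, 5, 10):
    L, S = binomial_informations(n, p)
    results[n] = (L, S)
    print(n, round(L, 6), round(n * single, 6), round(S, 6))
```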

This failure of Shannon's information tells us about the existence of a certain latent uncertainty (Emergence) that his formula does not register. It does not see that physical bodies are not only real (Solenoid), that they are not all in one present (light needs some time to pass from end to end). Bodies are constantly attached to pseudo-realities, which they can partly pull along in relation to the subject, the relative observer.

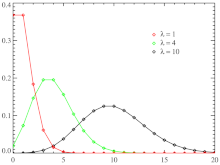

In the mentioned book, the continuation of this theory is as classical as possible, which could be its flaw. You will find there that the Bernoulli (binomial) distribution passes into the Poisson Distribution when the probability p is small relative to a large number of repetitions n. In the figure we see three graphs. They take the form of the Gaussian "bell", the normal distribution, as the (mathematical) expectation λ = np grows.
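The passage from the binomial to the Poisson distribution can be illustrated numerically. The Python sketch below assumes n = 1000 and p = 0.003, so λ = np = 3. It reports the largest pointwise difference between the two probability mass functions over k = 0, ..., 39. The tail beyond that is negligible and is skipped to avoid floating-point overflow in λ^k.

```python
import math


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n trials."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k, lam):
    """P_k = lam^k e^(-lam) / k!"""
    return lam**k * math.exp(-lam) / math.factorial(k)


n, p = 1000, 0.003  # assumed: many repetitions, small probability
lam = n * p         # expectation lambda = np
worst = max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam)) for k in range(40))
print(worst)  # the largest pointwise difference is small
```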

These approximations of Bernoulli's distribution will transfer the deficits of Shannon's information to general cases, each in its own way, but I somewhat ignore them in that book. However, to demonstrate the method of the mentioned approach, I have dealt in more detail with other well-known types of discrete distributions in the same way.

Poisson »

Question: Tell me more about Poisson's distribution?

Answer: Baron Siméon Denis Poisson (1781 - 1840), pictured right, was a French mathematician and physicist who made many contributions to science. The distribution named after him is important to us not only as an approximation of the binomial (Distribution). Here is one of its classic derivations.

Over time, we count events: the passage of cosmic rays, cars, telephone rings. The number of events k in the interval Δt = b - a, from moment a to moment b, is homogeneous. Its probability does not change when the interval [a, b] is shifted, only when its length Δt varies. Disjoint intervals, which have no common points, are independent. Finally, all these events are separable, meaning only one of them can happen at a time.

These are the three preconditions for applying the Poisson distribution. We call them homogeneity, independence and separability. When all three are met, the probability of recording k events in the interval Δt is

$$P_k = \frac{\lambda^k}{k!}e^{-\lambda},$$

where λ is the mean (expected) number of events in that interval.

It is known that 9161 live babies were born in the Republic of Srpska during 2020. That is approximately 25 per day, or an average of 1.05 births per hour. To find the probability of k = 0, 1, 2, 3 births in one hour, we put the mean value λ = 1.05 into the above formula and calculate P0, P1, P2 and P3.
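Taking the Poisson formula as P_k = λ^k e^(-λ)/k!, the requested probabilities can be computed with a few lines of Python. Only λ = 1.05 comes from the text, and the helper name `poisson_pmf` is illustrative.

```python
import math


def poisson_pmf(k, lam):
    """P_k = lam^k e^(-lam) / k!, the probability of k events in the interval."""
    return lam**k * math.exp(-lam) / math.factorial(k)


lam = 1.05  # mean number of births per hour (from the text)
probs = [poisson_pmf(k, lam) for k in range(4)]
for k, P in enumerate(probs):
    print(k, round(P, 4))  # 0.3499, 0.3674, 0.1929, 0.0675
```

So no baby is born in roughly a third of the hours, exactly one in slightly more than a third, and three in about 7% of them.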

The lower the probability Pk, the greater the information -ln Pk it carries. Since the probability is a real number between 0 and 1, the information, with the minus sign in front of the logarithm, is positive. In the best case, we have two disjoint intervals Δt' and Δt" of equal duration, with expectations λ' and λ". The product of the probabilities Pk' and Pk" will then be close to the above Pk with the combined parameter λ ≈ λ' + λ" and the same number of events k. Only under such very limited conditions would the information add up, since:

$$-\ln P_k' - \ln P_k'' = -\ln(P_k' P_k'') \approx -\ln P_k.$$

Namely, the mean value of all the information of one Poisson distribution, -Σk Pk ln Pk, does not allow the above additivity. The law of conservation of information does not apply. This means that Shannon's information of the Poisson distribution, like that of the Bernoulli distribution, does not reveal some latent information.
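The two claims about Poisson information can be checked in Python. For k = 0, the information adds exactly, because e^(-λ')e^(-λ'') = e^(-(λ' + λ'')). The mean information -Σ P_k ln P_k, however, is not additive. Take two intervals with λ' = λ'' = 1 (assumed values): the sum of their mean informations clearly exceeds the value for the merged interval with λ = 2. The sum over k is truncated at k = 100, an assumption that is harmless for such small λ.

```python
import math


def poisson_pmf(k, lam):
    """P_k = lam^k e^(-lam) / k!"""
    return lam**k * math.exp(-lam) / math.factorial(k)


def mean_information(lam, kmax=100):
    """-sum P_k ln P_k, truncated at kmax (the neglected tail is tiny for small lam)."""
    total = 0.0
    for k in range(kmax):
        P = poisson_pmf(k, lam)
        if P > 0:
            total -= P * math.log(P)
    return total


lam1, lam2 = 1.0, 1.0  # assumed expectations of the two intervals

# k = 0: information adds exactly, since e^(-l1) e^(-l2) = e^(-(l1 + l2))
k0_separate = -math.log(poisson_pmf(0, lam1)) - math.log(poisson_pmf(0, lam2))
k0_merged = -math.log(poisson_pmf(0, lam1 + lam2))
print(k0_separate, k0_merged)

# Mean information: the two separate intervals together exceed the merged one.
s_separate = mean_information(lam1) + mean_information(lam2)
s_merged = mean_information(lam1 + lam2)
print(round(s_separate, 4), round(s_merged, 4))
```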

Such information is comparable, for example, to kinetic energy. Kinetic energy changes with the movement of the body. It does not add up by simply adding speeds, and the law of conservation does not apply to it. But for "kinetic plus potential" energy, the law of conservation holds.

Triangular »

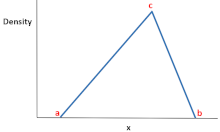

Question: Have you dealt with the "Triangular Distribution"?

Answer: Yes, see the details in Physical Information, p. 57 et seq. The abscissa (x-axis) carries the random variable, and the ordinate (y-axis) the density. At x = c the probability density is highest, and outside the interval [a, b] it vanishes, so the densities form a triangle. We see it in the graph on the left.

Consider the part of the triangle between the verticals at x1 and x2, which we write x ∈ [x1, x2]. Its area is the probability that the number x takes a value from that interval. Accordingly, the area of the whole triangle is one, because choosing a number from the interval [a, b] is a certain event.

Note that the shape of the triangle roughly approximates the "bell-shaped" Gaussian "normal distribution", and it allows for asymmetry as a bonus. The bell-shaped distribution is otherwise inconvenient for calculations. The triangular one is much easier to work with, and its imprecision matters less to us than its practicality.

For example, suppose we organize a company and plan salaries per employee. The lowest salary is a monetary units, the highest is b, and most workers get c, where a ≤ c ≤ b. For a first estimate of costs, the triangular distribution will be a very good choice.

Another example: we plan to sell goods from a store. We believe that the smallest amount of money taken in during the coming week will be a, the largest b, and the most probable c. Again c ∈ [a, b]. As a third example, take an election. A candidate does not expect to receive fewer than a votes in the coming elections, nor more than b votes, but believes it will be around c votes.

As you can see, the triangular distribution has many applications, and it is worth getting acquainted with it. If the number x ∈ [a, c] occurs, its density lies on the ascending side of the triangle. If x ∈ [c, b], its density lies on the descending side. Together, all these densities enclose the unit area of the triangle, because some abscissa from the lower base of the triangle (a ≤ x ≤ b) will surely be chosen.

The area of the triangle is half the product of the base length (b - a) and the height h. So from (b - a)h/2 = 1 follows h = 2/(b - a), the ordinate of the vertex of the triangle above the abscissa c. The line through the two points (a, 0) and (c, h) is the ascending side of the triangle. The descending side is the line through (c, h) and (b, 0).

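Hence the known probability densities of the numbers a ≤ x ≤ c and c ≤ x ≤ b, before and after their maximum at c, respectively:

$$f(x) = \begin{cases} \dfrac{2(x-a)}{(b-a)(c-a)}, & a \le x \le c, \\ \dfrac{2(b-x)}{(b-a)(b-c)}, & c \le x \le b. \end{cases}$$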
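The piecewise densities f(x) = 2(x - a)/((b - a)(c - a)) for a ≤ x ≤ c and f(x) = 2(b - x)/((b - a)(b - c)) for c ≤ x ≤ b can be checked numerically. In this Python sketch, the salary figures a, c, b are assumed example values. The midpoint rule confirms that the area is one and that the peak height is 2/(b - a). The standard library's `random.triangular(low, high, mode)` then draws samples whose mean should be close to the known mean (a + b + c)/3.

```python
import random


def triangular_pdf(x, a, c, b):
    """Density of the triangular distribution on [a, b] with mode c (a <= c <= b, a < b)."""
    if x < a or x > b:
        return 0.0
    if x <= c:
        return 2 * (x - a) / ((b - a) * (c - a)) if c > a else 2 / (b - a)
    return 2 * (b - x) / ((b - a) * (b - c))


def area(a, c, b, steps=100_000):
    """Midpoint-rule integral of the density over [a, b]."""
    h = (b - a) / steps
    return sum(triangular_pdf(a + (i + 0.5) * h, a, c, b) for i in range(steps)) * h


a, c, b = 1000.0, 1500.0, 4000.0  # assumed lowest, most common and highest salary
print(area(a, c, b))                            # close to 1.0
print(triangular_pdf(c, a, c, b), 2 / (b - a))  # peak height h = 2/(b - a)

rng = random.Random(0)
sample = [rng.triangular(a, b, c) for _ in range(100_000)]  # note the (low, high, mode) order
print(sum(sample) / len(sample), (a + b + c) / 3)           # sample mean vs. known mean
```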

On a given interval, the information of the uniform distribution is maximal. Its outcomes are equally probable, and according to principled minimalism, nature "dislikes" this (Equality) and spontaneously avoids it. The shorter the base b - a, the steeper the sides of the triangle and the more focused the choices become. The information, both triangular and uniform, is then smaller.

A logarithm is negative when its argument is less than one. Thus this information itself becomes negative for very small ranges of choice. Moreover, if b - a → 0, then S → -∞, which is inadmissible in classical informatics, but maybe not in mine.
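The negative values can be seen numerically. For the triangular distribution, the mean information -∫ f ln f dx has the standard closed form 1/2 + ln((b - a)/2), independent of the mode c. The uniform density on [a, b] gives ln(b - a). The Python sketch below uses assumed widths and mode position. It compares a midpoint-rule estimate with the closed form and shows both quantities dropping below zero as b - a shrinks.

```python
import math


def triangular_pdf(x, a, c, b):
    """Triangular density on [a, b] with mode c, assuming a < c < b."""
    if x < a or x > b:
        return 0.0
    if x <= c:
        return 2 * (x - a) / ((b - a) * (c - a))
    return 2 * (b - x) / ((b - a) * (b - c))


def numeric_information(pdf, a, b, steps=100_000):
    """Midpoint-rule estimate of -integral of f ln f over [a, b]."""
    h = (b - a) / steps
    total = 0.0
    for i in range(steps):
        f = pdf(a + (i + 0.5) * h)
        if f > 0:
            total -= f * math.log(f) * h
    return total


rows = []
for width in (4.0, 1.0, 0.1, 0.01):
    a, b = 0.0, width
    c = 0.3 * width                     # assumed mode; the result does not depend on it
    tri = numeric_information(lambda x: triangular_pdf(x, a, c, b), a, b)
    closed = 0.5 + math.log(width / 2)  # closed form for the triangular distribution
    uniform = math.log(width)           # the uniform density 1/(b - a) gives ln(b - a)
    rows.append((width, tri, closed, uniform))
    print(width, round(tri, 4), round(closed, 4), round(uniform, 4))
```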

Suppose we extended information theory to parts of the Quantum, i.e. subtracted the uncertainty of the smallest packets of information (Packages), believing that some uncertainty still remains inside. We would then discover a whole infinity of information there. If this procedure were possible, we would work in the microworld with unlimited "negative" information.

Bell-curved »

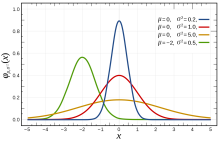

Question: Can you tell me something (new) about the Gaussian distribution?

Answer: Yes, we call it the "normal distribution" (see Quantum Mechanics, p. 76-80), or the "bell curve" (Wikipedia), labeled N(μ, σ²). However, many other distributions are also bell-shaped, such as the Cauchy, Student's t, and logistic distributions.

The parameter μ is the mean value (of the random variable x), and σ² is the variance, the mean squared deviation of the variable x from μ. Its square root σ is the standard deviation.

Almost everything important about the Gaussian distribution is said in these two links, so below I will retell details that may be more interesting to some readers, but which belong rather to the newer information theory. Imagine a fluid (gas or liquid) whose molecules move with an average velocity μ, with σ the typical deviation from that velocity. To begin, let μ = 0 and σ = 1.

In the unit of time (t = 1) and for the unit mass (m = 1), the kinetic energy of the molecule (Ek = mv²/2) is half the square of the velocity (v). Hence the action (energy × time) of a molecule, and with it its information, can be written as v²/2. Information is the negative logarithm of probability, so the probability behaves as exp(-v²/2), and renaming the velocity into a random variable (v → x) gives the Gaussian probability distribution (density) φ(x) = exp(-x²/2)/√(2π).

The density is normalized to unity: the exponential function is divided by the constant √(2π) so that the integral (sum) of probabilities over all possible velocities of the molecule is one (a certain event). When the fluid flows with some mean molecular velocity μ and an average spread σ of that velocity, we substitute x → (x - μ)/σ, where the new x is again a random variable corresponding to the velocity. Its mean is now μ and its standard deviation is σ. We then normalize the density by dividing the exponential by σ√(2π), which gives φ(x) = exp[-(x - μ)²/(2σ²)]/[σ√(2π)].
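A short numerical check of the two steps above, offered as a sketch: the information -ln φ(x) differs from the "action" x²/2 only by the constant ln √(2π), and after the substitution x → (x - μ)/σ the density still integrates to one, with mean μ and standard deviation σ. The function names and the integration range of ten standard deviations are my own choices for illustration.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Gaussian density exp(-(x - mu)^2 / (2 sigma^2)) / (sigma * sqrt(2 pi))."""
    z = (x - mu) / sigma  # the substitution x -> (x - mu)/sigma
    return math.exp(-z * z / 2.0) / (sigma * math.sqrt(2.0 * math.pi))


def moments(mu, sigma, n=200_000, width=10.0):
    """Midpoint-rule total probability, mean and standard deviation over
    mu +/- width*sigma (the tails beyond that range are negligible)."""
    lo, hi = mu - width * sigma, mu + width * sigma
    dx = (hi - lo) / n
    total = mean = second = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * dx
        p = normal_pdf(x, mu, sigma) * dx
        total += p
        mean += x * p
        second += x * x * p
    return total, mean, math.sqrt(second - mean * mean)


# Standard case: information minus x^2/2 is the same constant, ln sqrt(2 pi) ~ 0.9189.
for x in (0.0, 1.0, 2.5):
    print(x, -math.log(normal_pdf(x)) - x * x / 2.0)

total, mean, sd = moments(mu=2.0, sigma=0.5)  # example values for mu and sigma
print(f"area = {total:.6f}, mean = {mean:.6f}, standard deviation = {sd:.6f}")
```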

The mean value of the information of the Gaussian distribution is not "entropy", which is its common name in the literature today, but should simply be called "mean information". You can also find this mean value SN = ln[σ√(2πe)] in my book “Physical Information” (Theorem 2.4.16).
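The value SN = ln[σ√(2πe)] can be checked by integrating -φ ln φ numerically. The sketch below does that with the midpoint rule; the helper names and the cut-off at twelve standard deviations are illustrative assumptions, and the result does not depend on μ.

```python
import math


def normal_pdf(x, mu, sigma):
    z = (x - mu) / sigma
    return math.exp(-z * z / 2.0) / (sigma * math.sqrt(2.0 * math.pi))


def mean_information_numeric(mu, sigma, n=100_000, width=12.0):
    """S = -integral of phi ln phi over mu +/- width*sigma, midpoint rule (nats)."""
    lo = mu - width * sigma
    dx = 2.0 * width * sigma / n
    s = 0.0
    for i in range(n):
        p = normal_pdf(lo + (i + 0.5) * dx, mu, sigma)
        if p > 0.0:
            s -= p * math.log(p) * dx
    return s


def mean_information_closed(sigma):
    return math.log(sigma * math.sqrt(2.0 * math.pi * math.e))  # SN = ln[sigma sqrt(2 pi e)]


for sigma in (2.0, 1.0, 0.1):
    print(f"sigma = {sigma}: numeric {mean_information_numeric(0.0, sigma):+.6f}, "
          f"closed form {mean_information_closed(sigma):+.6f}")
```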

The velocities of molecules within one σ to the left and right of the maximum μ (the highest part of the Gaussian bell) make up about two thirds of all outcomes. When this band narrows, σ → 0, the bell becomes tall and sharp, while the total area between it and the abscissa stays equal to one. The earlier remark (Triangular) about negative mean information still applies, now for very small σ.
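Both claims can be made concrete with the standard library, as a small sketch: the share of outcomes within one σ of μ is erf(1/√2), and SN = ln[σ√(2πe)] changes sign at σ = 1/√(2πe). The function names are hypothetical.

```python
import math


def prob_within(k):
    """P(|X - mu| < k*sigma) for a Gaussian; it depends on neither mu nor sigma."""
    return math.erf(k / math.sqrt(2.0))


def mean_information(sigma):
    return math.log(sigma * math.sqrt(2.0 * math.pi * math.e))  # SN in nats


sigma_zero = 1.0 / math.sqrt(2.0 * math.pi * math.e)  # where SN changes sign

print(f"P(|X - mu| < sigma) = {prob_within(1.0):.4f}")  # about two thirds
print(f"SN < 0 once sigma < {sigma_zero:.4f}")
for sigma in (1.0, 0.5, sigma_zero, 0.1, 0.01):
    print(f"sigma = {sigma:.4f}: SN = {mean_information(sigma):+.4f}")
```

The threshold is about 0.242, so the mean information is negative for any bell narrower than roughly a quarter of the unit.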

Randomness »

Question: How to recognize "randomness" and what can we do with it?

Answer: Randomness can be tested. If we choose the outcomes of coin tosses, heads or tails, at will, we will almost certainly fail to simulate real chance. It is even harder to achieve randomness with more options (rolling a die), or when some correlations, stronger or weaker, must also be respected.
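One classic test of such coin sequences, which I add here as an illustration, is the Wald-Wolfowitz runs test: it compares the number of runs (blocks of equal symbols) with the number expected by chance. The sequence `human_like` below is a made-up example of outcomes "chosen at will" that alternate too eagerly, not real data; the 1.96 threshold relies on the usual normal approximation.

```python
import math
import random


def runs_z_score(seq):
    """Wald-Wolfowitz runs test for a two-symbol sequence such as 'HTTH...'.

    Returns the z-score of the observed number of runs; |z| > 1.96 happens
    only about 5% of the time for a truly random sequence (normal approximation)."""
    symbols = sorted(set(seq))
    if len(symbols) != 2:
        raise ValueError("need exactly two different symbols")
    n1, n2 = seq.count(symbols[0]), seq.count(symbols[1])
    n = n1 + n2
    runs = 1 + sum(1 for x, y in zip(seq, seq[1:]) if x != y)
    expected = 2.0 * n1 * n2 / n + 1.0
    variance = 2.0 * n1 * n2 * (2.0 * n1 * n2 - n) / (n * n * (n - 1))
    return (runs - expected) / math.sqrt(variance)


# Hypothetical sequence "chosen at will": it switches between H and T too often.
human_like = "HTHTHHTHTTHTHTHHTHTHTTHTHTHTHHTHTHTHTTHT"
rng = random.Random(1)
machine = "".join(rng.choice("HT") for _ in range(len(human_like)))

print(f"'human' sequence: z = {runs_z_score(human_like):+.2f}")
print(f"pseudo-random:    z = {runs_z_score(machine):+.2f}")
```

A large positive z means too many alternations, a large negative z means too few, both hints that the sequence was not produced by chance.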

The problem is keeping, at will, a steady deviation σ from the mean value μ, which is not easy, for example, for a bell-curved distribution. The deviation must be maintained at the same pace in shorter series and be moderately restrained in the broader picture, because the law of large numbers also applies.

This suggests one way to build randomness tests. We would compare two kinds of data, the given (observed) ones and the theoretical ones, the latter obtained from the probability distribution. We would get the areas of two "bells" that only partially overlap, and the size of the non-overlapping surplus would tell us how far the data deviate from chance. The "χ² distribution" is used in testing in a slightly different way (Statistics).
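A sketch of both ideas for a six-sided die, under my own assumptions: the "surplus" is taken as the non-overlapping part of the two histograms, ½Σ|observed frequency - 1/6|, next to the usual χ² statistic. The critical values 11.07 (upper 5%) and 1.145 (lower 5%) are the standard table values for 5 degrees of freedom; the two roll lists are hypothetical.

```python
from collections import Counter

CHI2_UPPER = 11.07  # 95th percentile of chi-square with 5 degrees of freedom
CHI2_LOWER = 1.145  # 5th percentile: data this even are suspicious too


def compare_with_fair_die(rolls):
    """Return (chi-square statistic, surplus area) of rolls against a fair die."""
    n = len(rolls)
    counts = Counter(rolls)
    expected = n / 6.0
    chi2 = sum((counts.get(face, 0) - expected) ** 2 / expected for face in range(1, 7))
    # Part of the observed histogram that does not overlap the theoretical one.
    surplus = 0.5 * sum(abs(counts.get(face, 0) / n - 1.0 / 6.0) for face in range(1, 7))
    return chi2, surplus


def verdict(chi2):
    if chi2 > CHI2_UPPER:
        return "too far from a fair die"
    if chi2 < CHI2_LOWER:
        return "suspiciously close to a fair die"
    return "consistent with chance"


too_even = [1, 2, 3, 4, 5, 6] * 10           # hypothetical outcomes "chosen at will"
loaded = [6] * 20 + [1, 2, 3, 4, 5] * 8      # hypothetical loaded die
for name, rolls in (("too even", too_even), ("loaded", loaded)):
    chi2, surplus = compare_with_fair_die(rolls)
    print(f"{name}: chi2 = {chi2:.2f}, surplus = {surplus:.3f} -> {verdict(chi2)}")
```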

These measurements are made easier by the regularities revealed by probability theory. For example, if the random variable is constant (X = c), say each face of the die carries the same number (c), then the mathematical expectation μ has that same value, and the dispersion σ² is zero. Generally:

The random variable of the binomial distribution B(n, p) can also be written in the form Bn = I1 + ... + In, where the Ik (k = 1, 2, ..., n) are indicators, off-on switches showing whether the k-th event happened. They are mutually independent and identically distributed: each equals 1 with the same probability p and 0 otherwise. For the arithmetic mean X̃n = Bn/n, Bernoulli's law of large numbers then states that for every ε > 0, P(|X̃n - p| < ε) → 1 as n → ∞.

The following theorem also holds. If the random variables of a sequence are mutually independent, identically distributed, and have finite variance, then as more of them are taken, the probability that their arithmetic mean deviates from their common mean value by more than any fixed amount decreases. The probability of such a deviation tends to zero. This theorem is one of the so-called laws of large numbers; it is neither the most general nor the sharpest among them.
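For the indicator sum Bn, the probability of a deviation can be computed exactly from the binomial distribution, and Chebyshev's inequality gives the simple bound p(1 - p)/(nε²) that underlies one classical proof of these laws. The sketch below compares the two; the helper names are hypothetical and it assumes 0 < p < 1.

```python
import math


def binomial_pmf(k, n, p):
    """P(Bn = k) for Bn ~ B(n, p), computed through logarithms to avoid underflow."""
    log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
               + k * math.log(p) + (n - k) * math.log(1.0 - p))
    return math.exp(log_pmf)


def deviation_probability(n, p, eps):
    """Exact P(|Bn/n - p| >= eps) for the mean of n independent indicators."""
    tol = 1e-12  # guards against floating-point rounding in k/n - p
    return sum(binomial_pmf(k, n, p) for k in range(n + 1)
               if abs(k / n - p) >= eps - tol)


def chebyshev_bound(n, p, eps):
    """Chebyshev: P(|Bn/n - p| >= eps) <= Var(Bn/n) / eps^2 = p(1 - p) / (n eps^2)."""
    return p * (1.0 - p) / (n * eps * eps)


p, eps = 0.5, 0.1  # example values
for n in (10, 100, 1000):
    print(f"n = {n:4d}: exact {deviation_probability(n, p, eps):.3e}, "
          f"Chebyshev bound {min(1.0, chebyshev_bound(n, p, eps)):.3e}")
```

The exact probability falls much faster than the bound, which is one sense in which this law "is neither the most general nor the sharpest".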

Here too, the principle of information minimalism helps, in its own way, to understand the role and power of necessity. As the dispersion decreases, the pull of the "phenomenon" (distribution) toward complete inevitability, that is, certainty, grows, and certainty is reached when the dispersion vanishes (σ² → 0).

Uncertainty is not something that frightens only us (Fear); to paraphrase, it frightens nature itself, just as calmness, that is, inertia, is not something that only the inanimate bodies of physics strive for. Thanks to the law of large numbers, nature could rejoice in the law of conservation, but on the other hand it would avoid crowds, because they increase information.

Equalization »

Question: You write (in a private text) that both concepts, equalization and simultaneity, are equally opposed to scattering. Can you explain that to me?

Answer: It becomes clearer when we look, on the one hand, at the probability distributions mentioned above and similar ones, with their dispersions σ² around the mean values μ, and on the other hand at quantum entanglement. A law that repeats always and everywhere (say, the law of conservation) looks to us, at least at first glance, like an absence of statistical deviations, and also like simultaneity.

Equating "lawfulness" with reduced dispersion (scattering, options) is understandable, but equating it with shortened time intervals would rescue the "locality" for whose sake physicists once refused to accept quantum entanglement. That is why I "went wild" in that discussion and tried extreme situations.

This topic is not closed; it has only been opened, together with the questions "what is time?" and "what is simultaneity?". Describing the "simultaneity" of some fictitious "time" as formally equal to the consistent repetition (application) of any strict regularity (such as 2 + 2 = 4) demonstrates the correctness, the advantage, and perhaps the necessity of such a view of things.

Another question is how such "time" connects with our usual understanding of time. In his Special Theory of Relativity (1905), Einstein defined simultaneity within a system that moves uniformly, that is, inertially. Such would be, for instance, the places reached at the speed of light around a given place. However, in the General Theory of Relativity (1915) there is no such simple possibility. Let us add that the course of events in gravitational fields does not require a global notion of simultaneity, at least not directly.

If we accept that this new notion of simultaneity is not contradictory, and understand why we still grasp it poorly, we notice that science, and mathematics before it, is full of "timeless" claims which we consider "absolute truths". Their dispersions are small, in fact zero, and so is their development, i.e. duration. Moreover, the notion of action (energy × time) can be extended to such claims.

Saying that a theorem is valid for an infinitely long time amounts to the same as saying that it is valid for an infinitely short one. In the first case, it is information without energy: subtle, omnipresent, and without momentum. In the second case, lawfulness is an obstacle of infinite energy that chance cannot bypass.

Locality »

Question: How do you intend to support the idea of "locality" in physics despite quantum entanglement?

Answer: It is an idea still under trial. Locality is an area, a neighborhood, while in physics it is the gradual spreading of action, or information, limited above all by the speed of light (c ≈ 300,000 km/s) in space.

The novelty of information theory is adding time dimensions to space (Dimensions). Once we are not squeezed into a single time dimension, the notion of "simultaneity" becomes looser, and additional possibilities open up for gradual spreading along pseudo-temporal axes.

Since the birth of quantum mechanics in the early 20th century, the "abstract" operators of energy (Ẽ = iℏ∂t) and momentum (p̃ = -iℏ∂x) have been widely used in it, while the theory of relativity treats time as the path (ct) that light travels in the given time. To this we "only" add that time is a kind of road and that such "roads" exist in pseudo-reality. With respect to their "distances", events can be local, even though we see them differently within one reality.
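As a small worked illustration of how these operators act (a standard textbook check that I add, not part of the original argument), take a free-particle plane wave whose momentum is p and energy is E:

```latex
\psi(x,t) = e^{i(px - Et)/\hbar}, \qquad
\tilde{E}\,\psi = i\hbar\,\frac{\partial\psi}{\partial t}
  = i\hbar\left(-\frac{iE}{\hbar}\right)\psi = E\,\psi, \qquad
\tilde{p}\,\psi = -i\hbar\,\frac{\partial\psi}{\partial x}
  = -i\hbar\left(\frac{ip}{\hbar}\right)\psi = p\,\psi .
```

The single exponent px - Et pairs position with momentum and time with energy, which is the kind of correspondence between space and time, and between momentum and energy, that the text relies on.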

We see this correspondence, the transition from space to time (x → t) and back, together with the matching transitions between momentum and energy, everywhere in physics. The difference arises when their classical abstractness is turned into the concreteness of information theory. The momenta px, py and pz along the x, y and z coordinate axes, like the energy along time t, obtain duals in energies along the new pseudo-temporal (imaginary) axes. Their projections onto our time are the energies as we know them.

Multiculture »

Question: I like this explanation of democracy of yours; it hits the mark, and it also seems unusual to me. Isn't it a little unfinished? (a colleague asks me, anonymous for now)

Answer: Yes, you are right: I am theorizing without emphasizing, say, the moment in time, the circumstances, and the character of the people. Nor do I list the differential equations of motion, I would add, for example, but it is not possible to cover everything in one story.

Western nature has the same traits as the Eastern one, only in a somewhat larger amount: the will to conquer, to dominate, to enslave. This could be an inner need because of which they, before others, embraced capitalist expansion to the point of corrosiveness, the desire for more and more beyond measure. Easterners overvalue statics and Westerners overvalue dynamics: the former balance, nirvana and inner peace, the latter expansion and new victories, like sharks that would suffocate if they stopped moving.

The Western Inquisition, the persecution of witches, or the idea of one will, one religion (Catholic, Islamic, whatever), one nation and, if you like, one ruler, as the West imagines it, would hardly take hold in the East. When describing Stalin, Ivan the Terrible, or Genghis Khan, Westerners exaggerate atrocities that cannot be confirmed by historical documents, because they project their own nature onto such figures.

Russia, for example, is a mixture of peoples unthinkable in Western countries. Although Westerners "fantasize" about multiculturalism (again in their possessive style, with their own "excellence" and embellishments), they fail to notice that Eastern countries have more of it and assimilate other peoples less than they do, and especially that there are no such problems between Orthodoxy and Islam as there were, or will be, in the West. The hallmark of the West, even before capitalism (the latter in the sense of Karl Marx), is expansion, development and excess.

We see this with transgender people, in the absurd "development" of the idea of gender equality, and perhaps in the use of women in war, although the very idea of female warriors actually came to them from Soviet role models. Women were given the right to vote in 1917 in Russia, in 1920 in the United States, in 1944 in France and in 1971 in Switzerland, I would add. It does not matter that the West is late with ideas of equality, because it then goes further, just as the East is late in responding to Western aggression, but usually defends itself.

There is still much to say, but I prefer to stick to theory, to issues that could remain relevant for centuries. Abstract, dry and hard topics may interest many, but perhaps me a little more than others.

Obsolescence »

Question: A lie is attractive but weak, you say. How does that relate to uncertainty?

Answer: An insightful question, unusual and interesting. First recall that the swap of True and False is a bijection that maps the set of all truth tables of logical operations onto itself; under it, among other things, tautologies (always true statements) become contradictions (always false statements) and vice versa.
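A minimal sketch of this map for the 16 binary operations, assuming "swap True and False" means renaming the two values everywhere in a table, in the inputs as well as in the output (my reading, not necessarily the author's exact construction). The names `swap_truth`, `table` and the operation constants are hypothetical.

```python
from itertools import product

# Input rows of a binary truth table: (F,F), (F,T), (T,F), (T,T).
ROWS = list(product([False, True], repeat=2))
# All 16 binary truth tables, each given by its output column.
ALL_TABLES = set(product([False, True], repeat=4))


def table(op):
    """Output column of a binary logical operation."""
    return tuple(bool(op(x, y)) for x, y in ROWS)


def swap_truth(tbl):
    """Rename True <-> False everywhere in the table (inputs and output)."""
    lookup = dict(zip(ROWS, tbl))
    return tuple(not lookup[(not x, not y)] for x, y in ROWS)


AND = table(lambda x, y: x and y)
OR = table(lambda x, y: x or y)
TAUTOLOGY = table(lambda x, y: True)
CONTRADICTION = table(lambda x, y: False)

print("AND is mapped to OR:", swap_truth(AND) == OR)
print("tautology is mapped to contradiction:", swap_truth(TAUTOLOGY) == CONTRADICTION)
print("bijection of all 16 tables onto themselves:",
      {swap_truth(t) for t in ALL_TABLES} == ALL_TABLES)
```

Negating only the output column is another such bijection (it sends AND to NAND); it too turns tautologies into contradictions.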

So there is an equivalence between the "world of truth" and the "world of lies". Moreover, every untruth can be uncovered with more or less effort, so both worlds can be considered aspects of the same thing. However, what would be provably impossible will not happen, which means that real events, those that science and mathematics deal with, belong to the "world of truth". Such is the nature of information, and hence of uncertainty.

Untruth is suppressed truth and therefore weak when it collides with truth, but it is attractive because of the principle of information minimalism. Having established that, we notice that obsolescence relates to development as a lie relates to the truth, as lesser action to greater. The corny, the worn out, the routine, and the outdated are expressions of weaker progress, of less uncertainty. An aggressive economy will even plan the obsolescence of, say, technical goods, so that customers discard old products on time and as soon as possible, avoiding delays in production and profits.

We see the strength of youth overcoming old age in the rise and fall of the vitality of various forms of production, associations, civilizations, and kinds of life on Earth. That may be why Western civilization (Multiculture) clings feverishly to development: it recognizes the power of agility while underestimating the value of consumption, unlike the East, which overvalues stability. The forces of uncertainty should be reckoned with because of the information of perception (Action), but also because of some long-known phenomena in physics. Left to themselves, without the opposing tendency of minimalism, they would destroy this world.

For example, the jittering of gas molecules in a vessel defines their heat and temperature. This motion is passed on through interactions between the molecules, so we can speak of their communication. The more intense these interactions, the greater the uncertainty of their oscillations, the more they have to pass to one another, and the greater the pressure the molecules exert on the walls of the vessel. However, these motions will also take every opportunity to calm down. A lone molecule in a vacuum, without others around it, will eventually just radiate away its energy, losing that uncertainty.
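The link between the spread of molecular velocities and pressure can be illustrated with the standard kinetic theory of an ideal gas, a textbook relation swapped in here rather than the author's information-theoretic argument: each velocity component is Gaussian with mean 0 and σ² = kT/m, and the pressure is p = n m ⟨vx²⟩ = n k T. In the sketch below, nitrogen and the number density are assumed example values.

```python
import math
import random

K_B = 1.380649e-23                    # Boltzmann constant, J/K (exact SI value)
M_N2 = 28.0134 * 1.66053906660e-27    # mass of an N2 molecule, kg (assumed example gas)


def sample_vx(temperature, mass, count, rng):
    """Gaussian x-velocities with mean 0 and spread sigma = sqrt(kT/m)."""
    sigma = math.sqrt(K_B * temperature / mass)
    return [rng.gauss(0.0, sigma) for _ in range(count)]


def pressure_from_velocities(n_density, mass, vx_samples):
    """Kinetic-theory pressure p = n m <vx^2> from sampled x-velocities."""
    mean_sq = sum(v * v for v in vx_samples) / len(vx_samples)
    return n_density * mass * mean_sq


rng = random.Random(42)
n_density = 2.5e25  # molecules per m^3 (hypothetical, roughly room conditions)
for T in (150.0, 300.0, 600.0):
    vx = sample_vx(T, M_N2, 100_000, rng)
    p_kin = pressure_from_velocities(n_density, M_N2, vx)
    print(f"T = {T:5.0f} K: sigma = {math.sqrt(K_B * T / M_N2):6.1f} m/s, "
          f"p = {p_kin:.4e} Pa (ideal gas n k T = {n_density * K_B * T:.4e} Pa)")
```

A wider velocity spread σ means a higher temperature and, at the same density, a proportionally higher pressure on the walls.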