November 2021

Growing

Question: Could this be: "Black holes may be growing as the universe expands"?

Answer: It could. The universe is expanding because the information of substance (fermions) is slowly merging into the information of space (bosons); there are fewer events, time slows down, and bodies become more inert. I took the picture on the left from that news item, and I used it before that in my article (LinkedIn).

Information is the fabric of space, time and matter, and it is measured by uncertainty. Because of the latter, and because nature more often realizes the more probable outcomes, there is a general spontaneous tendency towards less emission of information (greater certainty). This principle is limited by the law of conservation of information, and so the universe lasts. These are the three pillars of my information theory, its principles.

Physical systems spontaneously evolve into less communicative ones, while "avoiding" the law of conservation so that the particles of the substance (on average) are more likely to "decompose" into particles of space, than vice versa. That is why the universe is expanding, there is more and more space, and at the same time we can say that "space remembers" because it is a condenser of information. Due to the same law of conservation, this "stacked memory" in its own way affects the present, but so that the overall intensity of communication is always the same.

We measure time by the outcomes of random events. As there is less and less substance that could "melt", it is less likely that the deceleration of time accelerates, but it is possible that the speed of light keeps decreasing. Therefore, integral calculus will show that the (average) speed at which galaxies move away from us is not simply proportional to their distance, but that they accelerate.

This information theory is also harmonized with thermodynamics, because it implies that the spontaneous increase in the entropy of a given system decreases its information, and that the known laws of entropy do not apply to space. It is harmonized with all other branches of physics in which the "principle of least action" applies, because that principle is a special case of the "principle of least information". Finally, the (hypo)thesis from the news quoted in the question, that the masses of black holes increase with the very duration of the universe, is in line with it.

Question: Can you say something else about this that you have never done before?

Answer: Yes, of course, there is always something new to say; there is a lot more that has not been said than what has been presented to the public. Such is the nature of research. For example, you could declare that the world we live in was created only once, and that everything we do afterwards is just re-creation. That it is not possible to create anything essentially new in the universe, anything that has never happened before.

However, from what has been said about information theory, we see that this thesis needs to be reconsidered. From the very initial idea that uncertainty is the essence of information, and that information is the basic tissue of the universe visible to us, there is a doubt in the claim that everything we need could be given in advance. Unless we doubt the objectivity of the uncertainty.

This doubt is then reinforced by the derived thesis about the creation of natural laws through the duration of the universe. Because physical states spontaneously flow into less informative ones, i.e. more regulated or more densely regulated ones, it follows that certainties (new realities) are created from "pure possibilities" (phenomena that never were).

In that sense, the universe is still in the creation phase, and I don't think anyone before me has ever (publicly) said that in that way.

Question: Is the universe in a constant state of creation?

Answer: Yes, it goes in the package of "objectivity of uncertainty". When you think a little deeper about what the alleged objective uncertainty could be, and if it concerns the universe in which we live, you will notice that it is the easiest to understand as I say, that unpredictability is both relative and objective.

Moreover, with all the local periodicity, the global picture is constantly changing (Multiplicities). There are no two identical 4-D universes at two different times, including all those additional dimensions of time that physics does not know today.

When a hunter stalks prey and devises traps, his plan is uncertain to the prey; the cunning man is unpredictable to the victim. The same holds when you weigh an object given to you, before you inform the client of the result, or in the period before a mathematical theorem is discovered and first proved, when you live without adequate knowledge of the required statement.

Uncertainty is so layered and omnipresent in its own way that it forces us to communicate, because without information existence is not possible, and it is impossible to know everything. Physical particles, bodies and living beings interact because of it. Hence the multiplicity of this world.

On the other hand, when we go into details, we come to "pure uncertainty" in the form of a quantum of action, which I wrote about earlier (e.g. Information Stories, 1.14 Emmy Noether) as the inevitable non-zero minimum amount of "free information".

However, the quantum also has parts (position, speed, frequency, etc.), but because by removing the part of uncertainty we get certainty, it (the least physical action) will not be the end of the story of information.

Thermal bath

Question: Could this be (New theory), that "dark matter came from regular matter"?

Answer: It is a step closer to the truth, that dark matter is actually the gravitational action of regular matter, from the past to the present. This is if (my) "information theory" is correct, or especially if "space remembers" in such a way that it can act from the past to the present.

Question: How do you prove that space remembers and acts as you say?

Answer: In many ways. For example, by the rotation of the perihelion of Mercury's elliptical orbit around the Sun, or (similarly) by observing the "irregular" way matter moves around the centers of galaxies (their central black holes). By the way, any gravitational action of mass, traveling at the speed of light (300,000 km/s), is always an action from the past to the present. Read also my recent answer (Dark Matter).

I wrote a little more about this, and still quite understandably (popularly), in the volume "Information Stories", in the title "3.30 Delayed Gravity".

There is also evidence coming from completely different directions, for example from the vacuum properties of quantum mechanics. In classical physics, vacuum was considered the absence of matter, light and energy. In quantum physics, the vacuum is not so empty. It is filled with photons that fluctuate in and out of existence. So says the text about a recent experiment that supposedly produces something out of nothing (Something From Nothing), but which actually proves the fullness of space.

Mass

Question: How does your "information theory" explain mass?

Answer: Just like inertia, using the principle of information minimalism. In "Notes I", I dealt with it again. Information has a potential (3. Potential Information, p. 13-16) that can be reduced to Kepler's second law: the radius vector from the Sun to the planet sweeps out equal areas in equal times. This can then be generalized, so that it applies to all central forces.

Hence, we can speak of the "energy of information" (4. Information Energy, p. 17-20), which is, on the other hand, in line with my earlier thesis that the smallest amount of action (quantum) contains the least amount of "free" information. The "unfree" ones would be position, momentum and other fragments, the concepts that make up quanta. Such are also the so-called universal truths, e.g. those we know from mathematics.

I state this to underline the earlier thesis that mass belongs to an entity (particle) which has its own time, with a flow unlike that of a photon, and which additionally "gets stuck" in space-time, consistent with principled information minimalism. It turns out that this is a mechanism analogous to the Higgs mechanism (Higgs boson), which described mass formation long before me and was recently confirmed at CERN, but in which the principle of information is not mentioned.

Question: You write about the alleged energy of information, does that mean that it is not real?

Answer: No, on the contrary, the notion of information is broader than physical energy. We can understand information equally, say, through game theory, and not only through modern mechanics (quantum and relativistic).

For example, consider uncertainties that could interfere with von Neumann's minimax theorem (Capablanca), and which would arise from a lack of time. With enough time to consider all the options in some open game to which von Neumann's strategy applies, we choose the move that leaves the opponent the worst opportunity to respond. But as the complexity of the game grows, the time required increases, and we work from memory and deal with estimates.

Imagine the total uncertainty of one move of a given von Neumann game as the area of a circle of unit radius. The time allowed for the move (t) covers part of that maximal circle with a concentric circle of smaller radius (r < 1). More time given for thinking leaves less uncertainty (η) and, if we compare this with Heisenberg's uncertainty relations, their product will be a constant (τ = ηt), like a quantum of action.

The lower the uncertainty, the less information we can get from it, the fewer events, outcomes, and the slower the passage of time. I remind you that in information theory, I interpret time as the realization of random events. If we compare this (t = τ/η) with Einstein's relativity, we find that the mentioned constant (τ) is its proper (own) time, and the unknown part of the game (η) is inversely proportional to the Lorentz gamma coefficient (γ = 1/η).

In a recent answer (Action) you will find that with higher S = ax + by + cz + ..., i.e. with higher "information of perception", there is a greater force, physical force, which is then a confirmation of the correctness of the definition of S, but also these stories themselves.

Ruler

Question: Who rules the universe?

Answer: That is one of the questions I have been asked about God (Religion), but I will steer it toward the question of free will. More precisely, toward information, whose essence is uncertainty that contains energy.

Namely, what we call "destiny" also governs our lives, and other lives and other phenomena depend on our decisions. If we could know the correct answers to all questions, that is, really control our destiny to the end, not only would we know all the laws of the universe, have insight into all its outcomes, but also actively participate in all its phenomena. We would then be the rulers of the universe.

In other words, to the extent that we have control over the "uncertainty" around us, we also have control over the universe. We have as much energy as we can manage physical phenomena, so the amount of uncertainty, i.e. information boils down to energy consumption.

That the mentioned "information" is exactly the one from the answer about physical action (Action), in the series of questions about the "information of perception", we see from the calculation there: greater information carries greater physical force. We also recognize a similar connection between energy and information in my article on Quantum calculus.

So, the one who would manage the universe would have to have the energy, that is, the force that all the universe has in total. In the ancient sense, he would rule the "cosmos" (Cosmos) as much as he would rule the "chaos", and in the modern sense he would have as much energy as he could not have as separated.

Question: You didn't answer my main question, about religion?

Answer: Well, I don't intend to. But notice that mathematics itself is a kind of dogma, admittedly indisputably true but equally unprovable (Gödel’s Incompleteness Theorems). The situation is no better with physics, which has its own "logical" closed system that relies only on material measurements, on physical experiments, and accepts nothing beyond their reach as "reality".

There are also philosophical systems with a strong enough internal "logic", often unacceptable to scientists and mathematicians, but which can be respected as a kind of "perfect" system of thinking, not always in terms of mathematical or physical logic, but irrefutability within their own methods. Not far from them is the "Theory of Stupidity".

Information theory encompasses many of these. I wrote that what can be proved impossible will not happen. In other words, what could happen is true. A lie is a hidden truth, and the dichotomy is not real at all (Information Stories, 3.7 Dualism Lies, 3.20 Dichotomy). I mean, the dogmas of the universe overlap.

Science reveals love as a chemistry (Why love really is a drug), but also the bipolar behavior of schizophrenics, such as voices in the head, or unreal representations, disorders related to the senses (listening to colors), prove to be physical. Information theory treats such phenomena as "reality", as a type of information, and it considers its position to be an extension of the scientific understanding of reality.

Question: If by knowing the universe you become a universe, then how are different understandings of the same possible?

Answer: Exactly. Knowing and mastering its various aspects implies the adoption of more and more of them. It is not just one version, one system of mathematical truths, measurable physical substances, dogmas, insight without action, but "all that" and always more than that.

Meaning

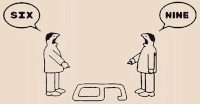

Question: What do you mean by "ambiguity of meaning" in exact science?

Answer: In the picture on the left, while one man sees a six, the other sees a nine, and both are right. There are many examples of ambiguity in literature and art, but we rarely transfer them to physics, and even less often to mathematics. However, for a theory that claims to consider information as the fabric of the cosmos, the transfer of "meaning" to "physics" is inevitable.

A deeper example of the "ambiguity of meaning" is the intersection of a cone and a plane, which, as we know, can be an ellipse, with the corresponding equation from analytical geometry ($x^2/a^2 + y^2/b^2 = 1$). In general, such examples are all theorems that can be proved in different areas of mathematics, and which we consider to be the same statements in different domains.

Communication is also an example of changing perceptions of the world around us. If we were the same before and after receiving the information, the information we allegedly obtain would be unreal. It’s the same with interactions. They, the physical particles, then change their paths, making the role given to them in the world different, that is, "considering" the world different.

Each, supposedly the same object, before and after the interaction of a given subject, will "look" different to it. Not to mention then the appearance of such an object for different subjects. It is a novelty that "information of perception" brings to physics, its inseparability of subject and object, that the difference in understanding the world is a reality and that mathematics itself supports this, for now still an idea difficult to accept.

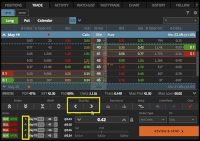

Unitary

Question: How can you present nebulous theses, such as "the same phenomenon for two subjects is not the same", through something as exact as mathematics? It is known that if "2 + 2 = 4" holds for one person, then it is not three or five for someone else, right?

Answer: Okay, I'll explain. We represent a process, say the quantum evolution of quantum states, by a mapping U: x --> y, where the unitary operator U maps the first state x to the second state y. The same mapping is written U(x) = y, or as the multiplication Ux = y of the first vector (x) by a matrix or operator (U) to obtain the second vector (y). The image is from "The Philosophy", Tim Maudlin.

These so-called unitary operators are linear, reversible (invertible), and almost self-inverse (involutive). Linearity means that A(αx + βy) = αAx + βAy for each pair of scalars α and β; reversibility means that for A there exists a corresponding inverse (unitary) operator $A^{-1}$ such that $x = A^{-1}y$.

Full involutivity would be AA = I, where I is the unit (identity) operator, which does not change the state. For unitary operators, however, the second factor is in general A†, the conjugate transpose of A, so that A†A = I, because the original contains complex coefficients that must be conjugated. These are elements of the mathematics of quantum mechanics.

However, the unit operator (which is, of course, unitary) can always be written, in different ways, as a product of unitary operators, say I = AB. It is also always possible to decompose any unitary operator further into unitary factors, A = CD. Thus a constant state for one observer, Iy = y, becomes something else for another observer, Iy = ABy = Ax, where x = By.

The math is okay. Thus the decomposition (Components) of information enters into information theory. Namely, the norm of a unitary operator is one, |U| = 1; in fact, it is their definition that the norm of the processed state remains unchanged, |y| = |Ux| = |U||x| = |x|, which means that the law of conservation also holds.
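
The decomposition I = AB and the conservation of the norm can be checked numerically. What follows is only a minimal sketch in plain Python, under stated assumptions: the 2x2 operator built by `rotation_with_phase`, the angles 0.7 and 0.3, and the state `y` are hypothetical illustrations chosen here, not taken from the text or the cited image.

```python
import cmath
import math

def matmul(P, Q):
    """Multiply two 2x2 complex matrices given as nested lists."""
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def apply(P, v):
    """Apply a 2x2 matrix to a two-component state vector."""
    return [sum(P[i][k] * v[k] for k in range(2)) for i in range(2)]

def dagger(P):
    """Conjugate transpose (the operator written as A-dagger in the text)."""
    return [[P[j][i].conjugate() for j in range(2)] for i in range(2)]

def norm(v):
    """Euclidean norm of a complex vector."""
    return math.sqrt(sum(abs(c) ** 2 for c in v))

def rotation_with_phase(theta, phi):
    """Hypothetical example of a unitary operator: a rotation times a phase."""
    p = cmath.exp(1j * phi)
    return [[p * math.cos(theta), -p * math.sin(theta)],
            [p * math.sin(theta), p * math.cos(theta)]]

A = rotation_with_phase(0.7, 0.3)
B = dagger(A)        # for a unitary A, the inverse is its conjugate transpose
I = matmul(A, B)     # the unit operator written as a product, I = AB

y = [0.6, 0.8j]      # a normalized state, |y| = 1
x = apply(B, y)      # the intermediate state x = By of the "other observer"

print(I)                  # numerically the identity matrix
print(norm(y), norm(x))   # both norms agree up to rounding
```

The state x differs from y component by component, yet Ax returns y and both have the same norm, which is the sense in which the same constant state can look different to two observers while the conservation law still holds.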

Now, due to the "objectivity of uncertainty", as soon as the two subjects are different, they have different points of view, but also different perceptions of "the same" object. This difference comes from the very limited speed of light, and then from the relativistic effects of, say, the slowed-down time flow of a moving object.

Different

Question: Can you give me a physical phenomenon where two people see the same "physics" differently?

Answer: Yes. Let's say I'm looking at you and you're looking at yourself. You are at least a meter, or several meters, away from me, so while your light travels to me (at speed c = 300,000 km/s) time passes, and my view and yours are not observations of the same you. I look at your past, you look at the present. And since there is objective randomness, you in your past have never been "exactly the one" I saw as "you" in the past.

Moreover, the same holds when you look at yourself from head to toe: you do not see yourself in one and the same present. Namely, by the time the light travels from the heel to the head, some time will pass, enough that the head and the heel are not in the same time.
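
To get a feeling for the size of these delays, here is a minimal Python sketch. The function name `light_delay_ns` and the 1.7 m heel-to-head height are illustrative assumptions, not values from the text.

```python
# Speed of light in vacuum, in meters per second
# (the text rounds it to 300,000 km/s).
C = 299_792_458.0

def light_delay_ns(distance_m):
    """Time, in nanoseconds, that light needs to cross distance_m meters."""
    return distance_m / C * 1e9

# An observer one meter away sees you roughly 3.3 nanoseconds in the past.
print(round(light_delay_ns(1.0), 2))

# Hypothetical 1.7 m from heel to head: also a delay of a few nanoseconds.
print(round(light_delay_ns(1.7), 2))
```

The delays are tiny on a human scale, but they are not zero, which is all the argument needs.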

Question: When is the perception information invariant?

Answer: That's a good question. Just as the body's proper (own) energy in the coordinate system in which it is located differs from the relative energy of that body observed in motion, so we distinguish the information of perception of different observers.

However, for the same observer (coordinate system) consistency applies: a moving body will have more energy, by the amount of kinetic energy its motion adds, and we have something similar with information.

We express the consistency of a given observer by the product of co- and contravariant vectors (Notes II, Variant vectors). In the image of the oblique Cartesian system we see the perpendicular projections A(x',y') and the parallel projections A(x,y) of the same point on the abscissa and the ordinate. We call the former covariant and the latter contravariant coordinates.

The product of the co- and contravariant coordinates is invariant (does not change) under a change of coordinate system, and this property is an expression of the law of conservation, both in the case of energy and in the case of the coupled information. It expresses the intensity, in this second case the "self-information", the coupling of information as a state and as a process.
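
The invariance of this product can be illustrated with a short Python sketch. It assumes a planar oblique system with unit vectors along the axes, the first axis along the Cartesian x direction and the second at an angle `omega` to it; the names `oblique_components` and `coupled_product` and the sample point (3, 4) are hypothetical.

```python
import math

def oblique_components(px, py, omega):
    """Return (covariant, contravariant) coordinates of the point (px, py)
    in an oblique system whose unit axes meet at the angle omega (radians)."""
    e1 = (1.0, 0.0)
    e2 = (math.cos(omega), math.sin(omega))
    # Covariant: perpendicular projections onto the axes (dot products).
    cov = (px * e1[0] + py * e1[1], px * e2[0] + py * e2[1])
    # Contravariant: parallel projections, the coefficients in A = x1*e1 + x2*e2.
    x2 = py / math.sin(omega)
    x1 = px - x2 * math.cos(omega)
    return cov, (x1, x2)

def coupled_product(px, py, omega):
    """Sum of products of covariant and contravariant coordinates."""
    cov, contra = oblique_components(px, py, omega)
    return cov[0] * contra[0] + cov[1] * contra[1]

# The same point seen in differently skewed systems.
for omega in (math.pi / 2, math.pi / 3, 2.0):
    print(omega, coupled_product(3.0, 4.0, omega))
```

For every angle the product equals the squared distance of the point from the origin, so it does not depend on how oblique the chosen system is.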

Values

Question: Information is a number?

Answer: Yes, everything revolves around numbers, and more broadly it is a quantity (scalar, vector, tensor). For example, for Hartley's information (Physical information), the logarithm $H = \log_b N$ of the number N = 1, 2, 3, ... of equally probable outcomes, we can say that the information is the length of the record needed to write N numbers. The base b of the logarithm is then also the base of the number system.

In a binary system, b = 2, with say three digits, zeros or ones, it is possible to write $2^3 = 8$ different numbers, from zero to seven, which are in order: 000, 001, 010, 011, 100, 101, 110 and 111. In general, with H digits in base b it is possible to write $N = b^H$ numbers.
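
The counting above can be reproduced with a few lines of Python. This is only a sketch; the helper names `hartley_information` and `all_records` are made up for the illustration, and the record builder assumes a base of at most ten so that each digit is a single character.

```python
import math
from itertools import product

def hartley_information(n_outcomes, base=2):
    """Hartley information H = log_b(N) of N equally probable outcomes."""
    return math.log(n_outcomes, base)

def all_records(digits, base=2):
    """Every record of the given length in the given base, in counting order
    (assumes base <= 10 so that each digit is one character)."""
    return ["".join(str(d) for d in rec)
            for rec in product(range(base), repeat=digits)]

records = all_records(3)
print(records)                            # the eight three-digit binary records
print(hartley_information(len(records)))  # close to 3 (bits), up to rounding
```

Changing `base` and `digits` shows the general rule: the number of records is the base raised to the number of digits, and the logarithm recovers the number of digits.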

Question: What sizes can be reached with that?

Answer: We extend this idea to cardinals, infinite numbers (Continuum). If we knew that there is a continuum of possibilities, at least pseudo-real ones, then our reality would be one piece of information that can be understood as a countably infinite set of events, of cardinality $\aleph_0$ (aleph-zero), which is realized in a discrete sequence of outcomes. Formally, for the continuum we then write $\mathfrak{c} = 2^{\aleph_0}$ in binary, or $\aleph_0 = \log_2 \mathfrak{c}$ bits.

But effectively, if the reality of an individual subject consists of a (countably) infinite series of events, of cardinality $\aleph_0$, within which there is an infinite subset of events that all have alternatives (they are random), then the total cardinality of the alternatives is $\mathfrak{c}$. There is a continuum of them, (un)realized (Waves).

Question: Is countable infinity the maximum?

Answer: No, we notice that it is possible to join a subject to each of our alternative realizations, that each type is a possible "observer", and that our reality itself is a kind of continuum supplemented by them. This is a consequence of the objectivity of uncertainty and the answer of "information theory" to the dilemma of today's physics "is space-time a continuum" (Discrete or Continuous).

If our reality consisted of at most countably many possibilities, the realization of each could be real for some individual, and his alleged world could be deterministically arranged. For such individuals, there would be no principled objectivity of uncertainty.

Question: What, then, is greater for us than countable infinity?

Answer: It is further possible that each of these, immediate alternatives, has its own alternatives or realities, so in fact the world of pseudo-reality is much larger. It is greater in the sense that it has possibilities that are not present in our first, immediate alternatives, but that does not mean that it is greater in cardinality. Space-time of parallel realities is at least a six-dimensional continuum, but even such a thing does not make the whole cosmos.

Namely, if our reality, otherwise a 3D present that changes over time, forming a 4D trajectory of space-time events, were like a vehicle that, with a dose of chance, moves through 6D parallel realities as through a static container, then we could bypass Heisenberg's relations (Infinity), at least theoretically. However, they are a consequence of the noncommutativity of the operators of quantum mechanics, so we would have to reject the mathematical setting of quantum mechanics, or deny the algebra itself, and we will not do that.

Question: Okay, and what are the other alleged sizes?

Answer: A special line of spreading the value of information is through "Information Perceptions", to which I often return, not only in this blog. For now, not to be boring, I just remind you that we represent quantum mechanical processes by unitary, linear operators, say A, so that the equation Ax = y, or mapping A: x --> y, represents the evolution of the quantum state x into the quantum state y. Decomposition A = BC is always possible, where B and C are also unitary operators that can represent quantum changes.

In other words, although subject to the law of conservation and discrete (Emmy Noether), any information can be decomposed into (consist of) other information. This paradoxical situation is resolved by the very nature of information, its power in uncertainty. More information means more uncertainty, so the world consists of individuals that are themselves quantized (packets).

A part of such an elementary packet of information must contain less information. It must have less uncertainty, which means there is more certainty. Smaller parts of the elements (atoms) of chance are more and more causal. This can be reconciled with the very law of conservation of information (uncertainty), but because of the problems with discreteness (packets), I assume that, going deeper into the microworld, this theory distinguishes "free" from "latent" information.

Observable

Question: What are observables?

Answer: Observables are measurable physical quantities, such as mass, speed, place, time. If we join coordinates to them, then the vectors of that system become physical states. This is exactly the usual, not to say the only method, of theoretical work in quantum mechanics. Consistently, the projections of the vectors on the coordinate axes are the representations of the measurements.

For example, in the Cartesian rectangular coordinate system Oxyz, let the vector $a = (a_x, a_y, a_z)$ be given. Let us denote its intensity (length) by a = |a|, so that the square of that number is $a^2 = a_x^2 + a_y^2 + a_z^2$. The lengths of the projections are then $a_x = a\cos\alpha$, $a_y = a\cos\beta$ and $a_z = a\cos\gamma$, where α, β and γ are the angles of the vector to the x, y and z coordinate axes. If we substitute these values into the square above, we get $\cos^2\alpha + \cos^2\beta + \cos^2\gamma = 1$.

In other words, if we calculate the probabilities of finding individual observables from the projections, and the system contains them all, then the square of an individual projection (of a unit vector, a = 1) is the probability of finding a single observable of a given state and measurement procedure. In symbols, $P(\xi) = |a_\xi|^2$ for ξ ∈ {x, y, z} respectively, and also P(x) + P(y) + P(z) = 1. A convincing proof of this can be found in the book "Quantum Mechanics", in the section "1.1.6 Born rule".
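
A small numerical check of the direction-cosine identity, and of reading the squared projections as probabilities, might look like this in Python. The function name `projection_probabilities` and the sample vector (1, 2, 2) are hypothetical, and the sketch is restricted to real vectors.

```python
import math

def projection_probabilities(a):
    """Squares of the direction cosines of a real vector a."""
    length = math.sqrt(sum(c * c for c in a))
    return [(c / length) ** 2 for c in a]

# Hypothetical state vector; it is normalized inside the function.
probs = projection_probabilities((1.0, 2.0, 2.0))
print(probs)        # one probability per coordinate axis
print(sum(probs))   # adds up to 1, up to rounding
```

Whatever nonzero vector is passed in, the three numbers are non-negative and sum to one, which is what allows them to be read as probabilities.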

Consistent with this, we interpret the Information Perception S. Then we have "simultaneous projections" of a given vector onto a vector $b = (b_x, b_y, b_z)$ of intensity b = |b|, whose square is also the sum of the squares of its components, so that $S = a_xb_x + a_yb_y + a_zb_z$. The information of perception is the measure of the "strength of the coupling" of the two vectors.

Because of Schwarz's inequality |S| ≤ |a||b| (1.312 in the mentioned book), for unit vectors we will have |S| ≤ 1, which opens the possibility of interpreting the information of perception as a "probability of coupling". A larger number (S) corresponds to a more certain association of the states represented by the vectors (a, b), which agrees with the "probability principle" (more probable outcomes are more common), so the question arises: where, then, are "classical information" and the "information principle" (nature saves information)?
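
The bound |S| ≤ 1 for unit vectors is easy to try out numerically. The following Python sketch uses hypothetical helper names (`normalize`, `perception_information`) and two made-up real vectors; it illustrates the inequality, it does not prove it.

```python
import math

def normalize(v):
    """Scale a real vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]

def perception_information(a, b):
    """S = a_x*b_x + a_y*b_y + a_z*b_z for two real vectors."""
    return sum(p * q for p, q in zip(a, b))

a = normalize([1.0, 2.0, 2.0])     # hypothetical states
b = normalize([2.0, -1.0, 0.5])

S = perception_information(a, b)
print(S)                             # lies between -1 and 1
print(perception_information(a, a))  # a state coupled with itself gives 1, up to rounding
```

The coupling reaches its maximum when the two states coincide and shrinks as they become more nearly perpendicular.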

We will keep the form of the above descriptions when we move on to complex numbers. In the volume "Notes III" you will find a more decisive treatment of them, at least as far as the physics of "information theory" is concerned. Pay attention there to the "commutators" and the surface area they represent.

Consider one of the summands (k = 1, 2, ..., n) of a slightly more general expression of information perception, $S = a_1b_1 + a_2b_2 + \dots + a_nb_n$. Let its factors be the complex numbers $a_k = A_x + iA_y$ and $b_k = B_x + iB_y$, where $i^2 = -1$ holds for the imaginary unit, while $A_x, \dots, B_y$ are real. We represent these two components in a separate complex plane.

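For the moduli, as well as for these complex numbers themselves (writing $A = a_k$ and $B = b_k$), the equations apply:

$$A^*B = (A_x - iA_y)(B_x + iB_y) = (A_xB_x + A_yB_y) + i(A_xB_y - A_yB_x), \qquad |A^*B| = |A||B|.$$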

We conjugated the first complex number (A) because we are looking for a product analogous to that of co- and contravariant vectors. Based on the answer to the previous question (Different), we expect such a product to be invariant, that is, that the intensity of A*B does not change when the coordinate system changes.

In the expression thus obtained, the first summand, called the "scalar" product ($A \cdot B = A_xB_x + A_yB_y$), will be interpreted as the "probability" of the coupling of the given complex numbers (the given two components of perception information). The second term, which we called the "vector" product, otherwise the commutator $[A, B] = A_xB_y - A_yB_x$, will be interpreted as the "information" of the given coupling.
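
The split of the conjugated product into a "scalar" and a "commutator" part can be checked with Python's built-in complex numbers. The function name `coupling` and the sample values 3 + 4i and 1 + 2i are assumptions made for this sketch.

```python
def coupling(A, B):
    """Return (A* B, scalar part, commutator part) for complex A and B."""
    product = A.conjugate() * B
    scalar = A.real * B.real + A.imag * B.imag       # A . B
    commutator = A.real * B.imag - A.imag * B.real   # [A, B]
    return product, scalar, commutator

A, B = complex(3, 4), complex(1, 2)   # hypothetical components
product, scalar, commutator = coupling(A, B)

print(product)                        # real part = scalar, imaginary part = commutator
print(scalar, commutator)
print(abs(product), abs(A) * abs(B))  # the moduli agree, up to rounding
```

The real part of the conjugated product is the scalar product and the imaginary part is the commutator, while the modulus of the product equals the product of the moduli.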

Area

Question: What do you mean by the "surface area is the information"?

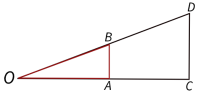

Answer: It goes deeper than it seems at first glance. For example, in pictures like the one on the left, we are used to seeing two similar triangles OAB and OCD. They have equal angles and proportional corresponding sides (those opposite equal angles).

However, we are not used to noticing one interesting consequence of that proportion, which is an example of the two-dimensionality of information. When we observe an object moving away from a given point, it becomes smaller and smaller and we see less and less of it. The angle at which we see it decreases, so that what we see projected on the same plane would be smaller and smaller, but the length of the projection multiplied by the actual distance would be constant.

That's the thing. Our information about and around the object would be constant, but more and more of it would fall on the space in depth between us and the object, and less and less on the object itself. This is a consequence of the above proportion and the fact that the area of a triangle is equal to half the product of the base and the height.

Another example is from the volume "Notes II", entitled "10. Quantity of Options", subtitle "Action", on page 55. A straight line (l) is given along which a point (A --> B) slides at a constant speed, and we observe it from the origin (O) at equal time intervals (AB = d). The distance (h) of the line from the origin is constant, so the area of the observation triangle (OAB) is constant (hd/2).
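
The constancy of the area hd/2 can be verified with a short Python sketch. It assumes, for simplicity, that the line l is horizontal at height h above the origin; the names `triangle_area` and `swept_areas` and the numbers used are hypothetical.

```python
def triangle_area(p, q):
    """Area of the triangle with vertices at the origin O, p and q."""
    return abs(p[0] * q[1] - q[0] * p[1]) / 2.0

def swept_areas(h, speed, dt, steps, x0=-5.0):
    """Areas of successive triangles OAB for a point sliding along the
    horizontal line y = h at constant speed, sampled every dt."""
    areas = []
    for k in range(steps):
        a = (x0 + speed * dt * k, h)
        b = (x0 + speed * dt * (k + 1), h)
        areas.append(triangle_area(a, b))
    return areas

# Hypothetical values: h = 2, speed = 3, dt = 0.5, so d = 1.5 and h*d/2 = 1.5.
print(swept_areas(h=2.0, speed=3.0, dt=0.5, steps=6))
```

Each triangle has the same area even though its shape changes as the point passes by the origin, which is the uniform-motion case of the equal-areas rule.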

From the knowledge that the force is greater the greater the uncertainty that makes up the information (Action) and, of course, the assumed universality of information, follows the interpretation that uniform rectilinear motion is a consequence of constancy of information, i.e. constancy of the surface area of the observed triangle in given conditions (absence of other forces).

In the same picture of the mentioned volume, it is noted that this rule, generalized, becomes Kepler's second law. The planet moves around the Sun so that its radius vector (the segment from the Sun to the planet) sweeps out equal areas in equal times. Moreover, it is proved there that this is a property of all constant central forces.

In short, the surface is information, and information is also a force, so we can study the theory of the forces of physics with the help of geometry. However, this is not the only possible interpretation of either the information or the surface.

Question: Is this thesis about "surface information" consistent with the theory of relativity?

Answer: Of course. In special relativity, relative lengths are shortened (only) in the direction of motion, in inverse proportion to the so-called Lorentz coefficient gamma, and time slows down (Space-Time, 1.1.7 Special relativity). The area we are talking about shrinks by the same factor, and the relative lack of time is the deficit of the reality of the moving system, the loss of its own (proper) perceived "world of events", which is to that extent present in a parallel reality.

It is similar in the general theory. Gravity bends space-time (of an arbitrary fixed observer) so that in a stronger field more of it is absent for the relative observer (outside the field), and that much of it is in a parallel reality. The given information of a given place is there, beyond the scope of the relative observation.

I remind you that "parallel realities" are implied in "information theory" as much as "objectivity of chance". That's why I avoid discussing these topics with ignoramuses on forums.

Concentric

Question: Can you be more specific about that "surface of information"?

Answer: Okay, I hope. Observe the waves of the water surface moving away in concentric circles from the place where it was hit by a pebble, as in the picture on the left. Their speed is the same and, notice, the wavelengths do not change while the amplitudes decrease.

The distance between adjacent concentric circles is determined by the wavelength and it does not change with the propagation of the wave, but the surface of the circular band increases in proportion to the radius, exactly as the amplitude of the water decreases. We should now pay attention to this otherwise new remark, which we do not notice when learning wave mechanics.

The circular wave becomes flatter, we will say by consuming energy by friction on layers of water, becoming weaker at a greater distance, less noticeable, less specific efficiency (per unit area) — until it disappears, in a larger ring of constant width, but so that its total surface area unevenness does not grow.

Imagine then that this uneven surface, which aligns inside the ring of a propagating water wave, is equivalent to the action, or information, that the wave is trying to store (due to the law of conservation). And that example, I hope, is more concrete than the previous triangles (Area).

An example that predates me, which introduces the idea of two-dimensional information, is related to black holes (see also my comment on the origin of the Big Bang universe) and Hawking's famous paradox according to which they "eat information". Namely, for a body that collapses into this strong gravitational field, seen from the outside, time flows more slowly and radial units of length shorten and disappear, while from the point of view of the body itself it stays outside for a short time and quickly finds itself inside the black hole.

For the relative observer, the body flattens over the surface of the sphere around the black hole becoming a new layer of the event horizon, the boundary beyond which nothing can come out of the black hole including light. In this way, all the information of the body remains outside, the law of its conservation remains, and its two-dimensionality comes to light.

Commutator

Question: What do "commutators" have to do with information?

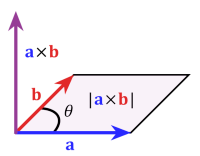

Answer: First of all, the commutator of two plane vectors a = (a_x, a_y) and b = (b_x, b_y) is

$$[a, b] = a_x b_y - a_y b_x.$$

The commutator of two vectors is the intensity of their vector (cross) product, illustrated in the figure, and therefore the commutator is also the surface area of the parallelogram that the vectors form. Hence the commutator is a type of information (Area). However, in addition to these "oriented segments", vectors are also linear operators.

In quantum mechanics, a vector that is a series of "ordinary" coordinates is called a quantum "state", and the (unitary) operator that acts on it (changes it) is called a quantum "process", or quantum evolution. States and processes are dual phenomena of microworld physics just as are vectors with operators whose representations they are.

The commutator of commutative operators is zero, it vanishes, which can be seen from its very definition:

$$[A, B] = AB - BA.$$

For example, if A: x --> 2x doubles the number and B: x --> x + 3 adds three, then the compositions give AB: x --> x + 3 --> 2x + 6 and BA: x --> 2x --> 2x + 3, so these operators do not commute; in particular [A,B](x) = 3 for each x, and we can write [A,B] = 3. A similar example is given by quantum operators, such as those of position and momentum, or of time and energy, whose commutators are (of the order of magnitude of) Planck's constant, with "surprisingly" accurate interpretations in wave mechanics (Wave-particle).
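The arithmetic of this example can be replayed in a few lines. This is only a sketch: the function names `A`, `B` and `commutator` are illustrative, and AB is read as "first B, then A", as in the text.

```python
# Sketch: commutator of two simple maps on numbers, [A,B](x) = A(B(x)) - B(A(x)).

def A(x):
    """Double the number."""
    return 2 * x


def B(x):
    """Add three."""
    return x + 3


def commutator(f, g):
    """Return the map x -> f(g(x)) - g(f(x))."""
    return lambda x: f(g(x)) - g(f(x))


C = commutator(A, B)
values = [C(x) for x in range(-5, 6)]
print(values)  # the same constant for every x

# A map always commutes with itself, so this commutator vanishes.
self_values = [commutator(A, A)(x) for x in range(-5, 6)]
print(self_values)
```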

Combining such interpretations, we get an insight into the equivalence of information with physical action too. Information and action are of the same physical dimensions, because the commutator of quantum operators is such (Planck's constant).

Rotation

Question: Can you tell me the derivation of the formulas for rotation? I find these transformations everywhere, but nowhere their proof. What do they mean in information theory?

Answer: See the proof in my book "Quantum Mechanics", Example 1.1.62. on page 87. It's a classic. You will find an unusual way to do the same in the recent book "Action of Information", entitled "2.5.5 Rotations". Perhaps the great novelty is not the explanation there that physical transformations (to which the law of conservation applies) can be reduced to rotations, as much as the use of "commutators" to derive the required formulas:

with the above marks (Commutator), where the rotation a --> b is by the angle θ, provided the lengths are equal, a = b. As a check, multiply the first equation by b_y and the second by b_x; then, after subtraction, because:

we get the identity 0 = ab cos θ sin θ - ab sin θ cos θ.
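The check described above can also be run numerically. The sketch below assumes the standard counterclockwise rotation formulas and the component form of the vector commutator; the formulas as printed in the cited books may be arranged differently, and the vector `a`, the angle `theta` and the function names are illustrative.

```python
import math

# Sketch under assumptions: standard rotation b = R(theta) a in the plane,
# vector commutator [a,b] = a_x*b_y - a_y*b_x, scalar product a.b = a_x*b_x + a_y*b_y.


def rotate(a, theta):
    """Rotate the plane vector a counterclockwise by the angle theta."""
    ax, ay = a
    return (ax * math.cos(theta) - ay * math.sin(theta),
            ax * math.sin(theta) + ay * math.cos(theta))


def vector_commutator(a, b):
    """Signed area of the parallelogram spanned by a and b."""
    return a[0] * b[1] - a[1] * b[0]


def scalar_product(a, b):
    return a[0] * b[0] + a[1] * b[1]


a = (3.0, 4.0)
theta = 0.7
b = rotate(a, theta)
length_a = math.hypot(*a)
length_b = math.hypot(*b)

area = vector_commutator(a, b)      # expected: length_a * length_b * sin(theta)
overlap = scalar_product(a, b)      # expected: length_a * length_b * cos(theta)

# Multiplying the rotation equations by b_y and b_x and subtracting leaves
# [a,b] cos(theta) - (a.b) sin(theta), which should vanish.
residual = area * math.cos(theta) - overlap * math.sin(theta)
print(length_a, length_b, residual)
```

The residual is the numerical counterpart of the identity with ab cos θ sin θ and ab sin θ cos θ cancelling.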

However, the proof of the rotation formula using the “commutator” should come as no surprise, given that multiplying by a complex number of argument θ represents the rotation by that angle. More important is that the "commutator" represents area, information and action, that information is represented by a surface (Area), and that Heisenberg's uncertainty relations speak of the "excess" of the area, i.e. of information and action.

Namely, if A and B are quantum (unitary) operators, then their commutator applied to the state x, [A,B](x) = ABx - BAx, tells us about the commutativity of the processes (operations) to which a given quantum state is subjected. These processes can again be represented by surfaces, so that in the case of non-commutativity, [A,B] ≠ 0, going first via B and then via A will describe a different arc above the observable (axis) than going first via A and then via B. The representation of the area difference is the commutator, that is, an action.

Unitary operators, as we know, are unit and therefore also some rotations. They are also surfaces, just like the angles can be. In that sense, the rotation of the first vector into the second for a given angle is the area overlapped by two vectors. But that is already a topic beyond the questions you ask me.

Tsunami

Question: I have always been confused by the synchronization of natural phenomena and it seems to me that "information theory" could help a little. Would it?

Answer: Simply put, synchronization is the harmonization of events in a system: doing two or more things at the same time, setting clocks to display the same time, or an activity that leaves files identical in multiple places. But from the point of view of my broader concept, synchronization is a deeper phenomenon.

Gravitational acceleration is the same for tectonic plates, as well as the principle of minimalism of information in general, but they move differently due to "Multiplicities", also a fundamental feature of the world in which we live. The plates are pressing each other and sometimes bounce off causing an earthquake.

A stronger earthquake under the ocean will trigger a huge water wave, a tsunami, which we can understand as a series of chain reactions led by principled minimalism, the law of conservation and the ubiquity of information. The particles of water compressed in a big wave do not have much choice besides handing over their freedoms to the collective, which travels thousands of kilometers unhindered. In contact with diversity, shallow or coastal, water organization changes character. When particles increase the chances of losing vitality, they use those opportunities.

Synchronization is also the harmonization of individuals of the social collective, that we call civilization, which in the absence of external disturbances can also endure. What breaks civilization is "trouble", in fact the opening of other possibilities for individuals to surrender their freedoms, to get rid of them, which then again means faster or slower synchronization in some other associations. And they are guided by principled minimalism, the law of conservation, and the ubiquity of information.

In the book "Physical Information", I demonstrated how it is possible to rearrange Shannon's definition in order to obtain information to which the law of conservation applies. There you will find that from Shannon to Physical Information there is little difference, latent information that is ready to unite a given system into a wider collective, and it will happen when the coupling, the information of perception, is favorable.

Some of that, and in fact the second step in answering the above question, is in the book "Minimalism of Information", in which I dealt more with the mentioned need for relaxation, inertia, i.e. the principle of least action. The third part, the ubiquity of information in the physical world, arises, for example, from the equivalence of information and action, which is the predominant theme of the book "Action of Information".

All in all, as you can see, the better answer to the question posed to me goes beyond the scope of one short correspondence.

Farming

Question: Knowledge is information supplied with meaning?

Answer: An interesting question, a good basis for an "unexpected" answer. Routinely, information is a measure, a quantity of data, so "data" and "information" are the essence and number, respectively. But what if each "essence" is expressed by bijection (mutual unambiguous mapping) with some set of numbers, so the information is the size of some other quantities which are again measures of some third-party information. In short, this question opens up a topic that is not as simple as it seems. Consider it with examples.

On a laying hen farm, some statistician wrote that a hen and a half lays an egg and a half in a day and a half, and we wonder how many eggs one hen lays in one day. Without rushing to a conclusion, we notice that twice as many hens lay twice as many eggs in the same number of days, which here means that three hens lay three eggs in a day and a half. One third of these hens lay one third of the eggs in the same time, i.e. one hen gives one egg in a day and a half. In twice the time, in three days, the hen will lay two eggs, and then in a third of that time, in one day, two thirds of an egg.

| Hen | Day | Egg |
|---|---|---|
| 1.5 | 1.5 | 1.5 |
| 3 | 1.5 | 3 |
| 1 | 1.5 | 1 |
| 1 | 3 | 2 |
| 1 | 1 | 2/3 |

So, the laying capacity of this farm is 2/3 of an egg, or 66.7 percent, per hen per day. We can reach the same result formally, by calculating proportions. The number of eggs is directly proportional both to the number of hens and to the number of days, so we can write x:1.5 = (1:1.5)⋅(1:1.5), whence the same result: the daily laying capacity of a hen is x = 1:1.5 = 2/3 of an egg.

This was the usual calculation of proportions from high school, and below we reduce it to the calculation of "areas". Namely, the numbers of hens and of days are laid out along the abscissa and the ordinate of some (affine) coordinate system, and their product is an area. When we divide the number of eggs by such an area, we get the "specific density" of eggs. In the given case the "density" is (1.5 eggs)/[(1.5 hens)⋅(1.5 days)], and in the required one it is (x eggs)/[(1 hen)⋅(1 day)], so by equating the "densities" we get the proportion above, and hence the earlier result x = 2/3.
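The "density" calculation can be written out with exact fractions. This is a small sketch; the function name `eggs_laid` and its parameters are illustrative, and the reference values are the 1.5 hens, 1.5 days and 1.5 eggs of the density given above.

```python
from fractions import Fraction

# Sketch: eggs per (hen x day) "area", using the reference density from the text.
REF_HENS = Fraction(3, 2)
REF_DAYS = Fraction(3, 2)
REF_EGGS = Fraction(3, 2)


def eggs_laid(hens, days):
    """Eggs laid by `hens` hens in `days` days at the reference density."""
    density = REF_EGGS / (REF_HENS * REF_DAYS)  # eggs per hen per day
    return density * hens * days


print(eggs_laid(1, 1))                # 2/3
print(eggs_laid(3, Fraction(3, 2)))   # 3
print(eggs_laid(1, 3))                # 2
```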

The point of this story will be closer when you look at the examples from my script "Mathematics I" (Example 6.3.3. P. 42), or at least the following. A beam 3 meters long, 20 centimeters wide, 100 millimeters thick costs 2000 dinars. How much will a beam 4 m long, 30 cm wide and 110 mm thick cost?

Calculating the volumes and equating the specific price, dinars per unit volume, we form the equality x/(4⋅30⋅110) = 2000/(3⋅20⋅100), and hence we notice the proportion x:2000 = (4:3)(30:20)(110:100), which agrees with the conclusion that the price is directly proportional to the length, width and thickness of the beam. Either way, the result is x = 4400 dinars.
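The same compound proportion, as a sketch in code. The helper `scaled_price` is an illustrative name; it only assumes, as the text does, that price is proportional to each dimension separately.

```python
from fractions import Fraction

# Sketch: price scaled by the ratio of each dimension (length m, width cm, thickness mm).


def scaled_price(ref_price, ref_dims, new_dims):
    """Scale ref_price by the product of the ratios new/ref of all dimensions."""
    ratio = Fraction(1)
    for new, ref in zip(new_dims, ref_dims):
        ratio *= Fraction(new, ref)
    return ref_price * ratio


price = scaled_price(2000, ref_dims=(3, 20, 100), new_dims=(4, 30, 110))
print(price)  # 4400
```

Because only ratios of like quantities enter, the mixed units (meters, centimeters, millimeters) do not need to be converted.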

When calculating the number of bricks in a wall, we can multiply the number of bricks in one row by the number of rows. A meticulous "physicist" could still say, don't mix apples and oranges (chalk and cheese), but measure correctly — by the same units. Measure the width and height of the wall, then the width and height of one brick, the surfaces are the products of width and height, so divide the surface of the wall by the area of the brick. That quotient is the number of bricks in the wall.

The lesson is that we may exaggerate with the mentioned "units of measures", that we give meaning where it is not necessary. Perhaps we are mistaken in the very assumption that there is a unique, incomprehensible, deeper meaning of the terms we think about, so that "data" can never be the same as "information".

That's the way it is in information theory, I believe. Concepts gain meaning only by combining subject and object (Components), in such a way that they do not have a single meaning, they do not have some absolute universal and for all the same value. This means that by assuming the exact meaning of "this and that", we would come to a contradiction by finding the same "such and such" some other meaning.

There are enough numbers, as well as points (abstract space), geometric shapes (figures), or graphs (with nodes and connections), relations, or forms in general (whether they are known), for bijections with "essences".

Question: When they don't have a unique shape, then they don't have a shape — isn't that too bold a conclusion?

Answer: No, especially if it is an observation from the point of view of one and the same subject. We also saw it in the case of the cautious Louis de Broglie (1924). In his doctoral dissertation, he assumed that all matter also has wave properties, and he could get the idea from Fourier's theorem (1800).

It is a mathematical view that says that a “reasonably” continuous periodic function f (x) can be expressed as the sum of a series of sine or cosine terms, called the Fourier series, each of which has specific “amplitudes” and “phase” factors known as Fourier coefficients (Quantum Mechanics, p.247).

It was later shown that pieces of an arbitrary function can approximate any such function to any accuracy given in advance, and that real trajectories are precisely of this kind. In other words, the paths of real particles can be anything; they do not have a unique shape, and it is only a step from there to the conclusion that they do not have a shape.

As we know, for de Broglie's type of matter, Schrödinger (1926) formed his now famous second-order partial differential equation. Schrödinger's wave equation proved to be one of the most precise tools (of quantum mechanics) that physics had had until then, and the disputed waves proved to be probability waves.

Nearly

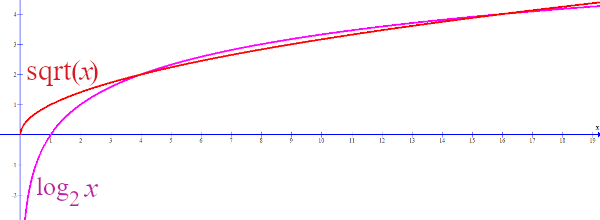

Question: Why do you sometimes use the root instead of the logarithm for simple information?

Answer: Because they are almost equal on the interval where I use them. The root of four is two, sqrt(4) = 2, and so is its base-two logarithm, log2 4 = 2. It is the same with the root and the logarithm of the number 16, whose values are both 4. For the variable x in between, from about 4 to slightly beyond 16, the values of these two functions are close, as in the graph.

The figure shows the mentioned approximation, log2 x ≈ √(x), for all x ∈ (4, 16) which are also the most common values of the number of options in the microworld. That is why things are happening there that are very unusual for the macro world, above all the extreme ignorance and uncertainty about almost all the phenomena around.
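How close the two functions stay on this interval can be estimated with a simple scan. This is a rough numerical sketch; the grid step and the function name `max_gap` are arbitrary choices, not part of the theory.

```python
import math

# Sketch: largest difference between log2(x) and sqrt(x) on the interval [4, 16].


def max_gap(lo=4.0, hi=16.0, steps=1200):
    """Scan an even grid and return (x, gap) where |log2(x) - sqrt(x)| is largest."""
    worst_x, worst_gap = lo, 0.0
    for i in range(steps + 1):
        x = lo + (hi - lo) * i / steps
        gap = abs(math.log2(x) - math.sqrt(x))
        if gap > worst_gap:
            worst_x, worst_gap = x, gap
    return worst_x, worst_gap


worst_x, worst_gap = max_gap()
print(worst_x, worst_gap)
```

The two curves meet at x = 4 and x = 16 and differ most a little above x = 8, by roughly 0.17, which is small compared with values between 2 and 4.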

The result of the deficit of information in its smallest packets, in the quanta of action, is a drastic change of their time, the jump forward with each realization of a random event, relatively large in relation to our point of view, because the number of outcomes defines the speed of the time flow (Space-Time).

And it is precisely the fact, that almost everything to them is unpredictable, that strengthens their isolation. In accordance with the principle of minimalism of information, their tendency to form cycles, to fall into the "traps" of periodicity, will be extreme. Wanting to disappear they will constantly re-emerge, making quanta, and from our point of view the stable, balanced smallest packets (Periodicity).

Plants inhale carbon dioxide (CO2) by releasing oxygen, as opposed to us and animals that inhale oxygen and emit carbon dioxide. It is a biological balance, one of many that exist in the macroworld, but not nearly as represented as in the microworld.

A photon, a particle of electromagnetic radiation (light), has a position, a time, and an electric field which by its motion induces a magnetic field; this in turn induces the electric field again, and so on, which traps the particle in a wave that constantly repeats its primitive processes. Its modest knowledge, the number of options x, is just the occasion for the above approximation of the logarithm of this variable by the square root. And that is that.

Lenity

Question: People are becoming more tame?

Answer: The observation that humans are "domesticated" far precedes the idea that we have evolved. From ancient times we have described ourselves as polite, unlike the creatures of the wild. It is actually an assumption about a process of selection against aggression, like that in domestic mammals, which in humans was self-induced (Self-Domestication). That people are evolving into tame ones is still a hypothesis in official science, while in "information theory" it follows as a thesis from "principled minimalism".

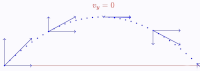

The effect of this principle, the least information (action), can be compared with the permanent gravitational force on the ground, constant acceleration (g = 9.81 m/s²), which reduces the initial velocity of the oblique shot, as in the picture on the left.

We know that the initial velocity of an obliquely thrown stone is the vector v = (vx,vy) with two components. Ignoring the air resistance, we find that the first component (vx), which represents the horizontal velocity of the stone, remains steady during the further flight, while the second, vertical (vy), decreases by gt, where t is the elapsed time. The result is a parabolic trajectory of a given image.
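The oblique shot is easy to put into numbers. This is a minimal sketch without air resistance; the initial velocity `v0` and the function names are illustrative values chosen for the example.

```python
# Sketch: oblique throw without air resistance, launched from the origin.
G = 9.81  # gravitational acceleration in m/s^2


def velocity_at(v0, t):
    """Velocity after time t: the horizontal part is unchanged, the vertical drops by G*t."""
    vx, vy = v0
    return (vx, vy - G * t)


def position_at(v0, t):
    """Position after time t."""
    vx, vy = v0
    return (vx * t, vy * t - 0.5 * G * t * t)


v0 = (10.0, 15.0)        # assumed initial velocity (vx, vy) in m/s
t_top = v0[1] / G        # moment when the vertical velocity reaches zero

print(velocity_at(v0, t_top))
print(position_at(v0, t_top))

# Eliminating t gives the parabola y = x*vy/vx - G*x^2 / (2*vx^2).
x, y = position_at(v0, 2.0)
parabola_y = x * v0[1] / v0[0] - G * x * x / (2 * v0[0] ** 2)
print(y, parabola_y)
```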

Life possesses an excess of information, like a storm that possesses an excess of action, which the principle of the least information (action) consumes slowly, on a daily basis, hardly noticeably, but persistently. In a way reminiscent of the aforementioned gravitational pull.

Living beings renounce their surpluses both for the benefit of the inanimate matter around them and for the benefit of the organization to which they belong. They thus lose personal freedom (amount of options, actions), and in another case they contribute to the collective in the same amount of their appropriate losses. To the extent that they lose from the intellectual, the range of personal freedoms, options and actions, the community gains.

Brain

Question: Have you seen this?

“A surprising fact about humans today is that our brains are smaller compared to the brains of our Pleistocene ancestors. Why our brains have reduced in size has been a big mystery for anthropologists,” explained co-author Dr Jeremy DeSilva, from Dartmouth College. The article (human brains decrease) and the question were sent to me by a colleague.

Answer: Yes, recently and second hand, thanks for the original!

The attachment is a confirmation of expectations from information theory. By organizing, we surrender freedom (the amount of options, information) to the collective, in accordance with the "principle of minimalism of information" and at the same time we evolve into less intelligent ones.

However, before me, it was noticed that tame animals have smaller brains. Domestication reduces the brain, but questions remain as to what extent it affects intelligence, the type of intelligence, and whether it is possible to somehow have it both ways. Of course, no one but me associated it with the "transfer of freedom from the individual to the collective."

Components

Question: What does information "consist" of?

Answer: If you mean classic, technical information, the right place to look for answers is an encyclopedia. In Britannica, picture on the left, for "5 Components of Information Systems" are listed: hardware, software, telecommunications, databases and human resources with procedures.

If you want an answer from my theory, then information, above all, consists of subject and object. It is a number like S = ax + by + cz + ..., which is a scalar product of the vectors, or arrays, (a,b,c,...) and (x,y,z,...), something like "Limbo", the so-called place between heaven and hell (in a figurative sense, of course), which does not exist without both the subject and the object. To continue, you can read about "Information Perceptions".

Question: Do you have another way of treating information?

Answer: Yes. As information is a measure of the amount of options, its first division could be into "known" and "unknown" options. There are no absolutely known options, hence the quotation marks. The criterion of "familiarity" needs a reference, a subject without which that determination does not make sense.

So, if we have a subject whose known options define a series (a1,b1,c1,...), and unknown (a2,b2,c2,...), for the same previously mentioned components of the object, then it is (a1,b1,c1,...) + (a2,b2,c2,...) = (a,b,c,...), that is a1 + a2 = a, b1 + b2 = b, c1 + c2 = c, ... which is in accordance with the law of information conservation.
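The additivity just described can be illustrated with the scalar product itself. This is a sketch with made-up numbers; the function name `perception_information` and the three-component vectors are assumptions for illustration only.

```python
# Sketch: S = ax + by + cz + ... splits into a "known" and an "unknown" part.


def perception_information(subject, obj):
    """Scalar product of the subject's and the object's components."""
    return sum(s * o for s, o in zip(subject, obj))


known = (2, 1, 0)      # (a1, b1, c1), illustrative values
unknown = (1, 3, 4)    # (a2, b2, c2), illustrative values
obj = (5, 2, 1)        # (x, y, z), illustrative values

total = tuple(k + u for k, u in zip(known, unknown))  # (a, b, c)

s_known = perception_information(known, obj)
s_unknown = perception_information(unknown, obj)
s_total = perception_information(total, obj)
print(s_known, s_unknown, s_total)  # 12 15 27
```

The total equals the sum of the two parts because the scalar product is linear in each argument.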

Question: Is it possible to have a compound of something that "does not exist"?

Answer: Heat alone is not enough to start a fire; combustible material and oxygen are also needed. Only when all the ingredients come together is something unlike them created. It is similar with "perception information".

Fire is neither paper nor oxygen, just as information of perception is neither of the things it couples, say abilities and limitations. However, the sub-question (which, by the way, I was asked) whether there are ingredients for that information somewhere in a universe is a matter of fantasy, but also of mathematics.

If phenomena such as the "ability" of subjects (to overcome the "limitations" of objects) with which they can be connected, which we call "information of perception", meet otherwise very strict truth criteria of the logic of mathematics itself, then the theory of them will also be a branch of mathematics. And then it is possible for science to accept or discover "universes" of such phenomena, because there are probably no such branches of mathematics that we do not discover over time as a strong basis for some reality.

Reciprocity

Question: Is there for the "information of perception" some special, convenient form and interpretation for game theory?

Answer: Probably, depending on the game. In the book "Physical Information", I modeled it so that the law of conservation applies to it and, in addition, so that it resembles as closely as possible the classic information, which I also call technical, as defined by Shannon (Claude Shannon, 1948).

In accordance with game theory, at least as far as its most promising competitive strategy is concerned, roughly speaking "an eye for an eye, kindness for kindness" (Tit-for-tat), we could treat the information of perception, S = ax + by + cz + ..., as a case of “reciprocity”. Then the first coefficients, say supply, are the components of the vector u = (a,b,c,...), and the second are the demand, the components of the vector v = (x,y,z,...). A balanced market will be one that has the maximum "information of perception", the scalar product of the given vectors, S = u ⋅ v.

The components of these vectors should then consistently represent some "intensity" of trading. Higher supply goes with higher matching demand and vice versa, lower with lower. The opposite of a successful economy would be a situation where there is little demand for large supply and high demand for small supply. It is clear that the scalar product (S) interpreted in this way follows the alleged market value with its value.
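That "larger with larger, smaller with smaller" gives the greatest scalar product is the content of the rearrangement inequality, and it can be checked by brute force. The sketch below uses made-up supply and demand intensities; the variable names are illustrative.

```python
from itertools import permutations

# Sketch: try every pairing of demand levels with a fixed supply vector
# and see which pairing maximizes or minimizes S = u . v.


def scalar_product(u, v):
    return sum(a * x for a, x in zip(u, v))


supply = (1, 4, 9)          # illustrative supply intensities, ascending
demand_levels = (2, 3, 7)   # illustrative demand intensities

results = {perm: scalar_product(supply, perm) for perm in permutations(demand_levels)}
best = max(results, key=results.get)
worst = min(results, key=results.get)

print(best, results[best])    # (2, 3, 7) 77
print(worst, results[worst])  # (7, 3, 2) 37
```

The best pairing orders demand the same way as supply, and the worst pairing reverses it, which matches the description of a successful and an unsuccessful economy.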

Demands for different goods and services can be different, so are the optimal offers. Then we have the "inequality" of the components of individual vectors, which brings us back to the previously discussed relativity of the meaning of information. The extreme value of the information we obtain with equal probabilities of the outcome of a given distribution will be inconsistent with the maximum "larger with larger and smaller with smaller", unless we adopt the previously mentioned relativity of perception.

The alleged inequality is then a matter of calibration, the use of different measures for different needs, in different situations. When, for example, the supply vector u finds itself in a situation of different demand v' when even better effects of trade could be achieved, there will be an association and even adaptation for greater information of perception S' = u ⋅ v'. This creates new connections and new optimums that redefine the notion of equality.

According to this, a more vital market is one within which exchanges of money, goods and services circulate better, that is, a more vital competitive game is one in which there is more "furiousness" of good moves between participants. The terms supply-demand then become an attacker-opponent, and both situations still formally correspond to the earlier interpretation: ability-limitation.

Efficacy

Question: Why do you think efficiency is the opposite of information (Dogma), are you sure?

Answer: Efficiency is the opposite of communication, so that more of the first means less need for the second, and that is a principled phenomenon that also applies to the physical universe (Regulation). More information in the transmission no longer means "of the same message", but of diversity and unpredictability. In this theory, greater communication is also greater exchange of energy over time, or momentums along the way, so greater efficiency means calming, and is in accordance with the principle of least action of physics (Action).

It is easier to learn formulas in mathematics than to discover them, and that is the same law that makes it easier to encode than decode, which is why half-truths (hidden information) flow between us more easily than the truth, and why more likely outcomes are more common.

Consistent with that, we write protocols in case of fire, earthquake, war danger, to shorten panic, wandering and unnecessary actions when the need arises. Anticipation and regulation are similar to routine work in terms of greater efficiency, i.e. reduction of unnecessary losses of energy and time, i.e. a tendency similar to inertia — to reduce actions and, therefore, communication.

That is why market supply and demand (Reciprocity) are spontaneously adjusted in order to become routine and lose the scope of communication, because the principle of least action (reduction of information) also affects them. They tend to lose their market vitality and need to add information, additional cost, to maintain and increase market dynamics, the flow of goods and services, vigor.

This "strange" organization of free networks is actually a consequence of their greater efficiency. Hence the observation that a small number (six) of steps suffices for a connection, and hence the loss of market efficiency that occurred in cases of known state interventions, like state socialism (Information Stories, 1.6 Equality).

Options

Question: Is there more uncertainty in many known options or in a few unknown ones?

Answer: Complicated question, but undoubtedly interesting. It would be useful to know the answer, but it could rather be responses from some meticulous systematists, statisticians. If that happens, I believe, they will notice that the "unknown" options are also present in the "known" ones, and that the asked question makes sense.

Namely, as in the story of the hunter who hunts his prey with a trick and, knowing more, succeeds, we are all sometimes hunters, sometimes prey, sometimes more and sometimes less informed. After all, we communicate also because we do not have all the (information) we need. Uncertainty is therefore both objective and relative, which makes this problem more difficult.

For example, of assets it is said that "without risk there is no profit", which gives money a "male" sign (Emancipation), so that companies more tolerant of risk, of freedoms regarding profit, have, or should have, more successful economies. Also, a "feminized" (Information Stories, 1.4 Feminization) society could find its happiness in other ways of living.

Another example is the history of mathematics, philosophy or science, which was initially the story of a risky, ostentatious, ungrateful and generally "masculine" phenomenon. In the sequel, it is (yet to be) "feminized". No matter how incredible it may seem to us today, science will slowly lose the aspect of "rushing towards the unknown" and become more and more safe, perhaps imperceptibly to contemporaries. It will turn to its future values, which are efficiency, usefulness, less futility, and more of the well-trodden and tested.

If this is already happening, for example with the "best" scientific journals and in general ways of measuring scientific ratings, then even without the above-mentioned statistics, I predict that we have less uncertainty in many options of occupations or jobs known today, than in some but unknown ones.

Originality

Question: If lies make information more attractive, then what attracts scientists and mathematicians to seek the truth?

Answer: A fun and instructive question. Learning the laws of nature and the attitudes of mathematics is easier than discovering them, so we prefer to just learn and follow them. But the principle of (minimalism) information is also hidden in discovering new things, at least in the effort to "have an easier part tomorrow". Also, there are reasons from the attachments (Dogma) that you mention.

Namely, when an explanation of another person leaves an impression on us, about something we did not know before, we will later defend it as if it were ours. We often witness that blind defense of the "truth" of a person who seems to be immune to all arguments, deaf and blind to reasons. That is why an excessive lie is also unfavorable.

They are the subject of the "Theory of Stupidity", attached, to which this explanation of mine could be attached. During Nazism, Dietrich Bonhoeffer watched "ordinary" Germans, citizens of his own country who were once thinkers and poets, turn into collective cowards, thieves and criminals.

He determined that stupidity is more dangerous than many things, because while "one can protest against evil; it can be exposed and prevented by the use of force, we are helpless against stupidity. Nothing is achieved here either through protests or the use of force. The reasons fall on deaf ears."

That is why we are sometimes tempted to overcome our own fears of uncertainty and to find some new truth, to give ourselves a break by defending it like the blind, rejoicing in the knowledge that "our truth" is irrefutable. However, for the most part, behind the effort of discovery lies the desire for relaxation, the final surrender to the laws of laziness, and what remains is the submission of excess information to the law of conservation, i.e. curiosity of intelligence and the need for temptations instead of boredom.

Boss

Question: Why are there so many incompetent bosses everywhere?

Answer: A frequently asked question and a common answer of mine. Before that, the closest I could find by googling is this in the attachment (Incompetent Bosses). But let's be clear, the nearest star to our Sun is still very far away.

The deepest reason for subordination is the (general) principle of minimalism of information. Just as all systems of the known and unknown cosmos "aspire" to states of higher probability, they equally "prefer" to realize states of lesser information. These are conditions with fewer options, more limitations of routine, safety, efficiency. That is why the servant and the master are subordinate to each other (by mastering you also may reduce your options).

The reason is a spontaneous, natural, mild but persistent tendency to avoid excess risk, action and information; the excuses are various needs (economic, military, emotional). When, in that combination of superior and inferior, both sides manage to get rid of their excess uncertainty, the coupling succeeds.

By the way, the situation is the same with companies. They need leadership not only for the sake of some "competence", but also as the glue of the principled minimalism of information. That is why a boss who wants to command, no matter whom, is often needed more than one who would rather work than lead. The need for security, that is, stability, stands here against uncertainty.

That is why the work environment of "incompetent bosses" is more morbid than vital, more obedient and less creative, because everyone in the structure "suffers" from the same urgencies. Loosening the rigidity of the bosses in order to release the originality of the workers risks the disintegration of the system for the sake of the competitiveness of the company itself.

Question: Why are there so many incompetents in public administrations?

Answer: Democracy is an El Dorado for such people. It is known that extremely intelligent people are not popular, but even those who are only a little less capable have lower chances in elections, as do those who are below the level with which the masses most easily identify.

It suits elected officials not to surround themselves with potentially dangerous competitors, because of "everyone's equal right to vote and to be elected." Anyone whom the position could make more visible can endanger them, so seemingly the best strategy is to surround yourself with weaker people whose shortcomings can be used to keep them on the reins. Obligations to those who earned merit before the election are an additional burden.

Private companies stand on the right of property, which protects the owners from the competition of ordinary workers, but does not protect their deputies (that is why managers are often not among the most capable, in addition to the reasons above). But, to avoid confusion, the former right to rule on the basis of blood origin, that of kings and nobility, also had its drawbacks.

Levelness

Question: What are the consequences of insisting on equality?

Answer: Uh, there are so many that I can't believe we would want to list them. Moral and competence issues are a small, though no less important, part of that spectrum (The Equality Conundrum), but for now I will stick to the narrower part of my information theory, the one that goes with the thesis that "nature does not like equality" (Equality).

Living beings, as aggregates of possibilities, have greater vitality in equal chances (when the information is greater) and that is good for development, but nature will reciprocate with the principle of least action.

For example, the legal system will become more complex and expensive. Under a free market, the gap will open along the line between holders and non-holders of money and power; under psycho-physical equality, along the lines of possible psycho-physical differences. In general, events will spontaneously move towards stratification wherever equality is insisted on.

Then there is "feminization," (Information Stories, p. 15) or the growth of entropy. I use the first term, because physics still teaches that the growth of entropy is the growth of information, which according to my theory are the opposite changes.

Like grains of sand on the beach, whose molecules mostly deal only with their internal movements and only occasionally and reluctantly move to a breath of wind or water, the society of equality over time becomes self-centered and externally static. Individuals become duller, sort themselves into groups of similar specialty, become very obedient, and generally evolve into living individuals with minimal information for the sake of the whole.

Question: Do you have a modern example?

Answer: Insisting on the equality of male-female relations today leads to the declaration of a person's sex on the basis of free will, simple feeling, the experience of oneself at a given moment. It is done with the aim of equalizing the rights of women with those of men. But, for example, in sports, when a male competitor transfers to the female category, the chances of biological women to win medals decrease.

Question: How would a numerical explanation go?

Answer: If you mean the classical Shannon information, it is known that his information function S = -p1 log p1 - ... - pn log pn has a maximum value when the probabilities are equal, p1 = ... = pn. By the way, it is a special case (almost, Physical Information) of the information perception, S = ax + by + cz + ..., so we can say that the same is true for this one.
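The claim about the maximum can be checked numerically. Here is a minimal sketch, assuming base-2 logarithms (the text does not fix the base) and three made-up distributions over four outcomes; the function name and the numbers are only for illustration.

```python
import math


def shannon_information(probabilities):
    """Return S = -sum(p * log2(p)) in bits, skipping outcomes with p = 0."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Hypothetical distributions over four outcomes.
uniform = [0.25, 0.25, 0.25, 0.25]   # equal chances
skewed = [0.70, 0.10, 0.10, 0.10]    # one outcome favored
certain = [1.0, 0.0, 0.0, 0.0]       # no uncertainty at all

s_uniform = shannon_information(uniform)
s_skewed = shannon_information(skewed)
s_certain = shannon_information(certain)

print(s_uniform, s_skewed, s_certain)
```

With equal chances the value is log2(4) = 2 bits, the largest possible for four outcomes; any departure from equality lowers it, down to zero for a certain outcome.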

In the general case of perception information I will demonstrate another effect, using two or three items. When the abilities are equal to each other, as well as the constraints, there will be S' = (a+b)/2, or S" = (a+b+c)/3, and then S' > S" whenever a + b > 2c. In other words, for a sufficiently small c < (a+b)/2, information perception will decrease by stratification, that is, by increasing the number of divisions, even when the equality of the first factors is maintained.
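The inequality follows from 3(a+b) > 2(a+b+c), which reduces to a + b > 2c. A small sketch with hypothetical values of a, b and c (chosen only for illustration) shows the three possible cases:

```python
def mean_information(values):
    """Arithmetic mean of the summands, as in S' = (a+b)/2 and S" = (a+b+c)/3."""
    return sum(values) / len(values)


# Hypothetical values for the two existing factors.
a, b = 4.0, 6.0
s_two = mean_information([a, b])   # S' = (a + b) / 2
threshold = (a + b) / 2            # the bound on c from the text

results = {}
for c in (2.0, 5.0, 8.0):          # below, at, and above the threshold
    s_three = mean_information([a, b, c])   # S" = (a + b + c) / 3
    results[c] = s_three
    print(f"c = {c}: S' = {s_two}, S\" = {s_three}, decreases: {s_three < s_two}")
```

Only the first value, c = 2 < 5, lowers the result; at c = 5 nothing changes, and for c = 8 the added factor raises it.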

Based on the principled minimalism of information, a new factor will emerge spontaneously with a value less than the arithmetic mean of the previous ones, and this is easily achieved, especially considering the importance of the existing ones.

Slavery

Question: Why do you think that undercutting billionaires could be bad?

Answer: First read and try to understand the article "Value", about the taxation of higher income.

In short, because a free market like free networks (mathematical graph theory) becomes more efficient when it separates a small number of nodes (hubs) with many links from a large number of nodes with poor links. By intervening, making the network less free, we also make it less efficient. But who knows what is actually better for us, rapid economic development or something else?
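As a rough illustration only, here is a sketch that compares two toy networks of nine nodes: a ring, where every node has the same two links, and a star, where one hub holds all the links. "Efficiency" is taken here to mean the average number of steps between two nodes; that reading, the two toy networks and all the function names are assumptions of this sketch, not definitions from the article "Value".

```python
from collections import deque


def average_path_length(adjacency):
    """Mean shortest-path length over all ordered node pairs (connected graph assumed)."""
    nodes = list(adjacency)
    total = 0
    pairs = 0
    for source in nodes:
        # Breadth-first search gives shortest distances in an unweighted graph.
        distance = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adjacency[u]:
                if v not in distance:
                    distance[v] = distance[u] + 1
                    queue.append(v)
        for target in nodes:
            if target != source:
                total += distance[target]
                pairs += 1
    return total / pairs


def ring(n):
    """Every node is linked to its two neighbors: all nodes are equal."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def star(n):
    """Node 0 is a hub linked to all the others, which have a single link each."""
    adjacency = {0: list(range(1, n))}
    for i in range(1, n):
        adjacency[i] = [0]
    return adjacency


ring_avg = average_path_length(ring(9))
star_avg = average_path_length(star(9))
print(ring_avg, star_avg)
```

In this toy case the network with a hub connects its nodes in fewer steps on average (16/9 against 2.5), even though it has one link fewer than the ring.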

Question: Inequality is the driving force behind the development of society, that’s how I understood the explanation of the "taxation of higher income", and it causes instability that can tear society apart. Do you have anything to add?

Answer: Both, and especially the latter, are true only at first glance. We like to think that we are not a "slave-owning society" (although to a large extent we are) and we do not appreciate that, so accepting inequality could be said to be risky. But remember the saying that a satisfied slave is the greatest opponent of liberation from slavery.

Otherwise, we are squeezed between the spontaneous reduction of information (freedom) and the law of its conservation. Now I’m talking about (my) information theory. As living beings, we have information in excess, which is why dead matter defeats us in duration and why we actually (secretly) wish for that victory. Therefore, we gladly replace freedom with safety, efficiency, comfort; we voluntarily surrender the surplus of opportunities with any convenient excuse and, among other things, that is why it is possible to subjugate the masses.

Slave-owning human societies would not exist without it. I am not discovering hot water when I note that slavery did not last simply because we are a species that developed too fast for the slave-owning economy. Classical slave-owning societies could not compete with modern technologies and production improvements in making a profit or in the thirst for change. Here the principle of minimalism is defeated by our excess vitality (risk, action), which sets us apart from other biological species.